1. Overview

In this tutorial, we’ll discuss some of the design principles and patterns that have been established over time to build highly concurrent applications.

However, it’s worthwhile to note that designing a concurrent application is a wide and complex topic, and hence no tutorial can claim to be exhaustive in its treatment. What we’ll cover here are some of the popular tricks often employed!

2. Basics of Concurrency

Before we proceed any further, let’s spend some time on the basics. To begin with, we must be clear about what we mean by a concurrent program. We call a program concurrent if multiple computations are happening at the same time.

Now, note that we’ve mentioned computations happening at the same time — that is, they’re in progress at the same time. However, they may or may not be executing simultaneously. It’s important to understand the difference as simultaneously executing computations are referred to as parallel.

2.1. How to Create Concurrent Modules?

It’s important to understand how we can create concurrent modules. There are numerous options, but we’ll focus on two popular choices here:

- Process: A process is an instance of a running program that is isolated from other processes on the same machine. Each process has its own address space and is scheduled independently. Hence, processes normally don’t share memory, and they must communicate by passing messages.

- Thread: A thread, on the other hand, is a unit of execution within a process. A program can have multiple threads sharing the same memory space. However, each thread has its own stack and priority. A thread can be native (scheduled by the operating system) or green (scheduled by a runtime library).
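
To make the thread description concrete, here is a minimal Java sketch, assuming two hypothetical worker threads named worker-a and worker-b. Each thread keeps its own `local` variable on its own stack, while both write into one list that lives in the memory they share. The class and method names are illustrative only.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class SharedHeapDemo {
    static List<String> run() throws InterruptedException {
        // One list on the heap, visible to every thread in this process
        List<String> shared = new CopyOnWriteArrayList<>();

        Runnable task = () -> {
            int local = 0; // lives on the stack of whichever thread runs this
            for (int i = 0; i < 3; i++) {
                local++;
            }
            shared.add(Thread.currentThread().getName() + " counted " + local);
        };

        Thread a = new Thread(task, "worker-a");
        Thread b = new Thread(task, "worker-b");
        a.start();
        b.start();
        a.join(); // wait for both threads before reading the results
        b.join();
        return shared;
    }

    public static void main(String[] args) throws InterruptedException {
        // The order of the two entries depends on thread scheduling
        run().forEach(System.out::println);
    }
}
```

A thread-safe list is used here on purpose; with a plain `ArrayList`, the two concurrent `add` calls would themselves be a race.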

2.2. How Do Concurrent Modules Interact?

Ideally, concurrent modules wouldn’t have to communicate, but that’s often not the case. This gives rise to two models of concurrent programming:

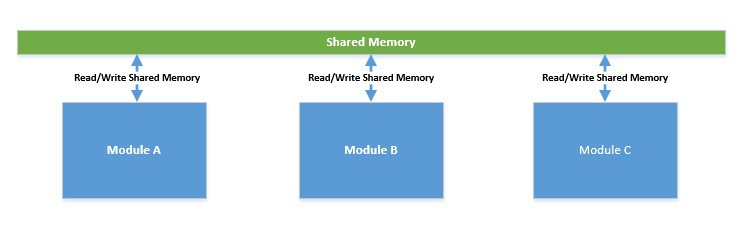

- Shared Memory: In this model, concurrent modules interact by reading and writing shared objects in the memory. This often leads to the interleaving of concurrent computations, causing race conditions. Hence, it can non-deterministically lead to incorrect states.

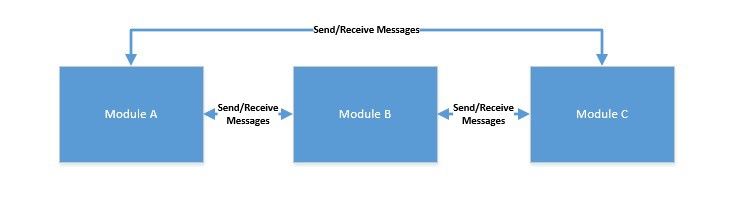

- Message Passing: In this model, concurrent modules interact by passing messages to each other through a communication channel. Here, each module processes incoming messages sequentially. Since there’s no shared state, it’s relatively easier to program, but this still isn’t free from race conditions!
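
As a rough illustration of the message-passing model, the sketch below uses a `BlockingQueue` as the communication channel between a producer and a consumer thread. The `DONE` marker and the method name `sumViaChannel` are assumptions made for this example. The consumer handles one message at a time, and only the consumer touches the running total.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class MessagePassingDemo {
    private static final int DONE = -1; // hypothetical end-of-stream marker

    static int sumViaChannel(int upTo) throws InterruptedException {
        BlockingQueue<Integer> channel = new LinkedBlockingQueue<>();
        int[] total = new int[1]; // written only by the consumer thread

        Thread consumer = new Thread(() -> {
            try {
                // Process incoming messages sequentially until DONE arrives
                for (int msg = channel.take(); msg != DONE; msg = channel.take()) {
                    total[0] += msg;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        // The producer never touches total; it only sends messages
        for (int i = 1; i <= upTo; i++) {
            channel.put(i);
        }
        channel.put(DONE);

        consumer.join(); // after join, the consumer's writes are visible here
        return total[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(sumViaChannel(100)); // prints 5050
    }
}
```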

2.3. How Do Concurrent Modules Execute?

It’s been a while since Moore’s Law hit a wall with respect to processor clock speed. To keep growing, chip makers started packing multiple processors onto the same chip, commonly called a multicore processor. Even so, it’s not common to hear about processors that have more than 32 cores.

Now, we know that a single core can execute only one thread, or set of instructions, at a time. However, the number of processes and threads can be in hundreds and thousands, respectively. So, how does it really work? This is where the operating system simulates concurrency for us. The operating system achieves this by time-slicing — which effectively means that the processor switches between threads frequently, unpredictably, and non-deterministically.

3. Problems in Concurrent Programming

As we go about discussing principles and patterns to design a concurrent application, it would be wise to first understand what the typical problems are.

For the most part, our experience with concurrent programming involves using native threads with shared memory. Hence, we’ll focus on some of the common problems that arise from it:

- Mutual Exclusion (Synchronization Primitives): Interleaving threads need exclusive access to shared state or memory to ensure the correctness of programs. Synchronizing access to shared resources is a popular way to achieve mutual exclusion. There are several synchronization primitives available, for example, a lock, monitor, semaphore, or mutex. However, programming for mutual exclusion is error-prone and can often lead to performance bottlenecks. There are several well-known issues related to it, such as deadlock and livelock.

- Context Switching (Heavyweight Threads): Every operating system has native, albeit varied, support for concurrent modules like processes and threads. As discussed, one of the fundamental services that an operating system provides is scheduling threads to execute on a limited number of processors through time-slicing. This effectively means that threads are frequently switched between different states. Each time, their current state needs to be saved and later restored. This is a time-consuming activity that directly impacts the overall throughput.
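
The mutual exclusion described above can be sketched with a lock guarding a shared counter. This is a minimal illustration, not a recommendation; `LockedCounter` and `demo` are names invented for the example. Without the lock, concurrent `count++` operations could interleave and lose updates.

```java
import java.util.concurrent.locks.ReentrantLock;

class LockedCounter {
    private final ReentrantLock lock = new ReentrantLock();
    private int count; // shared state, accessed only while holding the lock

    void increment() {
        lock.lock();
        try {
            count++; // read-modify-write is safe because only one thread is in here
        } finally {
            lock.unlock(); // always release, even if the body throws
        }
    }

    int get() {
        lock.lock();
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    static int demo(int threads, int perThread) throws InterruptedException {
        LockedCounter counter = new LockedCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo(4, 10_000)); // prints 40000
    }
}
```

The price of this correctness is that every increment is serialized through the lock, which is exactly the kind of bottleneck the bullet above warns about.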

4. Design Patterns for High Concurrency

Now that we understand the basics of concurrent programming and its common problems, it’s time to look at some of the common patterns for avoiding them. We must reiterate that concurrent programming is a difficult task that requires a lot of experience. Hence, following some of the established patterns can make the task easier.

4.1. Actor-Based Concurrency

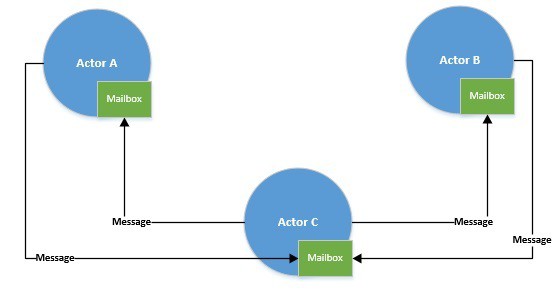

The first design we will discuss with respect to concurrent programming is called the Actor Model. This is a mathematical model of concurrent computation that basically treats everything as an actor. Actors can pass messages to each other and, in response to a message, can make local decisions. This was first proposed by Carl Hewitt and has inspired a number of programming languages.

Actors were the primary concurrency construct in Scala’s original Actors library (since deprecated in favor of Akka). Actors are normal objects in Scala that we create by extending the Actor trait. Furthermore, the Scala Actors library provides many useful actor operations:

```scala
import scala.actors.Actor

class MyActor extends Actor {
  def act(): Unit = {
    while (true) {
      // Block until a message arrives in this actor's mailbox
      receive {
        case message =>
          // Perform some action, for example, print the message
          println(message)
      }
    }
  }
}
```

In the example above, the call to the receive method inside an infinite loop suspends the actor until a message arrives. Upon arrival, the message is removed from the actor’s mailbox, and the necessary actions are taken.

The actor model eliminates one of the fundamental problems with concurrent programming: shared memory. Actors communicate through messages, and each actor processes messages from its own mailbox sequentially. However, we still execute actors over a thread pool, and we’ve seen that native threads can be heavyweight and, hence, limited in number.
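
The mailbox idea can be sketched in plain Java without any actor library. This is a simplified illustration under stated assumptions, not how Scala Actors or Akka are implemented: the class `MailboxActor`, the `tell` method, and the `"stop"` message are all hypothetical. The actor’s state is touched only by the actor’s own thread, and other threads interact with it solely by sending messages.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class MailboxActor implements Runnable {
    private static final String STOP = "stop"; // hypothetical shutdown message

    private final BlockingQueue<String> mailbox = new LinkedBlockingQueue<>();
    private final List<String> handled = new ArrayList<>(); // private actor state

    // Other threads never touch the actor's state; they only send messages
    void tell(String message) {
        mailbox.add(message);
    }

    @Override
    public void run() {
        try {
            while (true) {
                String message = mailbox.take(); // suspend until a message arrives
                if (STOP.equals(message)) {
                    return;
                }
                handled.add(message.toUpperCase()); // local decision per message
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    static List<String> demo() throws InterruptedException {
        MailboxActor actor = new MailboxActor();
        Thread thread = new Thread(actor);
        thread.start();
        actor.tell("hello");
        actor.tell("world");
        actor.tell(STOP);
        thread.join(); // after join, it is safe to read the actor's state
        return actor.handled;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo()); // prints [HELLO, WORLD]
    }
}
```

Note that this sketch dedicates one native thread to the actor, which is the cost the paragraph above points out; real actor runtimes share a thread pool across many actors.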

There are, of course, other patterns that can help us here — we’ll cover those later!

4.2. Event-Based Concurrency

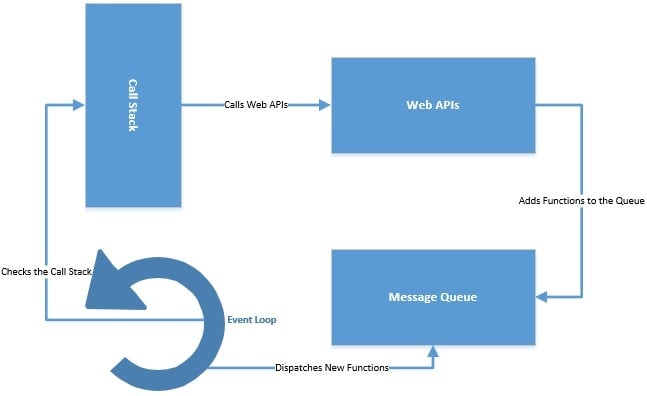

Event-based designs explicitly address the problem that native threads are costly to spawn and operate. One of the event-based designs is the event loop. The event loop works with an event provider and a set of event handlers. In this set-up, the event loop blocks on the event provider and dispatches an event to an event handler on arrival.

Basically, the event loop is nothing but an event dispatcher! The event loop itself can be running on just a single native thread. So, what really transpires in an event loop? Let’s look at the pseudo-code of a really simple event loop for an example:

```
while (true) {
    events = getEvents();
    for (e in events)
        processEvent(e);
}
```

Basically, all our event loop does is continuously look for events and, when it finds them, process them. The approach is really simple, but it reaps the benefits of an event-driven design.

Building concurrent applications using this design gives the application more control over when and in what order work runs. Also, because handlers run one at a time on the loop’s thread, they don’t need locks to coordinate with each other, which eliminates some of the typical problems of multi-threaded applications, such as deadlock.
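
The pseudo-code can be turned into a small runnable Java sketch. Here a `BlockingQueue` stands in for the event provider and a map of callbacks stands in for the event handlers; the event types `"greet"`, `"part"`, and `"shutdown"` are made up for the example, and the whole class is an illustration rather than a production design.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

class SimpleEventLoop {
    static final class Event {
        final String type;
        final String payload;

        Event(String type, String payload) {
            this.type = type;
            this.payload = payload;
        }
    }

    private final BlockingQueue<Event> queue = new LinkedBlockingQueue<>();
    private final Map<String, Consumer<String>> handlers = new HashMap<>();

    // Register handlers before starting the loop
    void on(String type, Consumer<String> handler) {
        handlers.put(type, handler);
    }

    // May be called from any thread; the queue is thread-safe
    void submit(String type, String payload) {
        queue.add(new Event(type, payload));
    }

    // Runs on the calling thread until a "shutdown" event arrives
    void run() throws InterruptedException {
        while (true) {
            Event event = queue.take(); // block on the event provider
            if ("shutdown".equals(event.type)) {
                return;
            }
            Consumer<String> handler = handlers.get(event.type);
            if (handler != null) {
                handler.accept(event.payload); // handlers run one at a time
            }
        }
    }

    static List<String> demo() throws InterruptedException {
        SimpleEventLoop loop = new SimpleEventLoop();
        List<String> log = new ArrayList<>();
        loop.on("greet", name -> log.add("hello " + name));
        loop.on("part", name -> log.add("bye " + name));
        loop.submit("greet", "ada");
        loop.submit("part", "ada");
        loop.submit("shutdown", "");
        loop.run();
        return log;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo()); // prints [hello ada, bye ada]
    }
}
```

Because every handler runs on the loop’s thread, the plain `ArrayList` used for the log needs no synchronization. The flip side is that a slow handler delays every event queued behind it.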

JavaScript implements the event loop to offer asynchronous programming. It maintains a call stack to keep track of the functions currently executing. It also maintains an event queue of callbacks waiting to be processed. Whenever the call stack is empty, the event loop takes the next callback from the event queue and pushes it onto the stack. All async calls are dispatched to the web APIs, typically provided by the browser.

The event loop itself can be running off a single thread, but the web APIs provide separate threads.

4.3. Non-Blocking Algorithms

In non-blocking algorithms, the suspension of one thread does not lead to the suspension of other threads. We’ve seen that we can only have a limited number of native threads in our application. An algorithm that blocks a thread therefore reduces throughput significantly and prevents us from building highly concurrent applications.

Non-blocking algorithms typically make use of the compare-and-swap (CAS) atomic primitive provided by the underlying hardware. The hardware compares the contents of a memory location with a given value and, only if they are the same, updates the location to a new given value. This may look simple, but it effectively gives us an atomic operation that would otherwise require synchronization.
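
A common way to use compare-and-swap is a retry loop: read the current value, compute the new one, and try to install it, repeating if another thread got there first. The sketch below shows this with `AtomicInteger.compareAndSet`; the class name `CasCounter` and the `demo` helper are illustrative. In practice, `AtomicInteger.incrementAndGet` already does this for us.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasCounter {
    private final AtomicInteger value = new AtomicInteger(0);

    int increment() {
        while (true) {
            int current = value.get();
            int next = current + 1;
            // Succeeds only if no other thread changed the value since we read it
            if (value.compareAndSet(current, next)) {
                return next;
            }
            // Otherwise another thread won the race; loop and retry with a fresh read
        }
    }

    int get() {
        return value.get();
    }

    static int demo(int threads, int perThread) throws InterruptedException {
        CasCounter counter = new CasCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo(4, 10_000)); // prints 40000
    }
}
```

No thread ever waits while holding a lock here: a failed `compareAndSet` just means some other thread made progress.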

This means that we have to write new data structures and libraries that make use of this atomic operation. This has given us a large set of wait-free and lock-free implementations in several languages. Java has several non-blocking atomic classes, such as AtomicBoolean, AtomicInteger, AtomicLong, and AtomicReference.

Consider an application where multiple threads try to execute the same code:

```java
boolean open = false;

if (!open) {
    // Do Something
    open = true; // mark as entered; the check and this write are still not atomic
}
```

Clearly, the code above is not thread-safe: two threads can both see open as false before either sets it to true, so its behavior in a multi-threaded environment can be unpredictable. Our options here are either to synchronize this piece of code with a lock or to use an atomic operation:

```java
import java.util.concurrent.atomic.AtomicBoolean;

AtomicBoolean open = new AtomicBoolean(false);

// Atomically flips false -> true; only one thread can succeed
if (open.compareAndSet(false, true)) {
    // Do Something
}
```

As we can see, using a non-blocking class like AtomicBoolean helps us write thread-safe code without incurring the drawbacks of locks!
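
To see the guarantee in action, the following sketch lets several threads race for the same `AtomicBoolean` and counts how many of them get to run the guarded section. `RunOnceGate`, `tryEnter`, and `demo` are hypothetical names chosen for this example.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;

class RunOnceGate {
    private final AtomicBoolean open = new AtomicBoolean(false);
    private final AtomicInteger executions = new AtomicInteger();

    void tryEnter() {
        // Only the thread that flips false -> true runs the guarded section
        if (open.compareAndSet(false, true)) {
            executions.incrementAndGet(); // stands in for "Do Something"
        }
    }

    static int demo(int threads) throws InterruptedException {
        RunOnceGate gate = new RunOnceGate();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(gate::tryEnter);
            workers[t].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return gate.executions.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo(8)); // prints 1
    }
}
```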

5. Support in Programming Languages

We’ve seen that there are multiple ways to construct a concurrent module. While the programming language does make a difference, what matters most is how the underlying operating system supports the concept. However, as thread-based concurrency backed by native threads is hitting new walls with respect to scalability, we always need new options.

Implementing some of the design practices we discussed in the last section does prove to be effective. However, we must keep in mind that it complicates programming. What we truly need is something that provides the power of thread-based concurrency without its undesirable effects.

One solution available to us is green threads. Green threads are threads that are scheduled by the runtime library instead of being scheduled natively by the underlying operating system. While this doesn’t get rid of all the problems in thread-based concurrency, it certainly can give us better performance in some cases.

Now, it’s not trivial to use green threads unless the programming language we choose supports them. Not every programming language has this built-in support. Also, what we loosely call green threads can be implemented in quite different ways by different programming languages. Let’s see some of the options available to us.

5.1. Goroutines in Go

Goroutines in the Go programming language are lightweight threads. A goroutine is a function or method that runs concurrently with other functions or methods. Goroutines are extremely cheap, as they start with a stack of only a few kilobytes.

Most importantly, goroutines are multiplexed onto a smaller number of native threads. Moreover, goroutines communicate with each other using channels, thereby avoiding access to shared memory. We get pretty much everything we need from the language runtime, without any extra effort on our part!
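
The idea of multiplexing many units of work onto a few native threads can be loosely illustrated in Java with a fixed thread pool. This is only an analogy and not how the Go runtime works: a goroutine can be suspended and resumed in the middle of a function, whereas each pool task below simply runs to completion. The class and method names are invented for the example.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class MultiplexedTasks {
    // Returns {tasks completed, distinct native threads that ran them}
    static int[] demo(int tasks, int poolSize) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        Set<String> carriers = ConcurrentHashMap.newKeySet();
        AtomicInteger completed = new AtomicInteger();

        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                // Record which native thread happened to run this task
                carriers.add(Thread.currentThread().getName());
                completed.incrementAndGet();
            });
        }

        pool.shutdown(); // accept no new tasks, finish the queued ones
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return new int[] {completed.get(), carriers.size()};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] result = demo(1_000, 4);
        System.out.println(result[0] + " tasks ran on " + result[1] + " threads");
    }
}
```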

5.2. Processes in Erlang

In Erlang, each thread of execution is called a process. However, it’s not quite like the process we’ve discussed so far! Erlang processes are lightweight, with a small memory footprint, and are fast to create and dispose of, with low scheduling overhead.

Under the hood, Erlang processes are nothing but functions whose scheduling is handled by the runtime. Moreover, Erlang processes don’t share any data, and they communicate with each other by message passing. This is the reason why we call them “processes” in the first place!

5.3. Fibers in Java (Proposal)

The story of concurrency with Java has been a continuous evolution. Java did have support for green threads, at least for Solaris operating systems, to begin with. However, this was discontinued due to hurdles beyond the scope of this tutorial.

Since then, concurrency in Java has been all about native threads and how to work with them smartly! Project Loom set out to change this by introducing continuations together with a new lightweight concurrency abstraction, originally called fibers and since released as virtual threads (finalized in Java 21), which may change the way we write concurrent applications in Java!

This is just a sneak peek at what is available in different programming languages. There are many more interesting ways in which other programming languages have tried to deal with concurrency.

Moreover, it’s worth noting that a combination of design patterns discussed in the last section, together with the programming language support for a green-thread-like abstraction, can be extremely powerful when designing highly concurrent applications.

6. High Concurrency Applications

A real-world application often has multiple components interacting with each other over the wire. We typically access it over the internet, and it consists of multiple services like proxy service, gateway, web service, database, directory service, and file systems.

How do we ensure high concurrency in such situations? Let’s explore some of these layers and the options we have for building a highly concurrent application.

As we’ve seen in the previous section, the key to building high concurrency applications is to use some of the design concepts discussed there. We need to pick the right software for the job — those that already incorporate some of these practices.

6.1. Web Layer

The web layer is typically the first layer where user requests arrive, so provisioning for high concurrency is essential here. Let’s look at some of the options:

- Node (also called NodeJS or Node.js) is an open-source, cross-platform JavaScript runtime built on Chrome’s V8 JavaScript engine. Node works quite well in handling asynchronous I/O operations. The reason Node does it so well is that it implements an event loop over a single thread. With the help of callbacks, the event loop handles all blocking operations, such as I/O, asynchronously.

- nginx is an open-source web server that we commonly use as a reverse proxy, among other things. The reason nginx provides high concurrency is that it uses an asynchronous, event-driven approach. nginx runs a single-threaded master process that maintains the worker processes, which do the actual processing. Each worker process handles many requests concurrently.

6.2. Application Layer

While designing an application, there are several tools to help us build for high concurrency. Let’s examine a few of these libraries and frameworks that are available to us:

- Akka is a toolkit written in Scala for building highly concurrent and distributed applications on the JVM. Akka’s approach towards handling concurrency is based on the actor model we discussed earlier. Akka creates a layer between the actors and the underlying systems. The framework handles the complexities of creating and scheduling threads, receiving and dispatching messages.

- Project Reactor is a reactive library for building non-blocking applications on the JVM. It’s based on the Reactive Streams specification and focuses on efficient message passing and demand management (back-pressure). Reactor operators and schedulers can sustain high throughput rates for messages. Several popular frameworks build on Reactor, including Spring WebFlux and RSocket.

- Netty is an asynchronous, event-driven network application framework. We can use Netty to develop highly concurrent protocol servers and clients. Netty leverages NIO, a collection of Java APIs that offers non-blocking data transfer through buffers and channels. It offers us several advantages, such as better throughput, lower latency, lower resource consumption, and less unnecessary memory copying.
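
The demand management (back-pressure) mentioned for Reactive Streams can be sketched with the JDK’s own `java.util.concurrent.Flow` interfaces (Java 9 and later), which mirror the Reactive Streams specification. This example deliberately uses the JDK classes rather than Project Reactor itself, and the subscriber class is hypothetical. The subscriber asks for exactly one item at a time, so the publisher can never push more than the subscriber has requested.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;

class BackPressureDemo {
    static final class OneAtATimeSubscriber implements Flow.Subscriber<Integer> {
        final List<Integer> received = new CopyOnWriteArrayList<>();
        final CountDownLatch done = new CountDownLatch(1);
        private Flow.Subscription subscription;

        @Override
        public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(1); // signal demand for the first item only
        }

        @Override
        public void onNext(Integer item) {
            received.add(item * 10);
            subscription.request(1); // ready for the next item
        }

        @Override
        public void onError(Throwable error) {
            done.countDown();
        }

        @Override
        public void onComplete() {
            done.countDown();
        }
    }

    static List<Integer> demo() throws InterruptedException {
        OneAtATimeSubscriber subscriber = new OneAtATimeSubscriber();
        try (SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>()) {
            publisher.subscribe(subscriber);
            for (int i = 1; i <= 5; i++) {
                publisher.submit(i); // blocks if the subscriber's buffer is full
            }
        } // close() signals onComplete once the submitted items are delivered
        subscriber.done.await();
        return subscriber.received;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo()); // prints [10, 20, 30, 40, 50]
    }
}
```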

6.3. Data Layer

Finally, no application is complete without its data, and data comes from persistent storage. When we discuss high concurrency with respect to databases, most of the focus remains on the NoSQL family. This is primarily owing to the linear scalability that NoSQL databases can offer, which is hard to achieve with relational databases. Let’s look at two popular tools for the data layer:

- Cassandra is a free and open-source NoSQL distributed database that provides high availability, high scalability, and fault-tolerance on commodity hardware. However, Cassandra does not provide ACID transactions spanning multiple tables. So if our application does not require strong consistency and transactions, we can benefit from Cassandra’s low-latency operations.

- Kafka is a distributed streaming platform. Kafka stores a stream of records in categories called topics. It can provide linear horizontal scalability for both producers and consumers of the records while, at the same time, providing high reliability and durability. Partitions, replicas, and brokers are some of the fundamental concepts on which it provides massively-distributed concurrency.

6.4. Cache Layer

Well, no web application in the modern world that aims for high concurrency can afford to hit the database every time. That leaves us to choose a cache — preferably an in-memory cache that can support our highly concurrent applications:

- Hazelcast is a distributed, cloud-friendly, in-memory object store and compute engine that supports a wide variety of data structures such as Map, Set, List, MultiMap, RingBuffer, and HyperLogLog. It has built-in replication and offers high availability and automatic partitioning.

- Redis is an in-memory data structure store that we primarily use as a cache. It provides an in-memory key-value database with optional durability. The supported data structures include strings, hashes, lists, and sets. Redis has built-in replication and offers high availability and automatic partitioning. In case we do not need persistence, Redis can offer us a feature-rich, networked, in-memory cache with outstanding performance.
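
The reason a cache helps can be shown with a small in-process sketch. It does not use the Hazelcast or Redis APIs; a `ConcurrentHashMap` stands in for the cache, and the `loader` function stands in for a database query, both assumptions made for this example. Repeated reads of the same key reach the backing store only once.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

class ReadThroughCache<K, V> {
    private final Map<K, V> cache = new ConcurrentHashMap<>();
    private final Function<K, V> loader; // stands in for a database lookup

    ReadThroughCache(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        // Atomically loads the value on a miss; later calls hit the cache
        return cache.computeIfAbsent(key, loader);
    }

    static int demo() {
        AtomicInteger databaseHits = new AtomicInteger();
        ReadThroughCache<String, String> users = new ReadThroughCache<>(id -> {
            databaseHits.incrementAndGet(); // count simulated database queries
            return "user-" + id;
        });

        users.get("42"); // miss: goes to the simulated database
        users.get("42"); // hit: served from memory
        users.get("7");  // miss: a different key
        return databaseHits.get();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints 2
    }
}
```

A real deployment also has to decide on eviction and invalidation, which this sketch leaves out entirely.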

Of course, we’ve barely scratched the surface of what is available to us in our pursuit of a highly concurrent application. It’s important to note that our requirements, more than the available software, should guide us toward an appropriate design. Some of these options may be suitable, while others may not.

And, let’s not forget that there are many more options available that may be better suited for our requirements.

7. Conclusion

In this article, we discussed the basics of concurrent programming. We looked at some of the fundamental aspects of concurrency and the problems it can lead to. Further, we went through some of the design patterns that can help us avoid the typical problems in concurrent programming.

Finally, we went through some of the frameworks, libraries, and software available to us for building a highly concurrent, end-to-end application.