1. Overview

1.概述

In this tutorial, we’re going to learn about the LRU cache and take a look at an implementation in Java.

在本教程中,我们将学习LRU缓存,并看一下Java中的实现。

2. LRU Cache

2.LRU缓存

The Least Recently Used (LRU) cache is a cache eviction algorithm that organizes elements in order of use. In LRU, as the name suggests, the element that hasn’t been used for the longest time will be evicted from the cache.

最近使用最少的缓存(LRU)是一种按使用顺序组织元素的缓存驱逐算法。在LRU中,顾名思义,最长时间没有被使用的元素将被从缓存中驱逐出去。

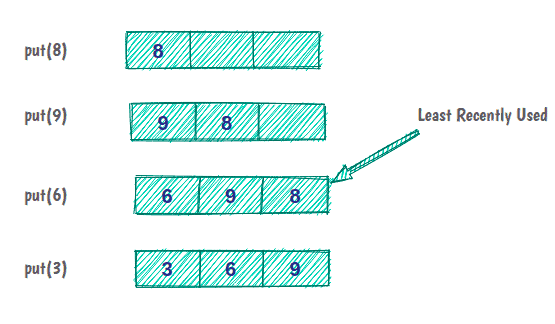

For example, if we have a cache with a capacity of three items:

例如,如果我们有一个容量为三个项目的缓存。

Initially, the cache is empty, and we put element 8 in the cache. Elements 9 and 6 are cached as before. But now, the cache capacity is full, and to put the next element, we have to evict the least recently used element in the cache.

最初,缓存是空的,我们把元素8放入缓存。元素9和6像以前一样被缓存起来。但是现在,缓存的容量已经满了,为了放入下一个元素,我们必须驱逐缓存中最近使用最少的元素。

Before we implement the LRU cache in Java, it’s good to know some aspects of the cache:

在我们用Java实现LRU缓存之前,最好先了解缓存的一些方面。

- All operations should run in order of O(1)

- The cache has a limited size

- It’s mandatory that all cache operations support concurrency

- If the cache is full, adding a new item must invoke the LRU strategy

2.1. Structure of an LRU Cache

2.1.LRU缓存的结构

Now, let’s think about a question that will help us in designing the cache.

现在,让我们思考一个问题,这将有助于我们设计缓存。

How can we design a data structure that could do operations like reading, sorting (temporal sorting), and deleting elements in constant time?

我们如何设计一种数据结构,可以在恒定的时间内完成读取、排序(时间排序)和删除元素等操作?

It seems that to find the answer to this question, we need to think deeply about what has been said about LRU cache and its features:

看来要找到这个问题的答案,我们需要深入思考关于LRU缓存的说法和它的特点。

- In practice, LRU cache is a kind of Queue — if an element is reaccessed, it goes to the end of the eviction order

- This queue will have a specific capacity as the cache has a limited size. Whenever a new element is brought in, it is added at the head of the queue. When eviction happens, it happens from the tail of the queue.

- Hitting data in the cache must be done in constant time, which isn’t possible in Queue! But, it is possible with Java’s HashMap data structure

- Removal of the least recently used element must be done in constant time, which means for the implementation of Queue, we’ll use DoublyLinkedList instead of SingleLinkedList or an array

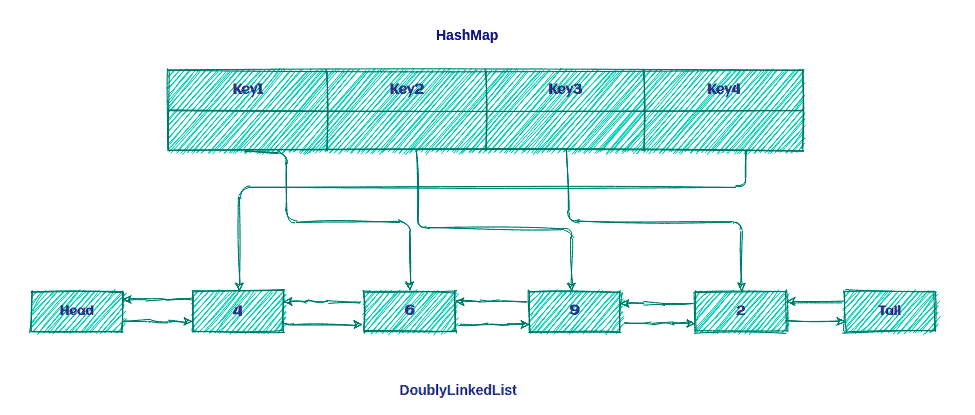

So, the LRU cache is nothing but a combination of the DoublyLinkedList and the HashMap as shown below:

因此,LRU缓存只不过是DoublyLinkedList和HashMap的组合,如下所示:。

The idea is to keep the keys on the Map for quick access to data within the Queue.

我们的想法是将键保存在Map上,以便快速访问Queue中的数据。

2.2. LRU Algorithm

2.2.LRU算法

The LRU algorithm is pretty easy! If the key is present in HashMap, it’s a cache hit; else, it’s a cache miss.

LRU算法是非常简单的!如果键存在于HashMap中,它是一个缓存命中;否则,它是一个缓存错过。

We’ll follow two steps after a cache miss occurs:

在发生缓存缺失后,我们将遵循两个步骤。

- Add a new element in front of the list.

- Add a new entry in HashMap and refer to the head of the list.

And, we’ll do two steps after a cache hit:

而且,我们将在缓存命中后做两个步骤。

- Remove the hit element and add it in front of the list.

- Update HashMap with a new reference to the front of the list.

Now, it’s time to see how we can implement LRU cache in Java!

现在,是时候看看我们如何在Java中实现LRU缓存了。

3. Implementation in Java

3.用Java实现

First, we’ll define our Cache interface:

首先,我们将定义我们的Cache接口。

public interface Cache<K, V> {

boolean set(K key, V value);

Optional<V> get(K key);

int size();

boolean isEmpty();

void clear();

}Now, we’ll define the LRUCache class that represents our cache:

现在,我们将定义LRUCache类,代表我们的缓存。

public class LRUCache<K, V> implements Cache<K, V> {

private int size;

private Map<K, LinkedListNode<CacheElement<K,V>>> linkedListNodeMap;

private DoublyLinkedList<CacheElement<K,V>> doublyLinkedList;

public LRUCache(int size) {

this.size = size;

this.linkedListNodeMap = new HashMap<>(maxSize);

this.doublyLinkedList = new DoublyLinkedList<>();

}

// rest of the implementation

}We can create an instance of the LRUCache with a specific size. In this implementation, we use HashMap collection for storing all references to LinkedListNode.

我们可以创建一个具有特定大小的LRUCache的实例。在这个实现中,我们使用HashMap集合来存储所有对LinkedListNode的引用。

Now, let’s discuss operations on our LRUCache.

现在,让我们讨论对我们的LRUCache的操作。

3.1. Put Operation

3.1.放置操作

The first one is the put method:

第一个是put方法。

public boolean put(K key, V value) {

CacheElement<K, V> item = new CacheElement<K, V>(key, value);

LinkedListNode<CacheElement<K, V>> newNode;

if (this.linkedListNodeMap.containsKey(key)) {

LinkedListNode<CacheElement<K, V>> node = this.linkedListNodeMap.get(key);

newNode = doublyLinkedList.updateAndMoveToFront(node, item);

} else {

if (this.size() >= this.size) {

this.evictElement();

}

newNode = this.doublyLinkedList.add(item);

}

if(newNode.isEmpty()) {

return false;

}

this.linkedListNodeMap.put(key, newNode);

return true;

}First, we find the key in the linkedListNodeMap that stores all keys/references. If the key exists, a cache hit happened, and it’s ready to retrieve CacheElement from the DoublyLinkedList and move it to the front.

首先,我们在存储所有键/引用的linkedListNodeMap中找到键。如果该键存在,就说明发生了缓存命中,并准备从DoublyLinkedList中检索CacheElement,并将其移到前面.。

After that, we update the linkedListNodeMap with a new reference and move it to the front of the list:

之后,我们用一个新的引用更新linkedListNodeMap,并将其移到列表的前面。

public LinkedListNode<T> updateAndMoveToFront(LinkedListNode<T> node, T newValue) {

if (node.isEmpty() || (this != (node.getListReference()))) {

return dummyNode;

}

detach(node);

add(newValue);

return head;

}First, we check that the node is not empty. Also, the reference of the node must be the same as the list. After that, we detach the node from the list and add newValue to the list.

首先,我们检查该节点是否为空。同时,节点的引用必须与列表相同。之后,我们从列表中删除节点并将newValue添加到列表中。

But if the key doesn’t exist, a cache miss happened, and we have to put a new key into the linkedListNodeMap. Before we can do that, we check the list size. If the list is full, we have to evict the least recently used element from the list.

但是如果这个键不存在,就发生了缓存缺失,我们必须把一个新的键放到linkedListNodeMap中。在这样做之前,我们要检查列表的大小。如果列表已满,我们必须从列表中剔除最近使用最少的元素。

3.2. Get Operation

3.2 获取操作

Let’s take a look at our get operation:

让我们看一下我们的get操作。

public Optional<V> get(K key) {

LinkedListNode<CacheElement<K, V>> linkedListNode = this.linkedListNodeMap.get(key);

if(linkedListNode != null && !linkedListNode.isEmpty()) {

linkedListNodeMap.put(key, this.doublyLinkedList.moveToFront(linkedListNode));

return Optional.of(linkedListNode.getElement().getValue());

}

return Optional.empty();

}As we can see above, this operation is straightforward. First, we get the node from the linkedListNodeMap and, after that, check that it’s not null or empty.

正如我们在上面看到的,这个操作很简单。首先,我们从linkedListNodeMap中获取节点,之后,检查它是否为空或空。

The rest of the operation is the same as before, with just one difference on the moveToFront method:

其余的操作与之前一样,只是在moveToFront方法上有一个区别。

public LinkedListNode<T> moveToFront(LinkedListNode<T> node) {

return node.isEmpty() ? dummyNode : updateAndMoveToFront(node, node.getElement());

}Now, let’s create some tests to verify that our cache works fine:

现在,让我们创建一些测试来验证我们的缓存工作是否正常。

@Test

public void addSomeDataToCache_WhenGetData_ThenIsEqualWithCacheElement(){

LRUCache<String,String> lruCache = new LRUCache<>(3);

lruCache.put("1","test1");

lruCache.put("2","test2");

lruCache.put("3","test3");

assertEquals("test1",lruCache.get("1").get());

assertEquals("test2",lruCache.get("2").get());

assertEquals("test3",lruCache.get("3").get());

}

Now, let’s test the eviction policy:

现在,让我们测试一下驱逐政策。

@Test

public void addDataToCacheToTheNumberOfSize_WhenAddOneMoreData_ThenLeastRecentlyDataWillEvict(){

LRUCache<String,String> lruCache = new LRUCache<>(3);

lruCache.put("1","test1");

lruCache.put("2","test2");

lruCache.put("3","test3");

lruCache.put("4","test4");

assertFalse(lruCache.get("1").isPresent());

}4. Dealing With Concurrency

4.处理并发问题

So far, we assumed that our cache was just used in a single-threaded environment.

到目前为止,我们假设我们的缓存只是在单线程环境下使用。

To make this container thread-safe, we need to synchronize all public methods. Let’s add a ReentrantReadWriteLock and ConcurrentHashMap into the previous implementation:

为了使这个容器成为线程安全的,我们需要同步所有的公共方法。让我们把ReentrantReadWriteLock和ConcurrentHashMap加入先前的实现中。

public class LRUCache<K, V> implements Cache<K, V> {

private int size;

private final Map<K, LinkedListNode<CacheElement<K,V>>> linkedListNodeMap;

private final DoublyLinkedList<CacheElement<K,V>> doublyLinkedList;

private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

public LRUCache(int size) {

this.size = size;

this.linkedListNodeMap = new ConcurrentHashMap<>(size);

this.doublyLinkedList = new DoublyLinkedList<>();

}

// ...

}We prefer to use a reentrant read/write lock rather than declaring methods as synchronized because it gives us more flexibility in deciding when to use a lock on reading and writing.

我们更喜欢使用可重入的读写锁,而不是将方法声明为synchronized,因为它让我们在决定何时使用读写锁时有更大的灵活性。。

4.1. writeLock

4.1.writeLock

Now, let’s add a call to writeLock in our put method:

现在,让我们在put方法中添加对writeLock的调用。

public boolean put(K key, V value) {

this.lock.writeLock().lock();

try {

//..

} finally {

this.lock.writeLock().unlock();

}

}When we use writeLock on the resource, only the thread holding the lock can write to or read from the resource. So, all other threads that are either trying to read or write on the resource will have to wait until the current lock holder releases it.

当我们在资源上使用writeLock时,只有持有该锁的线程可以向该资源写或从该资源读。因此,所有其他试图在该资源上读或写的线程将不得不等待,直到当前的锁持有者释放它。

This is very important to prevent a deadlock. If any of the operations inside the try block fails, we still release the lock before exiting the function with a finally block at the end of the method.

这对于防止deadlock非常重要。如果try块内的任何操作失败,我们仍然会在方法结束时用finally块退出函数前释放锁。

One of the other operations that needs writeLock is evictElement, which we used in the put method:

其他需要writeLock的操作之一是evictElement,我们在put方法中使用它。

private boolean evictElement() {

this.lock.writeLock().lock();

try {

//...

} finally {

this.lock.writeLock().unlock();

}

}4.2. readLock

4.2.读锁

And now it’s time to add a readLock call to the get method:

现在是时候给get方法添加一个readLock调用了。

public Optional<V> get(K key) {

this.lock.readLock().lock();

try {

//...

} finally {

this.lock.readLock().unlock();

}

}

It seems exactly what we have done with the put method. The only difference is that we use a readLock instead of writeLock. So, this distinction between the read and write locks allows us to read the cache in parallel while it’s not being updated.

这似乎与我们在put方法中所做的完全一样。唯一的区别是,我们使用了一个readLock而不是writeLock。所以,这种读锁和写锁的区别使得我们可以在缓存没有被更新的情况下并行地读取缓存。

Now, let’s test our cache in a concurrent environment:

现在,让我们在一个并发的环境中测试我们的缓存。

@Test

public void runMultiThreadTask_WhenPutDataInConcurrentToCache_ThenNoDataLost() throws Exception {

final int size = 50;

final ExecutorService executorService = Executors.newFixedThreadPool(5);

Cache<Integer, String> cache = new LRUCache<>(size);

CountDownLatch countDownLatch = new CountDownLatch(size);

try {

IntStream.range(0, size).<Runnable>mapToObj(key -> () -> {

cache.put(key, "value" + key);

countDownLatch.countDown();

}).forEach(executorService::submit);

countDownLatch.await();

} finally {

executorService.shutdown();

}

assertEquals(cache.size(), size);

IntStream.range(0, size).forEach(i -> assertEquals("value" + i,cache.get(i).get()));

}5. Conclusion

5.总结

In this tutorial, we learned what exactly an LRU cache is, including some of its most common features. Then, we saw one way to implement an LRU cache in Java and explored some of the most common operations.

在本教程中,我们了解到LRU缓存到底是什么,包括它的一些最常见的特征。 然后,我们看到了一种在Java中实现LRU缓存的方法,并探索了一些最常见的操作。

Finally, we covered concurrency in action using the lock mechanism.

最后,我们介绍了使用锁机制的并发性行动。

As usual, all the examples used in this article are available over on GitHub.

像往常一样,本文中使用的所有例子都可以在GitHub上找到。