1. Overview

1.概述

In this tutorial, we’ll venture into the realm of data modeling for event-driven architecture using Apache Kafka.

在本教程中,我们将冒险进入使用Apache Kafka为事件驱动架构建立数据模型的领域。

2. Setup

2.设置

A Kafka cluster consists of multiple Kafka brokers that are registered with a Zookeeper cluster. To keep things simple, we’ll use ready-made Docker images and docker-compose configurations published by Confluent.

一个Kafka集群由多个在Zookeeper集群中注册的Kafka代理机组成。为了保持简单,我们将使用现成的Docker镜像和docker-compose配置由Confluent发布。

First, let’s download the docker-compose.yml for a 3-node Kafka cluster:

首先,让我们下载一个3节点Kafka集群的docker-compose.yml。

$ BASE_URL="https://raw.githubusercontent.com/confluentinc/cp-docker-images/5.3.3-post/examples/kafka-cluster"

$ curl -Os "$BASE_URL"/docker-compose.ymlNext, let’s spin-up the Zookeeper and Kafka broker nodes:

接下来,我们来启动Zookeeper和Kafka代理节点。

$ docker-compose up -dFinally, we can verify that all the Kafka brokers are up:

最后,我们可以验证所有的Kafka经纪商是否已经启动。

$ docker-compose logs kafka-1 kafka-2 kafka-3 | grep started

kafka-1_1 | [2020-12-27 10:15:03,783] INFO [KafkaServer id=1] started (kafka.server.KafkaServer)

kafka-2_1 | [2020-12-27 10:15:04,134] INFO [KafkaServer id=2] started (kafka.server.KafkaServer)

kafka-3_1 | [2020-12-27 10:15:03,853] INFO [KafkaServer id=3] started (kafka.server.KafkaServer)3. Event Basics

3.活动基础知识

Before we take up the task of data modeling for event-driven systems, we need to understand a few concepts such as events, event-stream, producer-consumer, and topic.

在我们开始为事件驱动系统进行数据建模之前,我们需要了解一些概念,如事件、事件流、生产者-消费者和主题。

3.1. Event

3.1.事件

An event in the Kafka-world is an information log of something that happened in the domain-world. It does it by recording the information as a key-value pair message along with few other attributes such as the timestamp, meta information, and headers.

Kafka世界中的事件是域世界中发生的事情的信息记录。它通过将信息记录为键值对消息,以及其他一些属性,如时间戳、元信息和头文件。

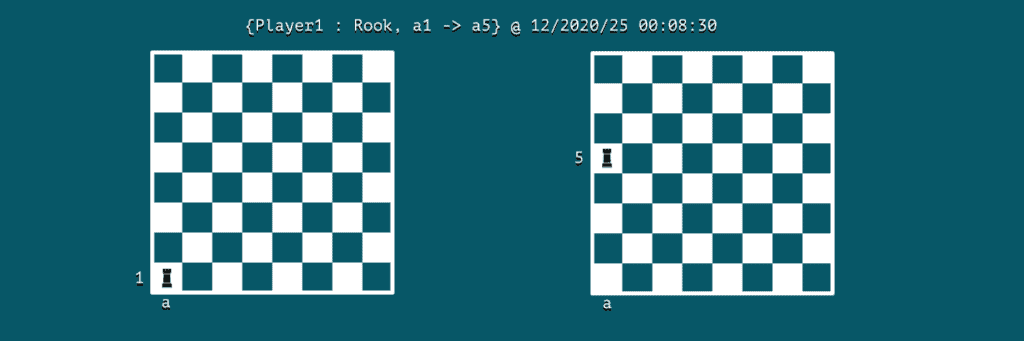

Let’s assume that we’re modeling a game of chess; then an event could be a move:

让我们假设我们在为一盘棋建模;那么,一个事件可以是一个步骤。

We can notice that event holds the key information of the actor, action, and time of its occurrence. In this case, Player1 is the actor, and the action is moving of rook from cell a1 to a5 at 12/2020/25 00:08:30.

我们可以注意到,事件持有行为者、行动和发生时间的关键信息。在本例中,Player1是行为者,行动是在12/2020/25 00:08:30将车从a1移到a5。

3.2. Message Stream

3.2 信息流

Apache Kafka is a stream processing system that captures events as a message stream. In our game of chess, we can think of the event stream as a log of moves played by the players.

Apache Kafka是一个流处理系统,它将事件捕捉为消息流。在我们的国际象棋游戏中,我们可以把事件流看作是棋手们下棋的记录。

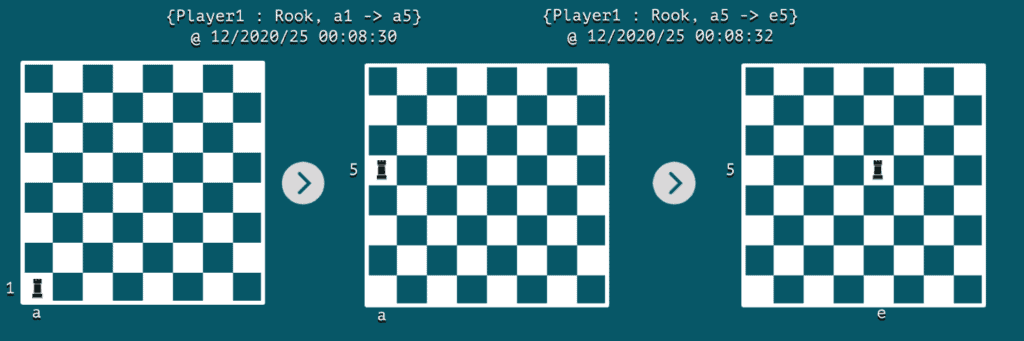

At the occurrence of each event, a snapshot of the board would represent its state. It’s usually common to store the latest static state of an object using a traditional table schema.

在每个事件发生时,棋盘的快照将代表其状态。通常情况下,使用传统的表模式来存储一个对象的最新静态状态是很常见的。

On the other hand, the event stream can help us capture the dynamic change between two consecutive states in the form of events. If we play a series of these immutable events, we can transition from one state to another. Such is the relationship between an event stream and a traditional table, often known as stream table duality.

另一方面,事件流可以帮助我们以事件的形式捕捉两个连续状态之间的动态变化。如果我们播放一系列这些不可改变的事件,我们就可以从一个状态过渡到另一个状态。这就是事件流和传统表格之间的关系,通常被称为流表二重性。

Let’s visualize an event stream on the chessboard with just two consecutive events:

让我们想象一下棋盘上的事件流,只有两个连续的事件。

4. Topics

4.主题

In this section, we’ll learn how to categorize messages routed through Apache Kafka.

在本节中,我们将学习如何对通过Apache Kafka路由的消息进行分类。

4.1. Categorization

4.1.归类

In a messaging system such as Apache Kafka, anything that produces the event is commonly called a producer. While the ones reading and consuming those messages are called consumers.

在Apache Kafka这样的消息传递系统中,任何产生事件的东西通常被称为生产者。而阅读和消费这些消息的,则被称为消费者。

In a real-world scenario, each producer can generate events of different types, so it’d be a lot of wasted effort by the consumers if we expect them to filter the messages relevant to them and ignore the rest.

在现实世界中,每个生产者都可以产生不同类型的事件,所以如果我们期望消费者过滤与他们相关的信息而忽略其他的信息,那就会浪费很多精力了。

To solve this basic problem, Apache Kafka uses topics that are essentially groups of messages that belong together. As a result, consumers can be more productive while consuming the event messages.

为了解决这个基本问题,Apache Kafka使用了主题,这些主题本质上是属于一起的消息组。因此,消费者在消费事件消息时可以提高工作效率。

In our chessboard example, a topic could be used to group all the moves under the chess-moves topic:

在我们的棋盘例子中,可以用一个主题将所有的棋子归入chess-moves主题。

$ docker run \

--net=host --rm confluentinc/cp-kafka:5.0.0 \

kafka-topics --create --topic chess-moves \

--if-not-exists \

--partitions 1 --replication-factor 1 \

--zookeeper localhost:32181

Created topic "chess-moves".4.2. Producer-Consumer

4.2.生产者-消费者

Now, let’s see how producers and consumers use Kafka’s topics for message processing. We’ll use kafka-console-producer and kafka-console-consumer utilities shipped with Kafka distribution to demonstrate this.

现在,让我们看看生产者和消费者如何使用Kafka的主题进行消息处理。我们将使用kafka-console-producer和kafka-console-consumer随Kafka发行版提供的工具来演示。

Let’s spin up a container named kafka-producer wherein we’ll invoke the producer utility:

让我们建立一个名为kafka-producer的容器,在其中我们将调用producer工具。

$ docker run \

--net=host \

--name=kafka-producer \

-it --rm \

confluentinc/cp-kafka:5.0.0 /bin/bash

# kafka-console-producer --broker-list localhost:19092,localhost:29092,localhost:39092 \

--topic chess-moves \

--property parse.key=true --property key.separator=:

Simultaneously, we can spin up a container named kafka-consumer wherein we’ll invoke the consumer utility:

同时,我们可以启动一个名为kafka-consumer的容器,在这里我们将调用消费者工具。

$ docker run \

--net=host \

--name=kafka-consumer \

-it --rm \

confluentinc/cp-kafka:5.0.0 /bin/bash

# kafka-console-consumer --bootstrap-server localhost:19092,localhost:29092,localhost:39092 \

--topic chess-moves --from-beginning \

--property print.key=true --property print.value=true --property key.separator=:

Now, let’s record some game moves through the producer:

现在,让我们通过制作人记录一些游戏动作。

>{Player1 : Rook, a1->a5}As the consumer is active, it’ll pick up this message with key as Player1:

由于消费者是活跃的,它将接收到这个键为Player1的消息。

{Player1 : Rook, a1->a5}5. Partitions

5.分数

Next, let’s see how we can create further categorization of messages using partitions and boost the performance of the entire system.

接下来,让我们看看如何利用分区对信息进行进一步分类,并提升整个系统的性能。

5.1. Concurrency

5.1.并发

We can divide a topic into multiple partitions and invoke multiple consumers to consume messages from different partitions. By enabling such concurrency behavior, the overall performance of the system can thereby be improved.

我们可以将一个主题划分为多个分区,并调用多个消费者来消费来自不同分区的消息。通过启用这种并发行为,系统的整体性能可以因此得到改善。

By default, Kafka versions that support –bootstrap-server option during the creation of a topic would create a single partition of a topic unless explicitly specified at the time of topic creation. However, for a pre-existing topic, we can increase the number of partitions. Let’s set partition number to 3 for the chess-moves topic:

默认情况下,在创建主题时支持-bootstrap-server选项的Kafka版本将创建一个主题的单个分区,除非在创建主题时明确指定。然而,对于一个预先存在的主题,我们可以增加分区的数量。让我们为chess-moves主题设置分区数量为3。

$ docker run \

--net=host \

--rm confluentinc/cp-kafka:5.0.0 \

bash -c "kafka-topics --alter --zookeeper localhost:32181 --topic chess-moves --partitions 3"

WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected

Adding partitions succeeded!5.2. Partition Key

5.2.分区钥匙

Within a topic, Kafka processes messages across multiple partitions using a partition key. At one end, producers use it implicitly to route a message to one of the partitions. On the other end, each consumer can read messages from a specific partition.

在一个主题中,Kafka使用一个分区键在多个分区中处理消息。在一端,生产者使用它隐含地将消息路由到其中一个分区。在另一端,每个消费者可以从一个特定的分区读取消息。

By default, the producer would generate a hash value of the key followed by a modulus with the number of partitions. Then, it’d send the message to the partition identified by the calculated identifier.

默认情况下,生产者将生成一个密钥的哈希值,然后是一个带有分区数量的模数。然后,它将把消息发送到由计算出的标识符确定的分区。

Let’s create new event messages with the kafka-console-producer utility, but this time we’ll record moves by both the players:

让我们用kafka-console-producer工具创建新的事件消息,但这一次我们将记录两个球员的动作。

# kafka-console-producer --broker-list localhost:19092,localhost:29092,localhost:39092 \

--topic chess-moves \

--property parse.key=true --property key.separator=:

>{Player1: Rook, a1 -> a5}

>{Player2: Bishop, g3 -> h4}

>{Player1: Rook, a5 -> e5}

>{Player2: Bishop, h4 -> g3}Now, we can have two consumers, one reading from partition-1 and the other reading from partition-2:

现在,我们可以有两个消费者,一个从分区1读取,另一个从分区2读取。

# kafka-console-consumer --bootstrap-server localhost:19092,localhost:29092,localhost:39092 \

--topic chess-moves --from-beginning \

--property print.key=true --property print.value=true \

--property key.separator=: \

--partition 1

{Player2: Bishop, g3 -> h4}

{Player2: Bishop, h4 -> g3}We can see that all moves by Player2 are being recorded into partition-1. In the same manner, we can check that moves by Player1 are being recorded into partition-0.

我们可以看到,玩家2的所有棋步都被记录在分区1中。以同样的方式,我们可以检查玩家1的棋步是否被记录在分区-0中。

6. Scaling

6.缩放

How we conceptualize topics and partitions is crucial to horizontal scaling. On the one hand, a topic is more of a pre-defined categorization of data. On the other hand, a partition is a dynamic categorization of data that happens on the fly.

我们如何对主题和分区进行概念化,对横向扩展至关重要。一方面,主题更像是对数据的预定义分类。另一方面,分区是对数据的动态分类,它是在动态中发生的。

Further, there’re practical limits on how many partitions we can configure within a topic. That’s because each partition is mapped to a directory in the file system of the broker node. When we increase the number of partitions, we also increase the number of open file handles on our operating system.

此外,我们在一个主题中能配置多少个分区是有实际限制的。这是因为每个分区都被映射到经纪人节点的文件系统中的一个目录。当我们增加分区的数量时,我们也会增加我们操作系统上的开放文件句柄的数量。

As a rule of thumb, experts at Confluent recommend limiting the number of partitions per broker to 100 x b x r, where b is the number of brokers in a Kafka cluster, and r is the replication factor.

作为一条经验法则,Confluent 的专家建议将每个经纪人的分区数量限制在100 x b x r,其中b是Kafka集群中的经纪人数量,而r是复制因子。

7. Conclusion

7.结语

In this article, we used a Docker environment to cover the fundamentals of data modeling for a system that uses Apache Kafka for message processing. With a basic understanding of events, topics, and partitions, we’re now ready to conceptualize event streaming and further use this architecture paradigm.

在这篇文章中,我们使用Docker环境来介绍一个使用Apache Kafka进行消息处理的系统的数据建模的基本原理。有了对事件、主题和分区的基本了解,我们现在已经准备好将事件流概念化,并进一步使用这种架构范式。