1. Overview

In this article, we’ll learn how Apache Cassandra partitions and distributes data among the nodes in a cluster. Additionally, we’ll see how Cassandra stores replicated data on multiple nodes to achieve high availability.

2. Node

In Cassandra, a single node runs on a server or virtual machine (VM). Cassandra is written in Java, which means a running instance of Cassandra is a Java Virtual Machine (JVM) process. A Cassandra node can run in the cloud or in an on-premises data center. For data storage, the recommendation is to use local or direct-attached storage rather than a storage area network (SAN).

Each Cassandra node is responsible for the portion of the data it stores; together, the nodes form a distributed hash table. Cassandra provides a tool called nodetool to manage and check the status of a node or a cluster.

3. Token Ring

Cassandra maps each node in a cluster to one or more tokens on a continuous ring. By default (with Murmur3Partitioner), a token is a signed 64-bit integer, so the possible range of tokens is from -2^63 to 2^63 - 1. Cassandra uses a consistent hashing technique to map nodes to one or more tokens.

3.1. Single Token per Node

With a single token per node, each node is responsible for the range of token values that are greater than the previous node’s token and less than or equal to its own assigned token. To complete the ring, the first node (the one with the lowest token value) is responsible for the wrap-around range: values greater than the token of the last node (the one with the highest token value) and less than or equal to its own token.
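
The ownership rule above can be sketched in Python. This is a minimal illustration, not Cassandra’s code: the node names and the small integer tokens in `RING` are hypothetical stand-ins for real 64-bit tokens, and `owned_ranges` and `owner` are helpers invented for this example.

```python
from bisect import bisect_left

# Hypothetical single-token ring: node name -> assigned token.
# Small integers stand in for real 64-bit tokens.
RING = {"A": -600, "B": -200, "C": 200, "D": 600}


def owned_ranges(ring):
    """Return (node, lower, upper) tuples; each node owns tokens t with lower < t <= upper."""
    ordered = sorted(ring.items(), key=lambda item: item[1])
    ranges = []
    for i, (node, token) in enumerate(ordered):
        # For i == 0, ordered[-1] is the highest token, so the first range wraps around.
        prev_token = ordered[i - 1][1]
        ranges.append((node, prev_token, token))
    return ranges


def owner(ring, token):
    """Return the node owning `token`: the first node token >= `token`, wrapping to the lowest."""
    ordered = sorted(ring.items(), key=lambda item: item[1])
    tokens = [t for _, t in ordered]
    index = bisect_left(tokens, token)
    return ordered[index % len(ordered)][0]


print(owned_ranges(RING))  # [('A', 600, -600), ('B', -600, -200), ('C', -200, 200), ('D', 200, 600)]
print(owner(RING, -200))   # B: a token equal to a node's token belongs to that node
print(owner(RING, 601))    # A: past the highest token, ownership wraps to the first node
```

The tuple `('A', 600, -600)` is the wrap-around range: A owns everything above 600 and everything up to and including -600.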

On a write, Cassandra uses a hash function to calculate a token value from the partition key. It then compares this token value with each node’s token range to identify the node the data belongs to.
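
The write path can be sketched the same way. Note the assumption: Cassandra’s default partitioner uses MurmurHash3, which isn’t in Python’s standard library, so the hypothetical `token_for` helper substitutes a 64-bit BLAKE2b digest purely as a stand-in hash. Only the shape of the computation (key, then signed 64-bit token, then owning node) mirrors the text; the tokens it produces won’t match Cassandra’s.

```python
import hashlib
from bisect import bisect_left


def token_for(partition_key: str) -> int:
    """Map a partition key to a signed 64-bit token using a stand-in hash (not Murmur3)."""
    digest = hashlib.blake2b(partition_key.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big", signed=True)  # always in [-2**63, 2**63 - 1]


def node_for(ring: dict, partition_key: str) -> str:
    """Return the node whose token range contains the key's token."""
    ordered = sorted(ring.items(), key=lambda item: item[1])
    tokens = [t for _, t in ordered]
    index = bisect_left(tokens, token_for(partition_key))
    return ordered[index % len(ordered)][0]  # wrap past the highest token to the first node


# Hypothetical four-node ring with evenly spaced tokens across the 64-bit range.
RING = {"A": -2**62, "B": 0, "C": 2**62, "D": 2**63 - 1}

key = "user:42"
print(token_for(key), "->", node_for(RING, key))
```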

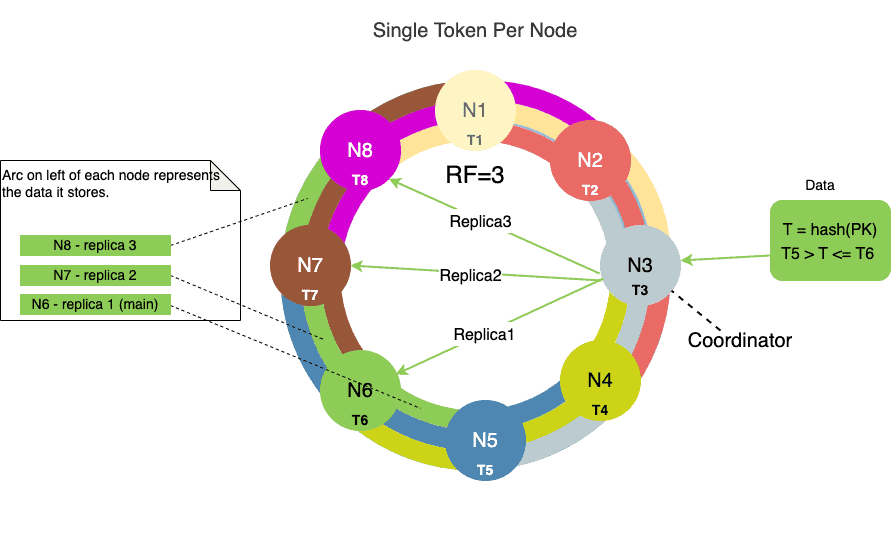

Let’s look at an example. The following diagram shows an eight-node cluster with a replication factor (RF) of 3 and a single token assigned to each node:

With RF=3, each piece of data is stored on three nodes, so every token range has three replicas.

The innermost ring in the above diagram represents the main data token range.

The drawback of a single token per node is the token imbalance created when nodes are added to or removed from the cluster.

Assume that, in a hash ring of N nodes, each node owns an equal number of tokens, say 100. Further, assume there’s an existing node X that owns the token range 100 to 200. Now, if we add a new node Y whose token falls inside X’s range, say 150, then Y takes over half of the tokens from node X.

That is, node Y will now own the token range 100 to 150, and node X will keep 151 to 200. The data for Y’s half has to be moved from node X to node Y, so the whole cost of the addition falls on a single node. Meanwhile, X and Y each own only 50 tokens while every other node still owns 100, so the ring is no longer balanced.
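
A toy simulation makes the imbalance concrete. It assumes a miniature ring of tokens 1 to 400 with four hypothetical nodes (W, X, Z, V) owning 100 tokens each, then adds Y with token 150 inside X’s range. Counting owners before and after shows that every moved token comes from X alone.

```python
from collections import Counter

RING_SIZE = 400  # toy ring with tokens 1..400 instead of the real 64-bit range


def owner(ring, token):
    """Return the node owning `token`: the first node token >= `token`, wrapping around."""
    at_or_after = [(t, node) for node, t in ring.items() if t >= token]
    if at_or_after:
        return min(at_or_after)[1]
    return min((t, node) for node, t in ring.items())[1]  # wrap to the lowest token


def ownership(ring):
    """Map every token on the toy ring to its owning node."""
    return {token: owner(ring, token) for token in range(1, RING_SIZE + 1)}


before_ring = {"W": 100, "X": 200, "Z": 300, "V": 400}
after_ring = {**before_ring, "Y": 150}  # Y's token falls inside X's range

before = ownership(before_ring)
after = ownership(after_ring)

# For each token whose owner changed, record the node that gave it up.
donors = Counter(before[t] for t in before if before[t] != after[t])

print(Counter(before.values()))  # every node owns 100 tokens
print(Counter(after.values()))   # X and Y own 50 each; W, Z, V still own 100
print(donors)                    # Counter({'X': 50}): all moved data comes from X
```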

3.2. Multiple Tokens per Node (vnodes)

Since Cassandra 2.0, virtual nodes (vnodes) have been enabled by default. With vnodes, Cassandra breaks the token range into smaller ranges and assigns several of those smaller ranges to each node in the cluster. The number of tokens per node is set by the num_tokens property in the cassandra.yaml file. It defaulted to 256 before Cassandra 4.0, which means 256 vnodes within a node; Cassandra 4.0 lowered the default to 16.

This configuration makes it easier to maintain a Cassandra cluster made of machines with varying compute resources. We can assign more vnodes to machines with more compute capacity by setting the num_tokens property to a larger value, and, for machines with less compute capacity, set num_tokens to a lower number.
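
To see why num_tokens works as a capacity weight, here’s a small simulation. It assumes purely random token selection, which is a simplification (newer Cassandra versions can use a token allocation algorithm instead), and the node names `big` and `small` are hypothetical. With 64 tokens versus 16, the larger node should end up owning roughly four times as much of the ring.

```python
import random

TOKEN_MIN, TOKEN_MAX = -2**63, 2**63 - 1
RING_SIZE = 2**64


def assign_random_tokens(num_tokens_by_node, seed=0):
    """Give each node `num_tokens` random tokens; returns a sorted list of (token, node)."""
    rng = random.Random(seed)
    return sorted(
        (rng.randint(TOKEN_MIN, TOKEN_MAX), node)
        for node, num_tokens in num_tokens_by_node.items()
        for _ in range(num_tokens)
    )


def ownership_share(ring):
    """Fraction of the ring each node owns; each token owns the arc ending at it."""
    shares = {}
    for i, (token, node) in enumerate(ring):
        prev_token = ring[i - 1][0]  # for i == 0 this wraps to the highest token
        arc = (token - prev_token) % RING_SIZE
        shares[node] = shares.get(node, 0.0) + arc / RING_SIZE
    return shares


shares = ownership_share(assign_random_tokens({"big": 64, "small": 16}))
print(shares)  # "big" owns roughly 80% of the ring, "small" roughly 20%
```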

When using vnodes, we don’t have to pre-calculate the token values, because Cassandra assigns them to each node automatically. Without vnodes, we have to pre-calculate the token value for each node and set it in the initial_token property.
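
Without vnodes (num_tokens set to 1), a common approach is to space the initial tokens evenly across the partitioner’s range. The sketch below assumes Murmur3Partitioner’s range of -2^63 to 2^63 - 1; the node labels it prints are hypothetical, and each value would go into that node’s initial_token setting.

```python
def murmur3_initial_tokens(node_count: int) -> list:
    """Evenly spaced tokens across Murmur3Partitioner's range [-2**63, 2**63 - 1]."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    step = 2**64 // node_count
    return [i * step - 2**63 for i in range(node_count)]


for i, token in enumerate(murmur3_initial_tokens(4), start=1):
    print(f"node{i}: initial_token: {token}")
# node1: initial_token: -9223372036854775808
# node2: initial_token: -4611686018427387904
# node3: initial_token: 0
# node4: initial_token: 4611686018427387904
```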

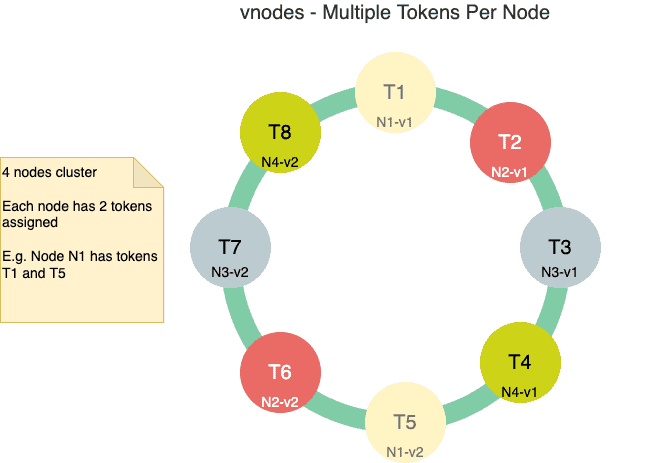

Below is a diagram showing a four-node cluster with two tokens assigned to each node:

The advantage of this setup is that when a new node is added or an existing node is removed, data is redistributed to or from multiple nodes instead of a single neighbor.

When we add a new node to the hash ring, that node will own multiple tokens. These token ranges were previously owned by several different nodes, so after the addition, data moves to the new node from multiple nodes.
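
A quick simulation illustrates this. It assumes random token selection and four hypothetical existing nodes (A to D) with 16 tokens each. Each of the new node’s 16 random tokens splits some existing range, and the set of nodes owning those split ranges is the set that streams data to the new node.

```python
import random
from bisect import bisect_left

TOKEN_MIN, TOKEN_MAX = -2**63, 2**63 - 1


def build_ring(nodes, tokens_per_node, rng):
    """Sorted list of (token, node) pairs with random tokens for every node."""
    return sorted(
        (rng.randint(TOKEN_MIN, TOKEN_MAX), node)
        for node in nodes
        for _ in range(tokens_per_node)
    )


def donors_for_new_node(ring, tokens_per_node, rng):
    """Return the existing nodes whose ranges are split by the new node's random tokens."""
    tokens = [t for t, _ in ring]
    donors = set()
    for _ in range(tokens_per_node):
        new_token = rng.randint(TOKEN_MIN, TOKEN_MAX)
        # The new token cuts into the range owned by the first existing token >= it (wrapping).
        index = bisect_left(tokens, new_token) % len(tokens)
        donors.add(ring[index][1])
    return donors


rng = random.Random(7)
ring = build_ring(["A", "B", "C", "D"], 16, rng)
donors = donors_for_new_node(ring, 16, rng)
print(sorted(donors))  # typically several of A to D, not a single neighbor
```

Passing 1 as `tokens_per_node` to `donors_for_new_node` reproduces the single-token case, where exactly one existing node is the donor.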

4. Partitioners

The partitioner determines how data is distributed across the nodes in a Cassandra cluster. Basically, a partitioner is a hash function that computes a token value by hashing the partition key of a row. Cassandra then uses this token to decide where on the ring the row is stored.

Cassandra provides different partitioners that use different algorithms to calculate the hash value of the partition key. We can provide and configure our own partitioner by implementing the IPartitioner interface.

The Murmur3Partitioner has been the default partitioner since Cassandra 1.2. It uses the MurmurHash function to create a 64-bit hash of the partition key.

Before Murmur3Partitioner, RandomPartitioner was the default. It uses the MD5 algorithm to hash partition keys.
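
For comparison, here is a sketch of an MD5-based token in the style of RandomPartitioner. The conversion step is an assumption on our part: the hypothetical `md5_token` helper reads the 128-bit digest as a signed big-endian integer and takes its absolute value, which yields tokens in roughly the 0 to 2^127 range rather than Murmur3’s signed 64-bit range. Treat it as an illustration, not a byte-for-byte reimplementation.

```python
import hashlib


def md5_token(partition_key: bytes) -> int:
    """MD5-based token modeled on RandomPartitioner (assumed conversion, see lead-in)."""
    digest = hashlib.md5(partition_key).digest()  # 16 bytes = 128 bits
    return abs(int.from_bytes(digest, "big", signed=True))  # in [0, 2**127]


print(md5_token(b"user:42"))
```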

Both Murmur3Partitioner and RandomPartitioner use tokens to distribute data evenly across the ring. The main difference between the two is that RandomPartitioner uses a cryptographic hash function, whereas Murmur3Partitioner uses a non-cryptographic one. Cryptographic hash functions are generally slower to compute, and partitioning doesn’t need their security properties, so Murmur3Partitioner hashes partition keys faster.

5. Replication Strategies

Cassandra achieves high availability and fault tolerance by replicating data across the nodes in a cluster. The replication strategy determines where replicas are stored in the cluster.

Each node in the cluster owns not only the data within its assigned token range but also replicas of data from other ranges. If the primary node for a range goes down, a replica node can respond to queries for that range of data.

Cassandra replicates data asynchronously in the background. The replication factor (RF) determines how many nodes in the cluster hold a copy of the same data. For example, with RF=3, three nodes in the ring have copies of the same data. We’ve already seen this replication in the diagram in the Token Ring section.

Cassandra provides pluggable replication strategies by allowing different implementations of the AbstractReplicationStrategy class. Out of the box, Cassandra provides a couple of implementations, SimpleStrategy and NetworkTopologyStrategy.

Once the partitioner calculates the token and places the data in the main node, the SimpleStrategy places the replicas in the consecutive nodes around the ring.
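
SimpleStrategy’s placement can be sketched as a clockwise walk. This is a simplified model, not Cassandra’s implementation: `RING` is a hypothetical vnode ring in which node A appears twice, so the walk skips tokens belonging to a node that already holds a replica.

```python
from bisect import bisect_left


def simple_strategy_replicas(ring, token, rf):
    """Walk clockwise from the token's primary owner, collecting rf distinct nodes.

    ring: sorted list of (token, node) pairs; with vnodes a node appears many times.
    """
    tokens = [t for t, _ in ring]
    start = bisect_left(tokens, token)  # primary owner: first token >= the data's token
    replicas = []
    for step in range(len(ring)):
        node = ring[(start + step) % len(ring)][1]
        if node not in replicas:  # skip further vnodes of a node already chosen
            replicas.append(node)
            if len(replicas) == rf:
                break
    return replicas


RING = [(-300, "A"), (-100, "B"), (0, "A"), (100, "C"), (300, "D")]
print(simple_strategy_replicas(RING, 50, rf=3))  # ['C', 'D', 'A']
```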

On the other hand, the NetworkTopologyStrategy allows us to specify a different replication factor for each data center. Within a data center, it allocates replicas to nodes in different racks in order to maximize availability.
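
The rack-aware idea can be sketched as follows. This is a simplified model of NetworkTopologyStrategy with a hypothetical ring and topology: for each data center, it walks the ring clockwise, prefers nodes in racks it hasn’t used yet, and falls back to skipped same-rack nodes only when there aren’t enough distinct racks. The real implementation handles more edge cases.

```python
from bisect import bisect_left


def nts_replicas(ring, topology, token, rf_by_dc):
    """Simplified rack-aware replica placement.

    ring: sorted (token, node) pairs; topology: node -> (data_center, rack);
    rf_by_dc: replication factor per data center, e.g. {"dc1": 3, "dc2": 2}.
    """
    tokens = [t for t, _ in ring]
    start = bisect_left(tokens, token)
    walk = [ring[(start + i) % len(ring)][1] for i in range(len(ring))]

    replicas = []
    for dc, rf in rf_by_dc.items():
        chosen, skipped, racks_used = [], [], set()
        for node in walk:
            node_dc, rack = topology[node]
            if node_dc != dc or node in chosen or node in skipped:
                continue
            if rack in racks_used:
                skipped.append(node)  # same rack as an earlier replica: fallback only
                continue
            chosen.append(node)
            racks_used.add(rack)
            if len(chosen) == rf:
                break
        # Too few distinct racks: fill the remaining slots with skipped nodes in ring order.
        chosen.extend(skipped[: rf - len(chosen)])
        replicas.extend(chosen)
    return replicas


TOPOLOGY = {
    "n1": ("dc1", "r1"), "n2": ("dc1", "r1"), "n3": ("dc1", "r2"), "n4": ("dc1", "r3"),
    "n5": ("dc2", "r1"), "n6": ("dc2", "r2"),
}
RING = [(0, "n1"), (10, "n2"), (20, "n5"), (30, "n3"), (40, "n6"), (50, "n4")]

print(nts_replicas(RING, TOPOLOGY, -5, {"dc1": 2}))  # ['n1', 'n3']: n2 shares rack r1 with n1
print(nts_replicas(RING, TOPOLOGY, 5, {"dc1": 3, "dc2": 2}))  # ['n2', 'n3', 'n4', 'n5', 'n6']
```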

The NetworkTopologyStrategy is the recommended strategy for keyspaces in production deployments, regardless of whether it’s a single data center or multiple data center deployment.

The replication strategy is defined independently for each keyspace and is a required option when creating a keyspace.

6. Consistency Level

In a distributed system, consistency means that a read returns the same data we just wrote. Cassandra provides tunable consistency levels for both read and write queries. In other words, it lets us make a fine-grained trade-off between availability and consistency.

A higher consistency level means more replicas need to respond to a read or write query. As a result, a read is more likely to return the data that was written a moment ago.

For read queries, the consistency level specifies how many replicas need to respond before returning the data to the client. For write queries, the consistency level specifies how many replicas need to acknowledge the write before sending a successful message to the client.
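
The replica-count arithmetic behind these levels can be sketched for a single data center. QUORUM means a majority of the RF replicas; when the number of replicas read plus the number written exceeds RF, the two sets must overlap, so every read contacts at least one replica that acknowledged the latest write. The helper names are hypothetical; ONE and QUORUM in the comments are Cassandra consistency level names.

```python
def quorum(rf: int) -> int:
    """Replicas required for QUORUM in a single data center: a strict majority of rf."""
    return rf // 2 + 1


def read_overlaps_write(read_replicas: int, write_replicas: int, rf: int) -> bool:
    """True when every read set must intersect every write set (R + W > RF)."""
    return read_replicas + write_replicas > rf


RF = 3
print(quorum(RF))                                       # 2
print(read_overlaps_write(quorum(RF), quorum(RF), RF))  # True: QUORUM reads + QUORUM writes
print(read_overlaps_write(1, 1, RF))                    # False: ONE + ONE can miss a write
```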

Since Cassandra is an eventually consistent system, a write is still sent to all RF replicas; the replicas beyond those the consistency level waits for apply it asynchronously in the background.

7. Snitch

The snitch provides information about the network topology so that Cassandra can route the read/write request efficiently. The snitch determines which node belongs to which data center and rack. It also determines the relative host proximity of the nodes in a cluster.

The replication strategies use this information to place the replicas into appropriate nodes in clusters within a single data center or multiple data centers.

8. Conclusion

In this article, we learned about general concepts like nodes, rings, and tokens in Cassandra. On top of these, we learned how Cassandra partitions and replicates the data among the nodes in a cluster. These concepts describe how Cassandra uses different strategies for efficient writing and reading of the data.

These are a few architecture components that make Cassandra highly scalable, available, durable, and manageable.