1. Introduction

Imagine having to manually process payslips, calculate interest, and generate bills. The work would quickly become tedious, error-prone, and never-ending!

In this tutorial, we’ll take a look at Java Batch Processing (JSR 352), a part of the Jakarta EE platform, and a great specification for automating tasks like these. It offers application developers a model for developing robust batch processing systems so that they can focus on the business logic.

2. Maven Dependencies

Since JSR 352 is just a specification, we’ll need to include both its API and an implementation, such as jberet:

    <dependency>
        <groupId>javax.batch</groupId>
        <artifactId>javax.batch-api</artifactId>
        <version>1.0.1</version>
    </dependency>
    <dependency>
        <groupId>org.jberet</groupId>
        <artifactId>jberet-core</artifactId>
        <version>1.0.2.Final</version>
    </dependency>
    <dependency>
        <groupId>org.jberet</groupId>
        <artifactId>jberet-support</artifactId>
        <version>1.0.2.Final</version>
    </dependency>
    <dependency>
        <groupId>org.jberet</groupId>
        <artifactId>jberet-se</artifactId>
        <version>1.0.2.Final</version>
    </dependency>

We’ll also add an in-memory database so we can look at some more realistic scenarios.

3. Key Concepts

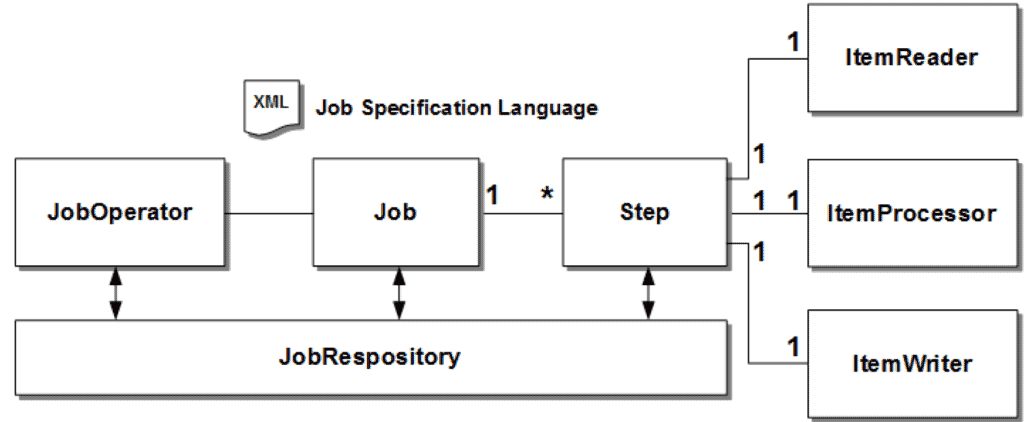

JSR 352 introduces a few concepts, which we can look at this way:

JSR 352引入了一些概念,我们可以这样来看待这些概念。

Let’s first define each piece:

让我们首先定义每一块。

- Starting on the left, we have the JobOperator. It manages all aspects of job processing such as starting, stopping, and restarting

- Next, we have the Job. A job is a logical collection of steps; it encapsulates an entire batch process

- A job will contain between 1 and n Steps. Each step is an independent, sequential unit of work. A step is composed of reading input, processing that input, and writing output

- And last, but not least, we have the JobRepository which stores the running information of the jobs. It helps to keep track of jobs, their state, and their completion results

Steps have a bit more detail than this, so let’s take a look at that next. First, we’ll look at Chunk steps and then at Batchlets.

4. Creating a Chunk

As stated earlier, a chunk is a kind of step. We’ll often use a chunk to express an operation that is performed over and over, say over a set of items. It’s kind of like intermediate operations from Java Streams.

When describing a chunk, we need to express where to take items from, how to process them, and where to send them afterward.

4.1. Reading Items

To read items, we’ll need to implement ItemReader.

In this case, we’ll create a reader that will simply emit the numbers 1 through 10:

    import java.io.Serializable;
    import javax.batch.api.chunk.AbstractItemReader;
    import javax.batch.runtime.context.JobContext;
    import javax.inject.Inject;
    import javax.inject.Named;

    @Named
    public class SimpleChunkItemReader extends AbstractItemReader {
        private Integer[] tokens;
        private Integer count;

        @Inject
        JobContext jobContext;

        @Override
        public Integer readItem() throws Exception {
            // Returning null tells the runtime that the input is exhausted
            if (count >= tokens.length) {
                return null;
            }
            // Expose the current position to other artifacts in the job
            jobContext.setTransientUserData(count);
            return tokens[count++];
        }

        @Override
        public void open(Serializable checkpoint) throws Exception {
            tokens = new Integer[] { 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 };
            count = 0;
        }
    }

Now, we’re just reading from the class’s internal state here. But, of course, readItem could pull from a database, from the file system, or some other external source.

Note that we are saving some of this internal state using JobContext#setTransientUserData() which will come in handy later on.

Also, note the checkpoint parameter. We’ll pick that up again, too.

4.2. Processing Items

Of course, the reason we are chunking is that we want to perform some kind of operation on our items!

Any time we return null from an item processor, we drop that item from the batch.

So, let’s say here that we want to keep only the even numbers. We can use an ItemProcessor that rejects the odd ones by returning null:

    import javax.batch.api.chunk.ItemProcessor;
    import javax.inject.Named;

    @Named
    public class SimpleChunkItemProcessor implements ItemProcessor {
        @Override
        public Integer processItem(Object t) {
            Integer item = (Integer) t;
            // Keep even numbers; returning null drops the item from the chunk
            return item % 2 == 0 ? item : null;
        }
    }

processItem will get called once for each item that our ItemReader emits.

4.3. Writing Items

Finally, the job will invoke the ItemWriter so we can write our transformed items:

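    import java.util.ArrayList;
    import java.util.List;
    import javax.batch.api.chunk.AbstractItemWriter;
    import javax.inject.Named;

    @Named
    public class SimpleChunkWriter extends AbstractItemWriter {
        List<Integer> processed = new ArrayList<>();

        @Override
        public void writeItems(List<Object> items) throws Exception {
            // Called once per chunk with the items that survived processing
            items.stream().map(Integer.class::cast).forEach(processed::add);
        }
    }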

How long is items? In a moment, we’ll define a chunk’s size, which will determine the size of the list that is sent to writeItems.

4.4. Defining a Chunk in a Job

Now we put all this together in an XML file using JSL, the Job Specification Language. Note that we’ll list our reader, processor, and writer, as well as a chunk size:

    <job id="simpleChunk">
        <step id="firstChunkStep">
            <chunk item-count="3">
                <reader ref="simpleChunkItemReader"/>
                <processor ref="simpleChunkItemProcessor"/>
                <writer ref="simpleChunkWriter"/>
            </chunk>
        </step>
    </job>

The chunk size determines how often progress in the chunk is committed to the job repository, which matters for guaranteeing completion should part of the system fail.
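To make the commit interval concrete, here is a minimal plain-Java sketch of an item-count chunk loop. It is only a simplified model, not the JSR 352 API or jberet’s implementation: ChunkLoopSketch, its Result counters, and the run method are hypothetical names, and the sketch assumes that every chunk ends with one commit, including the last, shorter one.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Function;

// Hypothetical model of a chunk step; it does not use the javax.batch API.
final class ChunkLoopSketch {

    // Simple counters, loosely named after the metrics a batch runtime reports.
    static final class Result {
        int readCount;
        int filterCount;
        int writeCount;
        int commitCount;
        final List<List<Integer>> writes = new ArrayList<>();
    }

    static Result run(Iterator<Integer> reader, Function<Integer, Integer> processor, int itemCount) {
        Result result = new Result();
        boolean endOfInput = false;
        while (!endOfInput) {
            List<Integer> buffer = new ArrayList<>();
            int readInChunk = 0;
            // Read and process up to itemCount items for this chunk.
            while (readInChunk < itemCount) {
                if (!reader.hasNext()) {
                    endOfInput = true;
                    break;
                }
                Integer item = reader.next();
                readInChunk++;
                result.readCount++;
                Integer processed = processor.apply(item);
                if (processed == null) {
                    result.filterCount++; // a null result filters the item out
                } else {
                    buffer.add(processed);
                }
            }
            // Hand the surviving items to the "writer", then commit the chunk.
            if (!buffer.isEmpty()) {
                result.writes.add(buffer);
                result.writeCount += buffer.size();
            }
            result.commitCount++;
        }
        return result;
    }
}
```

Under these assumptions, feeding it the numbers 1 through 10, the even-number filter, and an item count of 3 gives four commits: three full chunks plus a final partial one.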

We’ll need to place this file in META-INF/batch-jobs for .jar files and in WEB-INF/classes/META-INF/batch-jobs for .war files.

We gave our job the id “simpleChunk”, so let’s try that in a unit test.

Now, jobs are executed asynchronously, which makes them tricky to test. In the sample, make sure to check out our BatchTestHelper which polls and waits until the job is complete:

    @Test
    public void givenChunk_thenBatch_completesWithSuccess() throws Exception {
        JobOperator jobOperator = BatchRuntime.getJobOperator();
        Long executionId = jobOperator.start("simpleChunk", new Properties());
        JobExecution jobExecution = jobOperator.getJobExecution(executionId);
        // Block until the asynchronous job reaches a final state
        jobExecution = BatchTestHelper.keepTestAlive(jobExecution);
        assertEquals(BatchStatus.COMPLETED, jobExecution.getBatchStatus());
    }
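The helper’s source isn’t shown here, so the following is only a guess at the general idea of polling for a final state, written in plain Java. PollingSketch and awaitTerminalStatus are hypothetical names, the status is supplied as a plain string rather than read from a JobExecution, and the set of terminal names is an assumption based on the BatchStatus values.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;
import java.util.function.Supplier;

// Hypothetical polling helper; not the tutorial's BatchTestHelper.
final class PollingSketch {

    // Status names treated as final in this sketch.
    private static final Set<String> TERMINAL =
            new HashSet<>(Arrays.asList("COMPLETED", "FAILED", "STOPPED", "ABANDONED"));

    // Polls at most maxPolls times and returns the last status seen.
    static String awaitTerminalStatus(Supplier<String> statusSupplier, int maxPolls, long sleepMillis) {
        String status = statusSupplier.get();
        for (int poll = 1; poll < maxPolls && !TERMINAL.contains(status); poll++) {
            try {
                Thread.sleep(sleepMillis);
            } catch (InterruptedException e) {
                // Preserve the interrupt flag and give up waiting.
                Thread.currentThread().interrupt();
                return status;
            }
            status = statusSupplier.get();
        }
        return status;
    }
}
```

Bounding the number of polls keeps a test from hanging forever if the job never finishes; the caller can then assert on whatever status was last observed.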

So that’s what chunks are. Now, let’s take a look at batchlets.

5. Creating a Batchlet

Not everything fits neatly into an iterative model. For example, we may have a task that we simply need to invoke once, run to completion, and return an exit status.

The contract for a batchlet is quite simple:

    import javax.batch.api.AbstractBatchlet;
    import javax.batch.runtime.BatchStatus;
    import javax.inject.Named;

    @Named
    public class SimpleBatchLet extends AbstractBatchlet {
        @Override
        public String process() throws Exception {
            // The returned string becomes the exit status of the step
            return BatchStatus.COMPLETED.toString();
        }
    }

As is the JSL:

    <job id="simpleBatchLet">
        <step id="firstStep">
            <batchlet ref="simpleBatchLet"/>
        </step>
    </job>

And we can test it using the same approach as before:

    @Test
    public void givenBatchlet_thenBatch_completeWithSuccess() throws Exception {
        JobOperator jobOperator = BatchRuntime.getJobOperator();
        Long executionId = jobOperator.start("simpleBatchLet", new Properties());
        JobExecution jobExecution = jobOperator.getJobExecution(executionId);
        jobExecution = BatchTestHelper.keepTestAlive(jobExecution);
        assertEquals(BatchStatus.COMPLETED, jobExecution.getBatchStatus());
    }

So, we’ve looked at a couple of different ways to implement steps.

Now let’s look at mechanisms for marking and guaranteeing progress.

6. Custom Checkpoint

Failures are bound to happen in the middle of a job. Should we just start over the whole thing, or can we somehow start where we left off?

As the name suggests, checkpoints help us to periodically set a bookmark in case of failure.

By default, the end of chunk processing is a natural checkpoint.

However, we can customize it with our own CheckpointAlgorithm:

    import javax.batch.api.chunk.AbstractCheckpointAlgorithm;
    import javax.batch.runtime.context.JobContext;
    import javax.inject.Inject;
    import javax.inject.Named;

    @Named
    public class CustomCheckPoint extends AbstractCheckpointAlgorithm {
        @Inject
        JobContext jobContext;

        @Override
        public boolean isReadyToCheckpoint() throws Exception {
            // Read back the counter that the item reader stored in the job context
            int counterRead = (Integer) jobContext.getTransientUserData();
            return counterRead % 5 == 0;
        }
    }

Remember the count that we placed in transient data earlier? Here, we can pull it out with JobContext#getTransientUserData to state that we want to commit on every 5th number processed.

Without this, a commit would happen at the end of each chunk, or in our case, every 3rd number.

And then, we match that up with the checkpoint-algorithm directive in our XML underneath our chunk:

    <job id="customCheckPoint">
        <step id="firstChunkStep">
            <chunk item-count="3" checkpoint-policy="custom">
                <reader ref="simpleChunkItemReader"/>
                <processor ref="simpleChunkItemProcessor"/>
                <writer ref="simpleChunkWriter"/>
                <checkpoint-algorithm ref="customCheckPoint"/>
            </chunk>
        </step>
    </job>

Let’s test the code, again noting that some of the boilerplate steps are hidden away in BatchTestHelper:

    @Test
    public void givenChunk_whenCustomCheckPoint_thenCommitCountIsThree() throws Exception {
        // ... start job and wait for completion
        jobOperator.getStepExecutions(executionId)
          .stream()
          .map(BatchTestHelper::getCommitCount)
          .forEach(count -> assertEquals(3L, count.longValue()));
        assertEquals(BatchStatus.COMPLETED, jobExecution.getBatchStatus());
    }

So, we might be expecting a commit count of 2, since we have ten items and configured the commits to be every 5th item. But the framework does one more final commit at the end to ensure everything has been processed, which is what brings us up to 3.
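The commit arithmetic can be sketched in plain Java. This is a simplified model rather than the real runtime: CheckpointSketch and countCommits are hypothetical names, the rule is handed the number of items read so far (the real CustomCheckPoint reads a value from JobContext instead), and the model assumes one extra commit once the reader is exhausted.

```java
import java.util.Iterator;
import java.util.function.IntPredicate;

// Hypothetical model of checkpoint counting; it does not use the javax.batch API.
final class CheckpointSketch {

    // Counts commits for the given input under the given checkpoint rule.
    static int countCommits(Iterator<Integer> reader, IntPredicate readyAfterItemsRead) {
        int itemsRead = 0;
        int commits = 0;
        while (reader.hasNext()) {
            reader.next();
            itemsRead++;
            // Ask the rule after every item, as a custom checkpoint policy would be asked.
            if (readyAfterItemsRead.test(itemsRead)) {
                commits++;
            }
        }
        // Assumed final commit once the input is exhausted.
        commits++;
        return commits;
    }
}
```

In this model, ten items with an "every 5th item" rule give two regular commits plus the final one, while an "every 3rd item" rule gives three plus one.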

Next, let’s look at how to handle errors.

7. Exception Handling

By default, the job operator will mark our job as FAILED in case of an exception.

Let’s change our item reader to make sure that it fails:

    @Override
    public Integer readItem() throws Exception {
        // tokens is assumed here to be a java.util.StringTokenizer, unlike the Integer[] used earlier
        if (tokens.hasMoreTokens()) {
            String tempTokenize = tokens.nextToken();
            throw new RuntimeException();
        }
        return null;
    }

And then test:

    @Test
    public void whenChunkError_thenBatch_CompletesWithFailed() throws Exception {
        // ... start job and wait for completion
        assertEquals(BatchStatus.FAILED, jobExecution.getBatchStatus());
    }

But we can override this default behavior in a number of ways:

- skip-limit specifies the number of exceptions this step will ignore before failing

- retry-limit specifies the number of times the job operator should retry the step before failing

- skippable-exception-classes specifies a set of exceptions that chunk processing will ignore
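The interplay between the skip limit and the skippable exception set can be modelled in a few lines of plain Java. This is a rough sketch under stated assumptions, not the runtime’s actual logic: SkipSketch, Outcome, and run are hypothetical names, each read is represented as a Callable, and retries are not modelled at all.

```java
import java.util.List;
import java.util.Set;
import java.util.concurrent.Callable;

// Hypothetical model of skip handling; it does not use the javax.batch API.
final class SkipSketch {

    static final class Outcome {
        final String status;
        final int skipCount;

        Outcome(String status, int skipCount) {
            this.status = status;
            this.skipCount = skipCount;
        }
    }

    static Outcome run(List<Callable<Integer>> reads,
                       Set<Class<? extends Exception>> skippable,
                       int skipLimit) {
        int skipped = 0;
        for (Callable<Integer> read : reads) {
            try {
                read.call();
            } catch (Exception e) {
                // An exception is skippable if it is an instance of any listed class.
                boolean canSkip = skippable.stream().anyMatch(type -> type.isInstance(e));
                if (!canSkip || skipped >= skipLimit) {
                    return new Outcome("FAILED", skipped);
                }
                skipped++;
            }
        }
        return new Outcome("COMPLETED", skipped);
    }
}
```

With a limit of 3 and RuntimeException listed as skippable, a single failing read is counted and ignored; with a limit of 0, or with an unlisted exception type, the same input ends as FAILED.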

So, we can edit our job so that it ignores RuntimeException, as well as a few others, just for illustration:

    <job id="simpleErrorSkipChunk">
        <step id="errorStep">
            <chunk checkpoint-policy="item" item-count="3" skip-limit="3" retry-limit="3">
                <reader ref="myItemReader"/>
                <processor ref="myItemProcessor"/>
                <writer ref="myItemWriter"/>
                <skippable-exception-classes>
                    <include class="java.lang.RuntimeException"/>
                    <include class="java.lang.UnsupportedOperationException"/>
                </skippable-exception-classes>
                <retryable-exception-classes>
                    <include class="java.lang.IllegalArgumentException"/>
                    <include class="java.lang.UnsupportedOperationException"/>
                </retryable-exception-classes>
            </chunk>
        </step>
    </job>

And now our test will pass:

    @Test
    public void givenChunkError_thenErrorSkipped_CompletesWithSuccess() throws Exception {
        // ... start job and wait for completion
        jobOperator.getStepExecutions(executionId).stream()
          .map(BatchTestHelper::getProcessSkipCount)
          .forEach(skipCount -> assertEquals(1L, skipCount.longValue()));
        assertEquals(BatchStatus.COMPLETED, jobExecution.getBatchStatus());
    }

8. Executing Multiple Steps

We mentioned earlier that a job can have any number of steps, so let’s see that now.

8.1. Firing the Next Step

By default, each step is the last step in the job.

To execute the next step within a batch job, we have to specify it explicitly with the next attribute in the step definition:

    <job id="simpleJobSequence">
        <step id="firstChunkStepStep1" next="firstBatchStepStep2">
            <chunk item-count="3">
                <reader ref="simpleChunkItemReader"/>
                <processor ref="simpleChunkItemProcessor"/>
                <writer ref="simpleChunkWriter"/>
            </chunk>
        </step>
        <step id="firstBatchStepStep2">
            <batchlet ref="simpleBatchLet"/>
        </step>
    </job>

If we forget this attribute, then the next step in sequence will not get executed.

And we can see what this looks like in the API:

    @Test
    public void givenTwoSteps_thenBatch_CompleteWithSuccess() throws Exception {
        // ... start job and wait for completion
        assertEquals(2, jobOperator.getStepExecutions(executionId).size());
        assertEquals(BatchStatus.COMPLETED, jobExecution.getBatchStatus());
    }

8.2. Flows

A sequence of steps can also be encapsulated into a flow. When the flow is finished, it is the entire flow that transitions to the next execution element. Also, elements inside the flow can’t transition to elements outside the flow.

We can, say, execute two steps inside a flow, and then have that flow transition to an isolated step:

    <job id="flowJobSequence">
        <flow id="flow1" next="firstBatchStepStep3">
            <step id="firstChunkStepStep1" next="firstBatchStepStep2">
                <chunk item-count="3">
                    <reader ref="simpleChunkItemReader" />
                    <processor ref="simpleChunkItemProcessor" />
                    <writer ref="simpleChunkWriter" />
                </chunk>
            </step>
            <step id="firstBatchStepStep2">
                <batchlet ref="simpleBatchLet" />
            </step>
        </flow>
        <step id="firstBatchStepStep3">
            <batchlet ref="simpleBatchLet" />
        </step>
    </job>

And we can still see each step execution independently:

    @Test
    public void givenFlow_thenBatch_CompleteWithSuccess() throws Exception {
        // ... start job and wait for completion
        assertEquals(3, jobOperator.getStepExecutions(executionId).size());
        assertEquals(BatchStatus.COMPLETED, jobExecution.getBatchStatus());
    }

8.3. Decisions

We also have if/else support in the form of decisions. Decisions provide a customized way of determining a sequence among steps, flows, and splits.

Like a step, a decision uses transition elements such as next, which can direct or terminate job execution.

Let’s see how the job can be configured:

    <job id="decideJobSequence">
        <step id="firstBatchStepStep1" next="firstDecider">
            <batchlet ref="simpleBatchLet" />
        </step>
        <decision id="firstDecider" ref="deciderJobSequence">
            <next on="two" to="firstBatchStepStep2"/>
            <next on="three" to="firstBatchStepStep3"/>
        </decision>
        <step id="firstBatchStepStep2">
            <batchlet ref="simpleBatchLet" />
        </step>
        <step id="firstBatchStepStep3">
            <batchlet ref="simpleBatchLet" />
        </step>
    </job>

Any decision element needs to be configured with a class that implements Decider. Its job is to return a decision as a String.

Each next element inside a decision is like a case in a switch statement.
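The switch analogy can be sketched in plain Java as a lookup from the decision string to the id of the next step. This is an illustration only: DecisionSketch, next, and route are hypothetical names, the sketch matches the string exactly, and in a real job the string would come from the configured Decider rather than being passed in by hand.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical model of a decision element; it does not use the javax.batch API.
final class DecisionSketch {

    // Maps an "on" value to the id of the element to run next.
    private final Map<String, String> transitions = new LinkedHashMap<>();

    // Registers one transition, mirroring a single next element.
    DecisionSketch next(String on, String to) {
        transitions.put(on, to);
        return this;
    }

    // Returns the target for the decision, or empty when no transition matches.
    Optional<String> route(String decision) {
        return Optional.ofNullable(transitions.get(decision));
    }
}
```

For the job above, a decision of "three" would route to firstBatchStepStep3, while an unlisted value matches nothing in this sketch.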

8.4. Splits

Splits are handy since they allow us to execute flows concurrently:

    <job id="splitJobSequence">
        <split id="split1" next="splitJobSequenceStep3">
            <flow id="flow1">
                <step id="splitJobSequenceStep1">
                    <batchlet ref="simpleBatchLet" />
                </step>
            </flow>
            <flow id="flow2">
                <step id="splitJobSequenceStep2">
                    <batchlet ref="simpleBatchLet" />
                </step>
            </flow>
        </split>
        <step id="splitJobSequenceStep3">
            <batchlet ref="simpleBatchLet" />
        </step>
    </job>

Of course, this means that the order isn’t guaranteed.
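As a rough analogy in plain Java, a split behaves like submitting each flow to a thread pool and waiting for all of them before moving on. This is a sketch under that assumption, not how a batch runtime is implemented: SplitSketch and run are hypothetical names, and each flow is reduced to recording its step id.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical model of a split; it does not use the javax.batch API.
final class SplitSketch {

    // Runs two "flows" concurrently, then the follow-up step, and returns the execution order.
    static List<String> run() {
        List<String> executed = Collections.synchronizedList(new ArrayList<>());
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            List<Future<?>> flows = new ArrayList<>();
            flows.add(pool.submit(() -> { executed.add("splitJobSequenceStep1"); }));
            flows.add(pool.submit(() -> { executed.add("splitJobSequenceStep2"); }));
            // The split only transitions once every flow has finished.
            for (Future<?> flow : flows) {
                flow.get();
            }
            executed.add("splitJobSequenceStep3");
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for flows", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a flow failed", e);
        } finally {
            pool.shutdown();
        }
        return executed;
    }
}
```

The first two entries may appear in either order from run to run, but the third is always the step that follows the split.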

Let’s confirm that they still all get run. The flow steps will be performed in an arbitrary order, but the isolated step will always be last:

    @Test
    public void givenSplit_thenBatch_CompletesWithSuccess() throws Exception {
        // ... start job and wait for completion
        List<StepExecution> stepExecutions = jobOperator.getStepExecutions(executionId);

        assertEquals(3, stepExecutions.size());
        assertEquals("splitJobSequenceStep3", stepExecutions.get(2).getStepName());
        assertEquals(BatchStatus.COMPLETED, jobExecution.getBatchStatus());
    }

9. Partitioning a Job

In our Java code, we can also consume the batch properties that have been defined in our job.

They can be scoped at three levels – the job, the step, and the batch-artifact.

Let’s see some examples of how they are consumed.

When we want to consume the properties at job level:

    @Inject
    JobContext jobContext;
    ...
    jobProperties = jobContext.getProperties();
    ...

This can be consumed at a step level as well:

    @Inject
    StepContext stepContext;
    ...
    stepProperties = stepContext.getProperties();
    ...

When we want to consume the properties at batch-artifact level:

    @Inject
    @BatchProperty(name = "name")
    private String nameString;

This comes in handy with partitions.

With splits, we can run flows concurrently. But we can also partition a single step into n sets of items, or give each partition separate inputs, which is another way to spread the work across multiple threads.
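A loose plain-Java analogy is to run the same piece of work once per partition, each time with that partition’s own properties. The sketch below assumes exactly that and nothing more: PartitionSketch and run are hypothetical names, the "work" just echoes the name property, and no batch API is involved.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical model of a partitioned step; it does not use the javax.batch API.
final class PartitionSketch {

    // Runs one task per partition and returns the results in plan order.
    static List<String> run(List<Properties> plan) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, plan.size()));
        try {
            List<Future<String>> futures = new ArrayList<>();
            for (Properties partitionProps : plan) {
                // Each partition sees only its own properties.
                futures.add(pool.submit(() -> "processed by " + partitionProps.getProperty("name")));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : futures) {
                results.add(future.get());
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for partitions", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a partition failed", e);
        } finally {
            pool.shutdown();
        }
    }
}
```

The point of the analogy is that the code stays the same for every partition; only the injected property values differ.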

To tell each partition which segment of work it should do, we can combine properties with partitions:

    <job id="injectSimpleBatchLet">
        <properties>
            <property name="jobProp1" value="job-value1"/>
        </properties>
        <step id="firstStep">
            <properties>
                <property name="stepProp1" value="value1" />
            </properties>
            <batchlet ref="injectSimpleBatchLet">
                <properties>
                    <property name="name" value="#{partitionPlan['name']}" />
                </properties>
            </batchlet>
            <partition>
                <plan partitions="2">
                    <properties partition="0">
                        <property name="name" value="firstPartition" />
                    </properties>
                    <properties partition="1">
                        <property name="name" value="secondPartition" />
                    </properties>
                </plan>
            </partition>
        </step>
    </job>

10. Stop and Restart

That’s it for defining jobs. Now let’s talk for a minute about managing them.

We’ve already seen in our unit tests that we can get an instance of JobOperator from BatchRuntime:

    JobOperator jobOperator = BatchRuntime.getJobOperator();

And then, we can start the job:

    Long executionId = jobOperator.start("simpleBatchLet", new Properties());

However, we can also stop the job:

    jobOperator.stop(executionId);

And lastly, we can restart the job:

    executionId = jobOperator.restart(executionId, new Properties());

Let’s see how we can stop a running job:

    @Test
    public void givenBatchLetStarted_whenStopped_thenBatchStopped() throws Exception {
        JobOperator jobOperator = BatchRuntime.getJobOperator();
        Long executionId = jobOperator.start("simpleBatchLet", new Properties());
        JobExecution jobExecution = jobOperator.getJobExecution(executionId);
        jobOperator.stop(executionId);
        jobExecution = BatchTestHelper.keepTestStopped(jobExecution);
        assertEquals(BatchStatus.STOPPED, jobExecution.getBatchStatus());
    }

And if a batch is STOPPED, then we can restart it:

    @Test
    public void givenBatchLetStopped_whenRestarted_thenBatchCompletesSuccess() throws Exception {
        // ... start and stop the job
        assertEquals(BatchStatus.STOPPED, jobExecution.getBatchStatus());
        executionId = jobOperator.restart(jobExecution.getExecutionId(), new Properties());
        jobExecution = BatchTestHelper.keepTestAlive(jobOperator.getJobExecution(executionId));
        assertEquals(BatchStatus.COMPLETED, jobExecution.getBatchStatus());
    }

11. Fetching Jobs

When a batch job is submitted, the batch runtime creates an instance of JobExecution to track it.

To obtain the JobExecution for an execution id, we can use the JobOperator#getJobExecution(executionId) method.

And, StepExecution provides helpful information for tracking a step’s execution.

To obtain the StepExecutions for a job execution id, we can use the JobOperator#getStepExecutions(executionId) method.

And from that, we can get several metrics about the step via StepExecution#getMetrics:

    @Test
    public void givenChunk_whenJobStarts_thenStepsHaveMetrics() throws Exception {
        // ... start job and wait for completion
        assertTrue(jobOperator.getJobNames().contains("simpleChunk"));
        assertTrue(jobOperator.getParameters(executionId).isEmpty());
        StepExecution stepExecution = jobOperator.getStepExecutions(executionId).get(0);
        Map<Metric.MetricType, Long> metricTest = BatchTestHelper.getMetricsMap(stepExecution.getMetrics());
        assertEquals(10L, metricTest.get(Metric.MetricType.READ_COUNT).longValue());
        assertEquals(5L, metricTest.get(Metric.MetricType.FILTER_COUNT).longValue());
        assertEquals(4L, metricTest.get(Metric.MetricType.COMMIT_COUNT).longValue());
        assertEquals(5L, metricTest.get(Metric.MetricType.WRITE_COUNT).longValue());
        // ... and many more!
    }

12. Disadvantages

JSR 352 is powerful, though it is lacking in a number of areas:

- There seems to be a lack of readers and writers that can process other formats such as JSON

- There is no support for generics

- Partitioning only supports a single step

- The API does not offer anything to support scheduling (though J2EE has a separate scheduling module)

- Due to its asynchronous nature, testing can be a challenge

- The API is quite verbose

13. Conclusion

In this article, we looked at JSR 352 and learned about chunks, batchlets, splits, flows and much more. Yet, we’ve barely scratched the surface.

As always, the demo code can be found over on GitHub.