1. Overview

1.概述

In this article, we’ll see the significance of the load factor in Java’s HashMap and how it affects the map’s performance.

在这篇文章中,我们将看到Java的HashMap中负载因子的意义以及它是如何影响地图的性能的。

2. What Is HashMap?

2.什么是HashMap?

The HashMap class belongs to the Java Collection framework and provides a basic implementation of the Map interface. We can use it when we want to store data in terms of key-value pairs. These key-value pairs are called map entries and are represented by the Map.Entry class.

HashMap类属于Java集合框架,提供了Map接口的基本实现。当我们想以键值对的方式存储数据时,我们可以使用它。这些键值对被称为地图条目,由Map.Entry类表示。

3. HashMap Internals

3.HashMap内部程序

Before discussing load factor, let’s review a few terms:

在讨论负载率之前,让我们回顾一下几个术语。

-

- hashing

- capacity

- threshold

- rehashing

- collision

HashMap works on the principle of hashing — an algorithm to map object data to some representative integer value. The hashing function is applied to the key object to calculate the index of the bucket in order to store and retrieve any key-value pair.

HashMap的工作原理是散列–一种将对象数据映射到某些代表性整数值的算法。散列函数被应用于关键对象,以计算桶的索引,以便存储和检索任何键值对。

Capacity is the number of buckets in the HashMap. The initial capacity is the capacity at the time the Map is created. Finally, the default initial capacity of the HashMap is 16.

容量是HashMap中的桶的数量。初始容量是创建Map时的容量。最后,HashMap的默认初始容量为16。

As the number of elements in the HashMap increases, the capacity is expanded. The load factor is the measure that decides when to increase the capacity of the Map. The default load factor is 75% of the capacity.

随着HashMap中元素数量的增加,容量也会扩大。负载因子是决定何时增加Map容量的措施。默认负载因子是容量的75%。

The threshold of a HashMap is approximately the product of current capacity and load factor. Rehashing is the process of re-calculating the hash code of already stored entries. Simply put, when the number of entries in the hash table exceeds the threshold, the Map is rehashed so that it has approximately twice the number of buckets as before.

HashMap的阈值大约是当前容量和负载系数的乘积。重新洗牌是重新计算已存储条目的哈希代码的过程。简单地说,当哈希表中的条目数量超过阈值时,Map就会被重新洗牌,使其拥有大约两倍于之前的桶的数量。

A collision occurs when a hash function returns the same bucket location for two different keys.

当一个哈希函数为两个不同的键返回相同的桶位置时,就会发生碰撞。

Let’s create our HashMap:

让我们来创建我们的HashMap。

Map<String, String> mapWithDefaultParams = new HashMap<>();

mapWithDefaultParams.put("1", "one");

mapWithDefaultParams.put("2", "two");

mapWithDefaultParams.put("3", "three");

mapWithDefaultParams.put("4", "four");

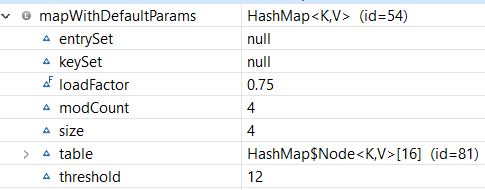

Here is the structure of our Map:

这里是我们的Map的结构。

As we see, our HashMap was created with the default initial capacity (16) and the default load factor (0.75). Also, the threshold is 16 * 0.75 = 12, which means that it will increase the capacity from 16 to 32 after the 12th entry (key-value-pair) is added.

我们看到,我们的HashMap是以默认的初始容量(16)和默认的负载因子(0.75)创建的。另外,阈值是16*0.75=12,这意味着在添加第12个条目(键值对)之后,它的容量将从16增加到32。

4. Custom Initial Capacity and Load Factor

4.定制初始容量和负载系数

In the previous section, we created our HashMap with a default constructor. In the following sections, we’ll see how to create a HashMap passing the initial capacity and load factor to the constructor.

在上一节中,我们用一个默认的构造函数创建了我们的HashMap。在下面的章节中,我们将看到如何创建一个HashMap,将初始容量和负载因子传递给构造函数。

4.1. With Initial Capacity

4.1.具有初始能力

First, let’s create a Map with the initial capacity:

首先,让我们用初始容量创建一个Map。

Map<String, String> mapWithInitialCapacity = new HashMap<>(5);

It will create an empty Map with the initial capacity (5) and the default load factor (0.75).

它将创建一个空的Map,具有初始容量(5)和默认负载系数(0.75)。

4.2. With Initial Capacity and Load Factor

4.2.带初始容量和负载系数

Similarly, we can create our Map using both initial capacity and load factor:

同样地,我们可以使用初始容量和负载系数来创建我们的Map。

Map<String, String> mapWithInitialCapacityAndLF = new HashMap<>(5, 0.5f);

Here, it will create an empty Map with an initial capacity of 5 and a load factor of 0.5.

这里,它将创建一个空的Map,初始容量为5,负载系数为0.5。

5. Performance

5.业绩

Although we have the flexibility to choose the initial capacity and the load factor, we need to pick them wisely. Both of them affect the performance of the Map. Let’s dig in into how these parameters are related to performance.

尽管我们可以灵活地选择初始容量和负载系数,但我们需要明智地选择它们。它们都会影响Map的性能。让我们深入了解这些参数与性能的关系。

5.1. Complexity

5.1.复杂性

As we know, HashMap internally uses hash code as a base for storing key-value pairs. If the hashCode() method is well-written, HashMap will distribute the items across all the buckets. Therefore, HashMap stores and retrieves entries in constant time O(1).

正如我们所知,HashMap内部使用哈希代码作为存储键值对的基础。如果hashCode()方法写得好,HashMap将把项目分布在所有的桶中。因此,HashMap在恒定时间内存储和检索条目O(1)。

However, the problem arises when the number of items is increased and the bucket size is fixed. It will have more items in each bucket and will disturb time complexity.

然而,当项目的数量增加而桶的大小固定时,问题就出现了。它在每个桶中会有更多的项目,并会干扰时间的复杂性。

The solution is that we can increase the number of buckets when the number of items is increased. We can then redistribute the items across all the buckets. In this way, we’ll be able to keep a constant number of items in each bucket and maintain the time complexity of O(1).

解决办法是,当项目数量增加时,我们可以增加桶的数量。然后我们可以在所有的桶中重新分配项目。这样,我们就能在每个桶中保持恒定的项目数量,并保持O(1)的时间复杂性。

Here, the load factor helps us to decide when to increase the number of buckets. With a lower load factor, there will be more free buckets and, hence, fewer chances of a collision. This will help us to achieve better performance for our Map. Hence, we need to keep the load factor low to achieve low time complexity.

在这里,负载系数帮助我们决定何时增加桶的数量。在较低的负载系数下,将有更多的空闲桶,因此,碰撞的机会就会减少。这将帮助我们的Map实现更好的性能。因此,我们需要保持低的负载因子,以实现低的时间复杂度。

A HashMap typically has a space complexity of O(n), where n is the number of entries. A higher value of load factor decreases the space overhead but increases the lookup cost.

HashMap的空间复杂度通常为O(n),其中n是条目的数量。较高的负载因子值会降低空间开销,但会增加查找成本。

5.2. Rehashing

5.2 重新洗牌

When the number of items in the Map crosses the threshold limit, the capacity of the Map is doubled. As discussed earlier, when capacity is increased, we need to equally distribute all the entries (including existing entries and new entries) across all buckets. Here, we need rehashing. That is, for each existing key-value pair, calculate the hash code again with increased capacity as a parameter.

当Map中的条目数量超过阈值限制时,Map的容量会增加一倍。如前所述,当容量增加时,我们需要将所有条目(包括现有条目和新条目)平均分配到所有桶中。这里,我们需要重新洗牌。也就是说,对于每个现有的键值对,以增加的容量为参数再次计算哈希代码。

Basically, when the load factor increases, the complexity increases. Rehashing is done to maintain a low load factor and low complexity for all the operations.

基本来说,当负载系数增加时,复杂性也会增加。重洗是为了保持所有操作的低负载率和低复杂性。。

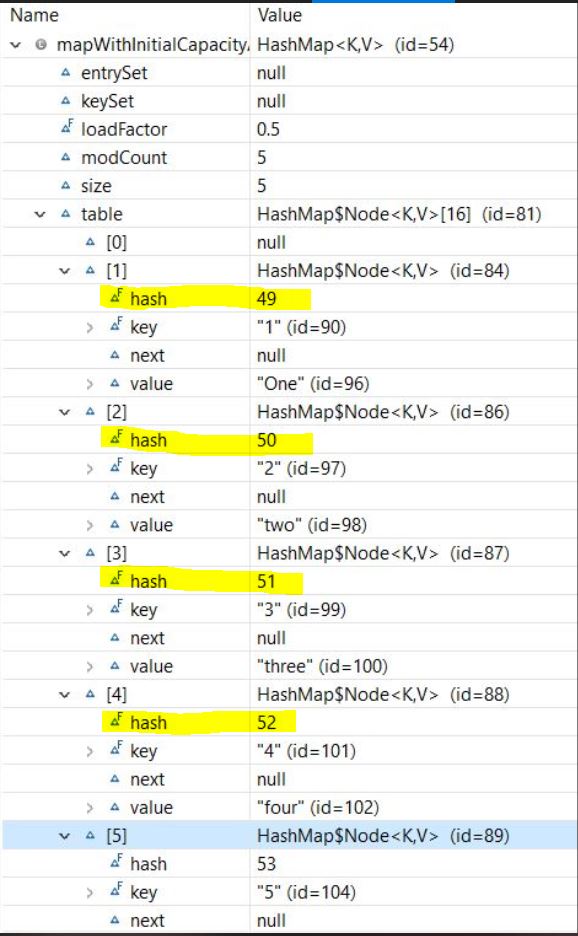

Let’s initialize our Map:

我们来初始化我们的Map。

Map<String, String> mapWithInitialCapacityAndLF = new HashMap<>(5,0.75f);

mapWithInitialCapacityAndLF.put("1", "one");

mapWithInitialCapacityAndLF.put("2", "two");

mapWithInitialCapacityAndLF.put("3", "three");

mapWithInitialCapacityAndLF.put("4", "four");

mapWithInitialCapacityAndLF.put("5", "five");And let’s take a look at the structure of the Map:

让我们看一下Map的结构。

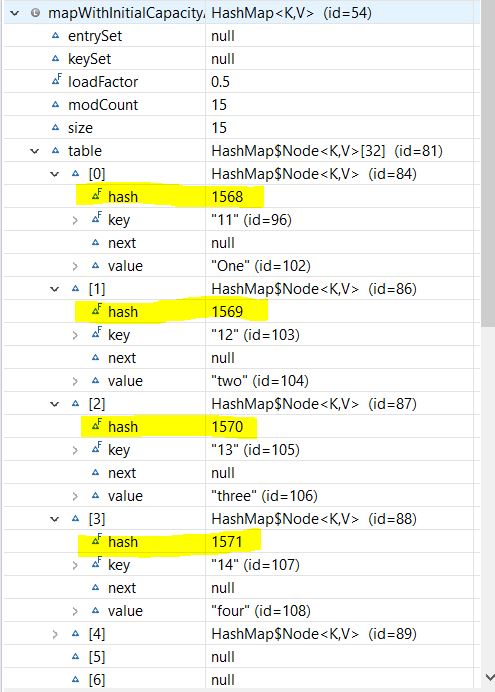

Now, let’s add more entries to our Map:

现在,让我们为我们的Map添加更多条目。

mapWithInitialCapacityAndLF.put("6", "Six");

mapWithInitialCapacityAndLF.put("7", "Seven");

//.. more entries

mapWithInitialCapacityAndLF.put("15", "fifteen");And let’s observe our Map structure again:

让我们再次观察我们的Map结构。

Although rehashing helps to keep low complexity, it’s an expensive process. If we need to store a huge amount of data, we should create our HashMap with sufficient capacity. This is more efficient than automatic rehashing.

虽然重洗有助于保持低复杂度,但这是一个昂贵的过程。如果我们需要存储大量的数据,我们应该创建有足够容量的HashMap。这比自动重新洗牌更有效。

5.3. Collision

5.3.碰撞

Collisions may occur due to a bad hash code algorithm and often slow down the performance of the Map.

由于哈希代码算法不好,可能会发生碰撞,并且经常会拖累Map的性能。

Prior to Java 8, HashMap in Java handles collision by using LinkedList to store map entries. If a key ends up in the same bucket where another entry already exists, it’s added at the head of the LinkedList. In the worst case, this will increase complexity to O(n).

在Java 8之前,Java中的HashMap通过使用LinkedList来存储地图条目来处理碰撞。如果一个键最终出现在已经存在另一个条目的同一个桶中,它将被添加到LinkedList的头部。在最坏的情况下,这将使复杂性增加到O(n)。

To avoid this issue, Java 8 and later versions use a balanced tree (also called a red-black tree) instead of a LinkedList to store collided entries. This improves the worst-case performance of HashMap from O(n) to O(log n).

为了避免这一问题,Java 8 及以后的版本使用平衡树(也称为red-black 树)而不是LinkedList来存储碰撞的条目。这使HashMap的最坏情况下的性能从O(n)提高到O(log n)。

HashMap initially uses the LinkedList. Then when the number of entries crosses a certain threshold, it will replace a LinkedList with a balanced binary tree. The TREEIFY_THRESHOLD constant decides this threshold value. Currently, this value is 8, which means if there are more than 8 elements in the same bucket, Map will use a tree to hold them.

HashMap最初使用LinkedList。当条目数超过某个阈值时,它将用平衡二叉树替换LinkedList。TREEIFY_THRESHOLD 常数决定了这个阈值。目前,这个值是8,这意味着如果同一桶中有超过8个元素,Map将使用一棵树来容纳它们。

6. Conclusion

6.结语

In this article, we discussed one of the most popular data structures: HashMap. We also saw how the load factor together with capacity affects its performance.

在这篇文章中,我们讨论了最流行的数据结构之一。HashMap。我们还看到了负载因子和容量是如何影响其性能的。

As always, the code examples for this article are available over on GitHub.

像往常一样,本文的代码示例可在GitHub上获得。