1. Overview

1.概述

In this tutorial, we’ll discuss message delivery semantics in streaming platforms.

在本教程中,我们将讨论流媒体平台的消息传递语义。

First, we’ll quickly look at events flow through the main components of the streaming platforms. Next, we’ll discuss the common reasons for data loss and duplication in such platforms. Then, we’ll focus on the three major delivery semantics available.

首先,我们将快速查看流媒体平台的主要组件的事件流。接下来,我们将讨论此类平台中数据丢失和重复的常见原因。然后,我们将重点讨论现有的三种主要交付语义。

We’ll discuss how we can achieve these semantics in streaming platforms, as well as how they deal with data loss and duplication issues.

我们将讨论我们如何在流媒体平台中实现这些语义,以及他们如何处理数据丢失和重复问题。

In each of the delivery semantics, we’ll very briefly touch upon the ways to obtain the delivery guarantees in Apache Kafka.

在每一个交付语义中,我们都会非常简要地谈及在Apache Kafka中获得交付保证的方法。

2. Basics of Streaming Platform

2.流媒体平台的基础知识

Simply put, streaming platforms like Apache Kafka and Apache ActiveMQ process events in a real-time or near real-time manner from one or multiple sources (also called producers) and pass them onto one or multiple destinations (also called consumers) for further processing, transformations, analysis, or storage.

简单地说,像Apache Kafka和Apache ActiveMQ这样的流式平台以实时或接近实时的方式处理来自一个或多个来源(也称为生产者)的事件,并将其传递到一个或多个目的地(也称为消费者),以便进一步处理、转换、分析或存储。

Producers and consumers are decoupled via brokers, and this enables scalability.

生产者和消费者通过经纪商进行解耦,这就实现了可扩展性。

Some use cases of streaming applications can be a high volume of user activity tracking in an eCommerce site, financial transactions and fraud detection in a real-time manner, autonomous mobile devices that require real-time processing, etc.

流媒体应用的一些用例可以是电子商务网站中大量的用户活动跟踪,实时的金融交易和欺诈检测,需要实时处理的自主移动设备等。

There are two important considerations in the message delivery platforms:

在信息传递平台上有两个重要的考虑因素。

- accuracy

- latency

Oftentimes, in distributed, real-time systems, we need to make trade-offs between latency and accuracy depending on what’s more important for the system.

很多时候,在分布式实时系统中,我们需要在延迟和准确性之间做出权衡,这取决于什么对系统更重要。

This is where we need to understand the delivery guarantees offered by a streaming platform out of the box or implement the desired one using message metadata and platform configurations.

这时,我们需要了解流媒体平台提供的交付保证,或使用消息元数据和平台配置实现所需的保证。

Next, let’s briefly look at the issues of data loss and duplication in streaming platforms which will then lead us to discuss delivery semantics to manage these issues

接下来,让我们简单了解一下流媒体平台中的数据丢失和重复问题,这将引导我们讨论交付语义来管理这些问题。

3. Possible Data Loss and Duplication Scenarios

3.可能出现的数据丢失和复制的情况

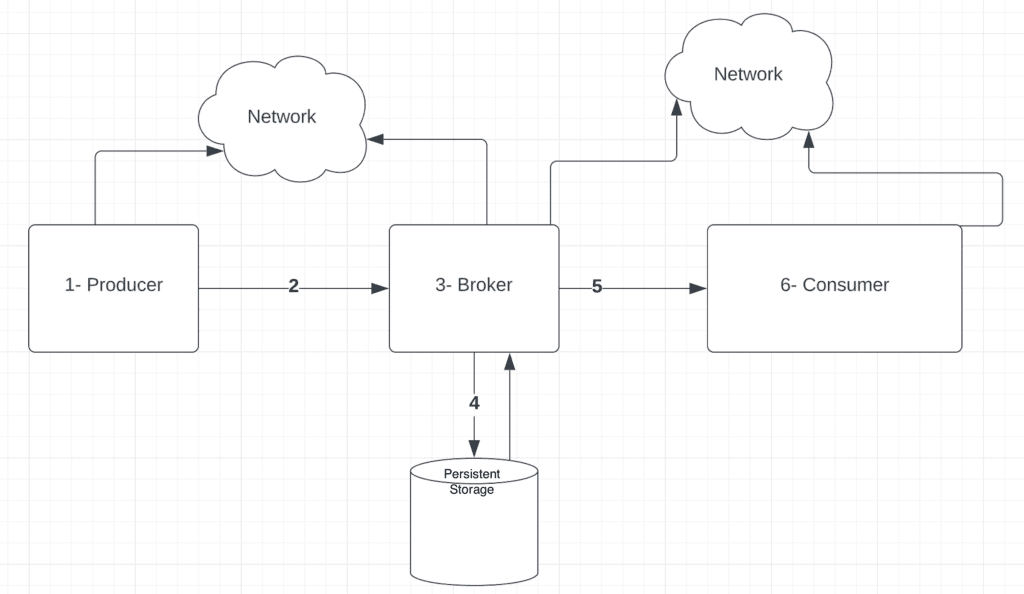

In order to understand the data loss and/or duplications in the streaming platforms, let’s quickly step back and have a look at the high-level flow of events in a streaming platform:

为了理解流媒体平台中的数据丢失和/或重复,让我们迅速退后一步,看看流媒体平台中的高级事件流:。

Here, we can see that there can be potentially multiple points of failure along the flow from a producer to the consumer.

在这里,我们可以看到,从生产者到消费者的流程中可能存在多个故障点。

Oftentimes, this results in issues like data loss, lags, and duplication of messages.

很多时候,这导致了数据丢失、滞后和信息重复等问题。

Let’s focus on each component of the diagram above and see what could go wrong and its possible consequences on the streaming system.

让我们关注上图中的每个组件,看看哪些地方可能出错,以及它对流媒体系统可能产生的后果。

3.1. Producer Failures

3.1.生产者的失败

Produces failures can lead to some issues:

产生的故障会导致一些问题。

- After a message is generated by the producer, it may fail before sending it over the network. This may cause data loss.

- The producer may fail while waiting to receive an acknowledgment from the broker. When the producer recovers, it tries to resend the message assuming missing acknowledgment from the broker. This may cause data duplication at the broker.

3.2. Network Issues Between Producer and Broker

3.2.生产者和经纪人之间的网络问题

There can be a network failure between the producer and broker:

生产者和经纪人之间可能出现网络故障。

- The producer may send a message which never gets to the broker due to network issues.

- There can also be a scenario where the broker receives the message and sends the acknowledgment, but the producer never receives the acknowledgment due to network issues.

In both these cases, the producer will resend the message, which results in data duplication at the broker.

在这两种情况下,生产者将重新发送消息,这导致经纪人的数据重复。

3.3. Broker Failures

3.3.经纪人失败

Similarly, broker failures can also cause data duplication:

同样,经纪人的失败也会造成数据的重复。

- A broker may fail after committing the message to persistent storage and before sending the acknowledgment to the producer. This can cause the resending of data from producers leading to data duplication.

- A broker may be tracking the messages consumers have read so far. The broker may fail before committing this information. This can cause consumers to read the same message multiple times leading to data duplication.

3.4. Message Persistence Issue

3.4.消息持久性问题</b

There may be a failure during writing data to the disk from the in-memory state leading to data loss.

在将数据从内存状态写入磁盘的过程中,可能出现故障,导致数据丢失。

3.5. Network Issues Between the Consumer and the Broker

3.5.消费者和经纪人之间的网络问题

There can be network failure between the broker and the consumer:

在经纪人和消费者之间可能存在网络故障。

- The consumer may never receive the message despite the broker sending the message and recording that it sent the message.

- Similarly, a consumer may send the acknowledgment after receiving the message, but the acknowledgment may never get to the broker.

In both cases, the broker may resend the message leading to data duplication

在这两种情况下,经纪人可能会重新发送消息,导致数据重复。

3.6. Consumer Failures

3.6.消费者的失败

- The consumer may fail before processing the message.

- The consumer may fail before recording in persistence storage that it processed the message.

- The consumer may also fail after recording that it processed the message but before sending the acknowledgment.

This may lead to the consumer requesting the same message from the broker again, causing data duplication.

这可能导致消费者再次向经纪人请求相同的消息,造成数据的重复。

Next, let’s look at the delivery semantics available in streaming platforms to deal with these issues to cater to individual system requirements.

接下来,让我们看看流媒体平台中可用的交付语义,以处理这些问题,满足个别系统的要求。

4. Delivery Semantics

4.交付语义</b

Delivery semantics define how streaming platforms guarantee the delivery of events from source to destination in our streaming applications.

交付语义定义了流媒体平台如何在我们的流媒体应用中保证事件从源头到目的地的交付。

There are three different delivery semantics available:

有三种不同的交付语义可供选择:

- at-most-once

- at-least-once

- exactly-once

4.1. At-Most-Once Delivery

4.1.最多一次传递

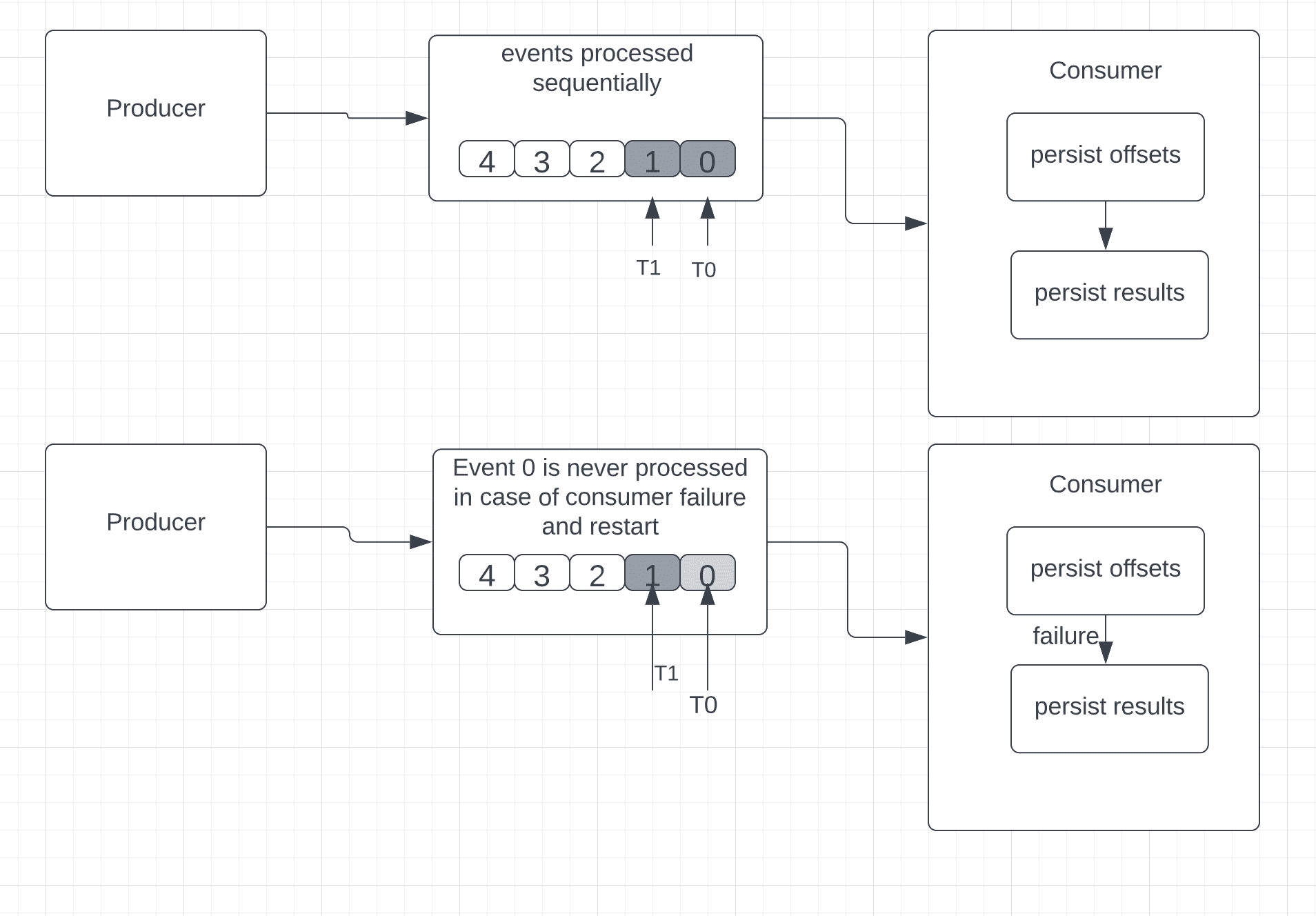

In this approach, the consumer saves the position of the last received event first and then processes it.

在这种方法中,消费者首先保存最后收到的事件的位置,然后再处理它。

Simply put, if the event processing fails in the middle, upon consumer restart, it cannot go back to read the old event.

简单地说,如果事件处理在中途失败,在消费者重新启动时,它不能再去读取旧的事件。

Consequently, there’s no guarantee of successful event processing across all received events.

因此,不能保证所有收到的事件都能成功处理。

At-most semantics are ideal for situations where some data loss is not an issue and accuracy is not mandatory.

在一些数据损失不是问题、准确性不是必须的情况下,最多语义是理想的选择。

Considering the example of Apache Kafka, which uses offsets for messages, the sequence of the At-Most-Once guarantee would be:

考虑到Apache Kafka的例子,它使用消息的偏移量,At-Most-Once保证的顺序将是。

- persist offsets

- persist results

In order to enable At-Most-Once semantics in Kafka, we’ll need to set “enable.auto.commit” to “true” at the consumer.

为了在Kafka中启用最多一次语义,我们需要在消费者处将”enable.auto.commit”设置为”true” 。

If there’s a failure and the consumer restarts, it will look at the last persisted offset. Since the offsets are persisted prior to actual event processing, we cannot establish whether every event received by the consumer was successfully processed or not. In this case, the consumer might end up missing some events.

如果发生了故障,消费者重新启动,它将查看最后一个持久化的偏移。由于偏移量是在实际事件处理之前被持久化的,我们无法确定消费者收到的每个事件是否都被成功处理了。在这种情况下,消费者最终可能会错过一些事件。

Let’s visualize this semantic:

让我们把这个语义可视化:

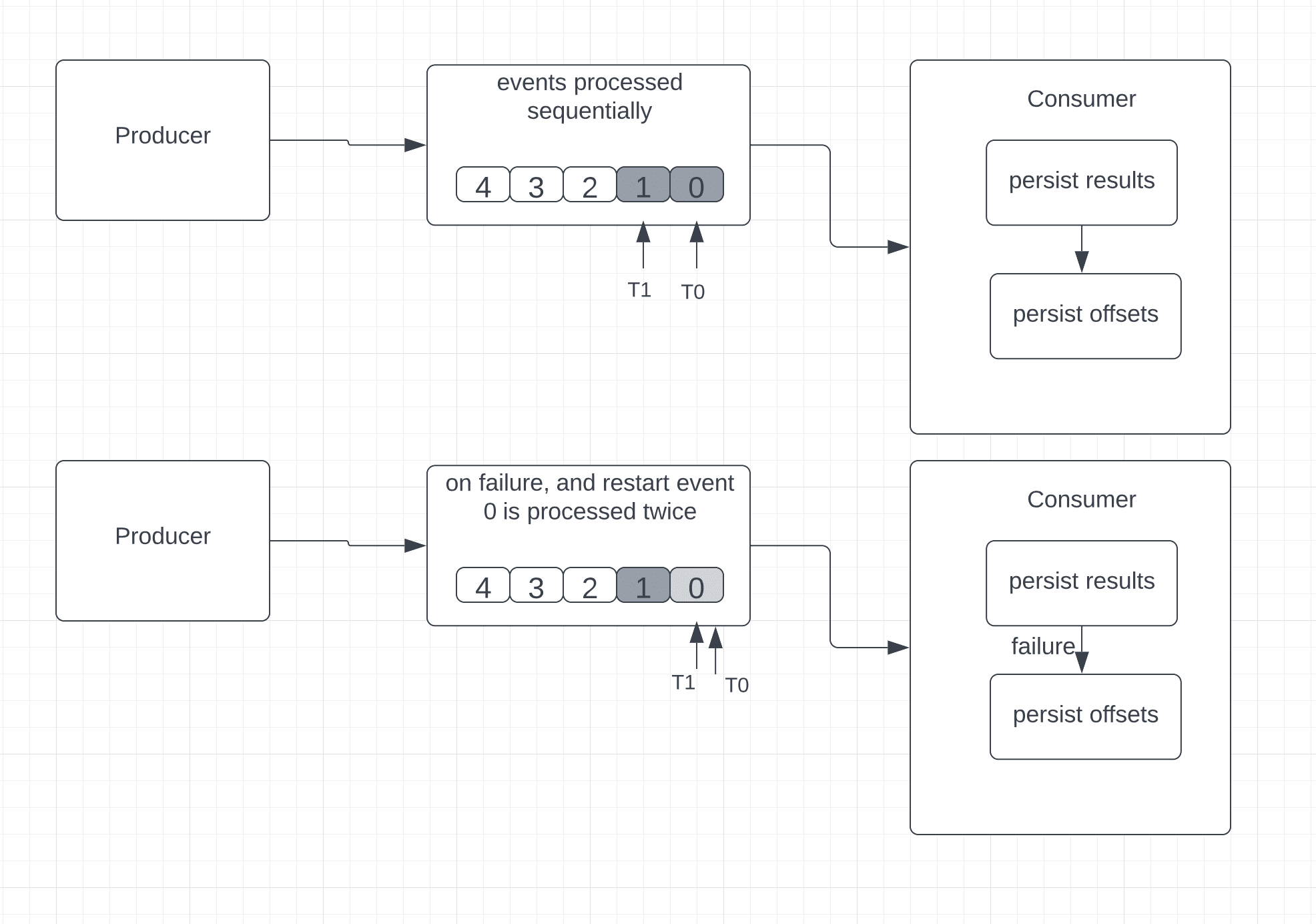

In this approach, the consumer processes the received event, persists the results somewhere, and then finally saves the position of the last received event.

在这种方法中,消费者处理收到的事件,将结果持久化在某个地方,然后最后保存最后收到的事件的位置。

Unlike at-most-once, here, in case of failure, the consumer can read and reprocess the old events.

与most-once不同的是,在这里,如果出现故障,消费者可以读取并重新处理旧事件。

In some scenarios, this can lead to data duplication. Let’s consider the example where the consumer fails after processing and saving an event but before saving the last known event position (also known as the offset).

在某些情况下,这可能导致数据重复。让我们考虑这样一个例子:消费者在处理和保存一个事件之后,但在保存最后一个已知的事件位置(也称为偏移量)之前发生故障。

The consumer would restart and read from the offset. Here, the consumer reprocesses the events more than once because even though the message was successfully processed before the failure, the position of the last received event was not saved successfully:

消费者将重新启动并从偏移量中读取。在这里,消费者重新处理了不止一次的事件,因为即使在故障前成功地处理了消息,最后收到的事件的位置也没有成功保存:。

This approach is ideal for any application that updates a ticker or gauge to show a current value. However, use cases that require accuracy in the aggregations, like sums and counters, are not ideal for at-least-once processing, mainly because duplicate events lead to incorrect results.

这种方法对于任何更新ticker或仪表以显示当前值的应用来说是理想的。然而,需要准确聚合的用例,如总和和计数器,并不适合一次性处理,主要是因为重复的事件会导致错误的结果。

Consequently, in this delivery semantic, no data is lost, but there can be situations where the same event is reprocessed.

因此,在这种传递语义中,没有数据丢失,但可能存在同一事件被重新处理的情况。

In order to avoid processing the same event multiple times, we can use idempotent consumers.

为了避免多次处理同一事件,我们可以使用idempotent消费者。

Essentially an idempotent consumer can consume a message multiple times but only processes it once.

本质上,一个空闲的消费者可以多次消费一个消息,但只处理一次。

The combination of the following approaches enables idempotent consumers in at-least-once delivery:

以下方法的组合能够使消费者在至少一次的交付中无所顾忌:。

- The producer assigns a unique messageId to each message.

- The consumer maintains a record of all processed messages in a database.

- When a new message arrives, the consumer checks it against the existing messageIds in the persistent storage table.

- In case there’s a match, the consumer updates the offset without re-consuming, sends the acknowledgment back, and effectively marks the message as consumed.

- When the event is not already present, a database transaction is started, and a new messageId is inserted. Next, this new message is processed based on whatever business logic is required. On completion of message processing, the transaction’s finally committed

In Kafka, to ensure at-least-once semantics, the producer must wait for the acknowledgment from the broker.

在Kafka中,为了确保最少一次的语义,生产者必须等待来自代理的确认。

The producer resends the message if it doesn’t receive any acknowledgment from the broker.

如果生产者没有收到经纪人的任何确认,就会重新发送消息。

Additionally, as the producer writes messages to the broker in batches, if that write fails and the producer retries, messages within the batch may be written more than once in Kafka.

此外,由于生产者分批向经纪人写入消息,如果该次写入失败,生产者重试,该批中的消息可能在Kafka中被写入不止一次。

However, to avoid duplication, Kafka introduced the feature of the idempotent producer.

然而,为了避免重复,Kafka引入了idempotent producer的功能。

Essentially, in order to enable at-least-once semantics in Kafka, we’ll need to:

基本上,为了在Kafka中启用一次性语义,我们需要。

- set the property “ack” to value “1” on the producer side

- set “enable.auto.commit” property to value “false” on the consumer side.

- set “enable.idempotence” property to value “true“

- attach the sequence number and producer id to each message from the producer

Kafka Broker can identify the message duplication on a topic using the sequence number and producer id.

Kafka Broker可以使用序列号和生产者ID来识别一个主题上的消息重复。

4.3. Exactly-Once Delivery

4.3.一次性交货

This delivery guarantee is similar to at-least-once semantics. First, the event received is processed, and then the results are stored somewhere. In case of failure and restart, the consumer can reread and reprocess the old events. However, unlike at-least-once processing, any duplicate events are dropped and not processed, resulting in exactly-once processing.

这种交付保证类似于at-least-once语义。首先,收到的事件被处理,然后将结果存储在某个地方。在失败和重启的情况下,消费者可以重读并重新处理旧事件。然而,u与最少一次处理不同,任何重复的事件都会被丢弃而不被处理,从而导致完全一次处理。

This is ideal for any application in which accuracy is important, such as applications involving aggregations such as accurate counters or anything else that needs an event processed only once and without loss.

这对于任何对准确性要求很高的应用来说都是非常理想的,例如涉及聚合的应用,如精确的计数器或其他任何需要对事件只处理一次且无损失的应用。

The sequence proceeds as follows:

顺序如下:

- persist results

- persist offsets

Let’s see what happens when a consumer fails after processing the events but without saving the offsets in the diagram below:

让我们看看当消费者在处理事件后失败,但没有保存下图中的偏移量时会发生什么:

We can remove duplication in exactly-once semantics by having these:

我们可以通过拥有这些来消除完全一次性语义中的重复。

- idempotent updates – we’ll save results on a unique key or ID that is generated. In the case of duplication, the generated key or ID will already be in the results (a database, for example), so the consumer can remove the duplicate without updating the results

- transactional updates – we’ll save the results in batches that require a transaction to begin and a transaction to commit, so in the event of a commit, the events will be successfully processed. Here we will be simply dropping the duplicate events without any results update.

Let’s see what we need to do to enable exactly-once semantics in Kafka:

让我们看看我们需要做些什么来启用Kafka中的exactly-once语义。

- enable idempotent producer and transactional feature on the producer by setting the unique value for “transaction.id” for each producer

- enable transaction feature at the consumer by setting property “isolation.level” to value “read_committed“

5. Conclusion

5.总结

In this article, we’ve seen the differences between the three delivery semantics used in streaming platforms.

在这篇文章中,我们已经看到了流媒体平台中使用的三种交付语义之间的差异。

After a brief overview of event flow in a streaming platform, we looked at the data loss and duplication issues. Then, we saw how to mitigate these issues using various delivery semantics. We then looked into at-least-once delivery, followed by at-most-once and finally exactly-once delivery semantics.

在简要介绍了流媒体平台中的事件流后,我们看了数据丢失和重复问题。然后,我们看到如何使用各种交付语义来缓解这些问题。然后,我们研究了最少一次的交付,接着是最多一次的交付,最后是完全一次的交付语义。