1. Overview

In this tutorial, we’ll explore how we can improve client retries with two different strategies: exponential backoff and jitter.

2. Retry

In a distributed system, network communication among the numerous components can fail anytime. Client applications deal with these failures by implementing retries.

Let’s assume that we have a client application that invokes a remote service – the PingPongService.

    interface PingPongService {
        String call(String ping) throws PingPongServiceException;
    }

The client application must retry if the PingPongService throws a PingPongServiceException. In the following sections, we’ll look at ways to implement client retries.
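Before bringing in a library, it can help to see what a bare retry looks like. The following is a minimal sketch in plain Java, not the approach used in the rest of this tutorial. The class name NaiveRetry, the helper callWithRetry, and the flaky service are hypothetical, and we assume here that PingPongServiceException is an unchecked exception:

```java
class NaiveRetry {

    /** Hypothetical stand-in for the tutorial's exception; assumed to be unchecked. */
    static class PingPongServiceException extends RuntimeException {
        PingPongServiceException(String message) {
            super(message);
        }
    }

    /** Mirrors the PingPongService interface shown above. */
    interface PingPongService {
        String call(String ping) throws PingPongServiceException;
    }

    /** Calls the service up to maxAttempts times, retrying immediately after each failure. */
    static String callWithRetry(PingPongService service, String ping, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        PingPongServiceException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return service.call(ping);
            } catch (PingPongServiceException e) {
                last = e; // remember the failure and try again without waiting
            }
        }
        throw last; // every attempt failed
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Hypothetical flaky service: fails twice, then succeeds
        PingPongService flaky = ping -> {
            if (++calls[0] < 3) {
                throw new PingPongServiceException("unavailable");
            }
            return "pong";
        };
        // prints "pong after 3 calls"
        System.out.println(callWithRetry(flaky, "Hello", 5) + " after " + calls[0] + " calls");
    }
}
```

Note that this loop retries without any pause between attempts.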

3. Resilience4j Retry

For our example, we’ll be using the Resilience4j library, particularly its retry module. We’ll need to add the resilience4j-retry module to our pom.xml:

    <dependency>
        <groupId>io.github.resilience4j</groupId>
        <artifactId>resilience4j-retry</artifactId>
    </dependency>

For a refresher on using retries, don’t forget to check out our Guide to Resilience4j.

4. Exponential Backoff

Client applications must implement retries responsibly. When clients retry failed calls without waiting, they may overwhelm the system, and contribute to further degradation of the service that is already under distress.

Exponential backoff is a common strategy for handling retries of failed network calls. In simple terms, the clients wait progressively longer intervals between consecutive retries:

wait_interval = base * multiplier^n

where:

- base is the initial interval, i.e., the wait before the first retry
- n is the number of failures that have occurred
- multiplier is an arbitrary multiplier that can be replaced with any suitable value

With this approach, we provide a breathing space to the system to recover from intermittent failures, or even more severe problems.
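To make the formula concrete, here is a small sketch that evaluates it for the first few retries. The class and method names are hypothetical, the 1000 ms base and the multiplier of 2 are illustrative values, and we assume n starts at 0 for the first retry so that the first wait equals base:

```java
class BackoffIntervals {

    /** wait_interval = base * multiplier^n, with n = 0 for the first retry (an assumption). */
    static long waitInterval(long baseMillis, double multiplier, int n) {
        return (long) (baseMillis * Math.pow(multiplier, n));
    }

    public static void main(String[] args) {
        // Illustrative values: base = 1000 ms, multiplier = 2
        for (int n = 0; n < 4; n++) {
            System.out.println("retry " + (n + 1) + ": wait " + waitInterval(1000, 2.0, n) + " ms");
        }
        // prints waits of 1000, 2000, 4000 and 8000 ms
    }
}
```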

We can use the exponential backoff algorithm in Resilience4j retry by configuring its IntervalFunction, which accepts an initialInterval and a multiplier.

The IntervalFunction is used by the retry mechanism as a sleep function:

    // Wait INITIAL_INTERVAL before the first retry, then multiply the wait by MULTIPLIER after each failure
    IntervalFunction intervalFn =
      IntervalFunction.ofExponentialBackoff(INITIAL_INTERVAL, MULTIPLIER);

    RetryConfig retryConfig = RetryConfig.custom()
      .maxAttempts(MAX_RETRIES)
      .intervalFunction(intervalFn)
      .build();
    Retry retry = Retry.of("pingpong", retryConfig);

    // Decorate the remote call so that failed invocations are retried
    Function<String, String> pingPongFn = Retry
      .decorateFunction(retry, ping -> service.call(ping));
    pingPongFn.apply("Hello");
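To illustrate the idea of an interval function acting as a sleep function, here is a plain-Java sketch of a retry loop that asks a function how long to wait before each new attempt. It is not Resilience4j's implementation. The names BackoffRetry, retry, and sleeper are hypothetical, and the sleeper only records the waits so that the example finishes instantly:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;
import java.util.function.IntToLongFunction;
import java.util.function.LongConsumer;

class BackoffRetry {

    /**
     * Applies fn to input, retrying on RuntimeException up to maxAttempts calls in total.
     * After the k-th failed attempt, it waits intervalFn(k) milliseconds via the sleeper.
     */
    static <T, R> R retry(Function<T, R> fn, T input, int maxAttempts,
                          IntToLongFunction intervalFn, LongConsumer sleeper) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        for (int attempt = 1; ; attempt++) {
            try {
                return fn.apply(input);
            } catch (RuntimeException e) {
                if (attempt >= maxAttempts) {
                    throw e; // attempts exhausted, give up
                }
                sleeper.accept(intervalFn.applyAsLong(attempt)); // back off before the next attempt
            }
        }
    }

    public static void main(String[] args) {
        List<Long> waits = new ArrayList<>();
        int[] calls = {0};
        // Hypothetical flaky function: fails three times, then succeeds
        Function<String, String> flaky = ping -> {
            if (++calls[0] < 4) {
                throw new IllegalStateException("unavailable");
            }
            return "pong";
        };
        // 1000 ms, doubled after every failure; the sleeper records the wait instead of sleeping
        String result = retry(flaky, "Hello", 4, attempt -> 1000L << (attempt - 1), waits::add);
        System.out.println(result + " after waits " + waits); // prints "pong after waits [1000, 2000, 4000]"
    }
}
```

In a real client, the sleeper would block the calling thread (for example with Thread.sleep) rather than record the value.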

Let’s simulate a real-world scenario, and assume that we have several clients invoking the PingPongService concurrently:

    ExecutorService executors = newFixedThreadPool(NUM_CONCURRENT_CLIENTS);
    List<Callable<String>> tasks = nCopies(NUM_CONCURRENT_CLIENTS, () -> pingPongFn.apply("Hello"));
    executors.invokeAll(tasks);

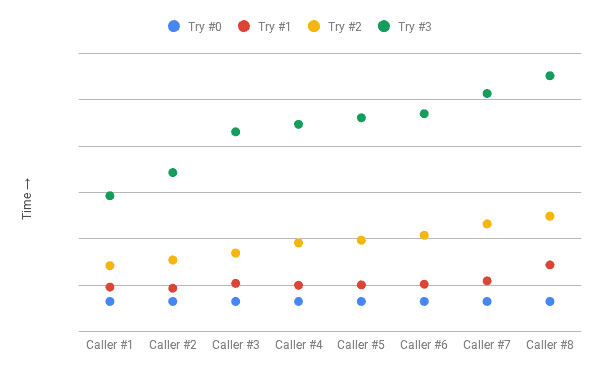

Let’s look at the remote invocation logs for NUM_CONCURRENT_CLIENTS equal to 4:

    [thread-1] At 00:37:42.756
    [thread-2] At 00:37:42.756
    [thread-3] At 00:37:42.756
    [thread-4] At 00:37:42.756
    [thread-2] At 00:37:43.802
    [thread-4] At 00:37:43.802
    [thread-1] At 00:37:43.802
    [thread-3] At 00:37:43.802
    [thread-2] At 00:37:45.803
    [thread-1] At 00:37:45.803
    [thread-4] At 00:37:45.803
    [thread-3] At 00:37:45.803
    [thread-2] At 00:37:49.808
    [thread-3] At 00:37:49.808
    [thread-4] At 00:37:49.808
    [thread-1] At 00:37:49.808

We can see a clear pattern here – the clients wait for exponentially growing intervals, but all of them call the remote service at precisely the same time on each retry (collisions).

We have addressed only part of the problem – we no longer hammer the remote service with retries, but instead of spreading the workload over time, we have merely interleaved bursts of work with longer idle periods. This behavior is akin to the Thundering Herd Problem.
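We can reproduce this pattern without any network calls. The sketch below assumes that every client starts at the same instant and uses a 1000 ms base with a multiplier of 2; the class and method names are hypothetical. It counts how many clients call the service at each offset from the start:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class SynchronizedBackoff {

    /** Offsets (ms since the first call) at which a single client invokes the service. */
    static List<Long> callTimes(long baseMillis, double multiplier, int attempts) {
        List<Long> times = new ArrayList<>();
        long now = 0;
        for (int n = 0; n < attempts; n++) {
            times.add(now);
            now += (long) (baseMillis * Math.pow(multiplier, n)); // wait before the next attempt
        }
        return times;
    }

    /** Number of clients calling at each offset when all clients start together. */
    static Map<Long, Integer> load(int clients, long baseMillis, double multiplier, int attempts) {
        Map<Long, Integer> load = new TreeMap<>();
        for (int c = 0; c < clients; c++) {
            for (long t : callTimes(baseMillis, multiplier, attempts)) {
                load.merge(t, 1, Integer::sum);
            }
        }
        return load;
    }

    public static void main(String[] args) {
        // prints {0=4, 1000=4, 3000=4, 7000=4}
        System.out.println(load(4, 1000, 2.0, 4));
    }
}
```

Every client computes exactly the same schedule, so all four calls land on the same offsets and the service sees nothing in between.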

5. Introducing Jitter

In our previous approach, the client waits are progressively longer but still synchronized. Adding jitter provides a way to break the synchronization across the clients thereby avoiding collisions. In this approach, we add randomness to the wait intervals.

wait_interval = (base * multiplier^n) +/- (random_interval)

where random_interval is added (or subtracted) to break the synchronization across clients.

We won’t go into the mechanics of computing the random interval, but the randomization must smooth the spikes out into a much more even distribution of client calls.
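For intuition only, here is one simple way to randomize an interval: pick a value uniformly within a fraction of the exponential interval on either side. This is a sketch under our own assumptions, not necessarily how any particular library computes it. The names JitteredBackoff and jitteredInterval, the 0.5 factor, and the fixed seeds are hypothetical choices:

```java
import java.util.Random;

class JitteredBackoff {

    /**
     * Returns base * multiplier^n, randomized uniformly within
     * +/- randomizationFactor of that value.
     */
    static long jitteredInterval(long baseMillis, double multiplier, int n,
                                 double randomizationFactor, Random random) {
        double interval = baseMillis * Math.pow(multiplier, n);
        double delta = randomizationFactor * interval;
        // Uniform value in [interval - delta, interval + delta)
        return (long) (interval - delta + random.nextDouble() * 2 * delta);
    }

    public static void main(String[] args) {
        for (int client = 1; client <= 4; client++) {
            Random random = new Random(client); // fixed seeds only to keep the sketch repeatable
            StringBuilder waits = new StringBuilder();
            for (int n = 0; n < 4; n++) {
                waits.append(jitteredInterval(1000, 2.0, n, 0.5, random)).append(" ms ");
            }
            System.out.println("client " + client + ": " + waits.toString().trim());
        }
    }
}
```

Because each client draws its own random values, the waits still grow roughly exponentially, but the clients no longer wake up at the same instant.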

We can use the exponential backoff with jitter in Resilience4j retry by configuring an exponential random backoff IntervalFunction that also accepts a randomizationFactor:

    IntervalFunction intervalFn =
      IntervalFunction.ofExponentialRandomBackoff(INITIAL_INTERVAL, MULTIPLIER, RANDOMIZATION_FACTOR);

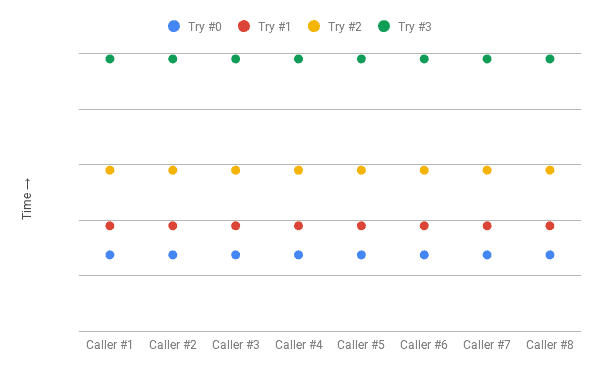

Let’s return to our real-world scenario, and look at the remote invocation logs with jitter:

    [thread-2] At 39:21.297
    [thread-4] At 39:21.297
    [thread-3] At 39:21.297
    [thread-1] At 39:21.297
    [thread-2] At 39:21.918
    [thread-3] At 39:21.868
    [thread-4] At 39:22.011
    [thread-1] At 39:22.184
    [thread-1] At 39:23.086
    [thread-5] At 39:23.939
    [thread-3] At 39:24.152
    [thread-4] At 39:24.977
    [thread-3] At 39:26.861
    [thread-1] At 39:28.617
    [thread-4] At 39:28.942
    [thread-2] At 39:31.039

Now we have a much better spread. We have eliminated both collisions and idle time, and end up with an almost constant rate of client calls, barring the initial surge.

Note: We’ve overstated the interval for illustration, and in real-world scenarios, we would have smaller gaps.

6. Conclusion

In this tutorial, we’ve explored how client applications can retry failed calls more effectively by augmenting exponential backoff with jitter.

The source code for the samples used in the tutorial is available over on GitHub.
