1. Overview

1.概述

In this tutorial, we’ll understand how to leverage Apache Spark MLlib to develop machine learning products. We’ll develop a simple machine learning product with Spark MLlib to demonstrate the core concepts.

在本教程中,我们将了解如何利用Apache Spark MLlib>来开发机器学习产品。我们将利用Spark MLlib开发一个简单的机器学习产品来演示核心概念。

2. A Brief Primer to Machine Learning

2.机器学习的简单入门

Machine Learning is part of a broader umbrella known as Artificial Intelligence. Machine learning refers to the study of statistical models to solve specific problems with patterns and inferences. These models are “trained” for the specific problem by the means of training data drawn from the problem space.

机器学习是被称为人工智能的更广泛总括的一部分。机器学习是指研究统计模型以解决特定问题的模式和推论。这些模型是通过从问题空间提取的训练数据为特定问题 “训练 “的。

We’ll see what exactly this definition entails as we take on our example.

在我们的例子中,我们将看到这个定义的具体内容。

2.1. Machine Learning Categories

2.1.机器学习类别

We can broadly categorize machine learning into supervised and unsupervised categories based on the approach. There are other categories as well, but we’ll keep ourselves to these two:

我们可以根据方法大致将机器学习分为有监督和无监督类别。还有其他类别,但我们将保持自己在这两个类别。

- Supervised learning works with a set of data that contains both the inputs and the desired output — for instance, a data set containing various characteristics of a property and the expected rental income. Supervised learning is further divided into two broad sub-categories called classification and regression:

- Classification algorithms are related to categorical output, like whether a property is occupied or not

- Regression algorithms are related to a continuous output range, like the value of a property

- Unsupervised learning, on the other hand, works with a set of data which only have input values. It works by trying to identify the inherent structure in the input data. For instance, finding different types of consumers through a data set of their consumption behavior.

2.2. Machine Learning Workflow

2.2.机器学习工作流程

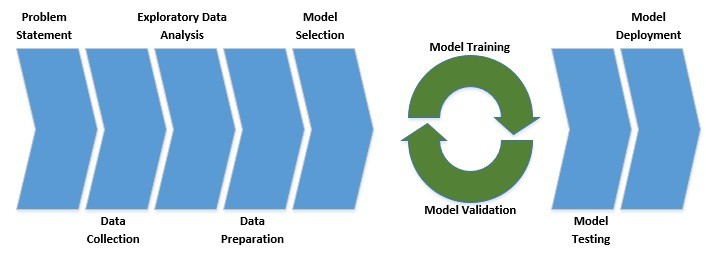

Machine learning is truly an inter-disciplinary area of study. It requires knowledge of the business domain, statistics, probability, linear algebra, and programming. As this can clearly get overwhelming, it’s best to approach this in an orderly fashion, what we typically call a machine learning workflow:

机器学习确实是一个跨学科的研究领域。它需要商业领域、统计学、概率、线性代数和编程方面的知识。由于这显然会让人不知所措,最好以一种有序的方式来处理这个问题,我们通常称之为机器学习工作流程。

As we can see, every machine learning project should start with a clearly defined problem statement. This should be followed by a series of steps related to data that can potentially answer the problem.

正如我们所看到的,每个机器学习项目都应该从一个明确定义的问题陈述开始。之后应该有一系列与可能回答该问题的数据有关的步骤。

Then we typically select a model looking at the nature of the problem. This is followed by a series of model training and validation, which is known as model fine-tuning. Finally, we test the model on previously unseen data and deploy it to production if satisfactory.

然后我们通常会根据问题的性质来选择一个模型。接下来是一系列的模型训练和验证,这被称为模型微调。最后,我们在以前未见过的数据上测试该模型,如果满意,则将其部署到生产中。

3. What Is Spark MLlib?

3.什么是Spark MLlib?

Spark MLlib is a module on top of Spark Core that provides machine learning primitives as APIs. Machine learning typically deals with a large amount of data for model training.

Spark MLlib是Spark Core之上的一个模块,它以API的形式提供机器学习原语。机器学习通常要处理大量的数据进行模型训练。

The base computing framework from Spark is a huge benefit. On top of this, MLlib provides most of the popular machine learning and statistical algorithms. This greatly simplifies the task of working on a large-scale machine learning project.

来自Spark的基础计算框架是一个巨大的好处。在此基础上,MLlib提供了大部分流行的机器学习和统计算法。这大大简化了从事大规模机器学习项目的任务。

4. Machine Learning with MLlib

4.用MLlib进行机器学习

We now have enough context on machine learning and how MLlib can help in this endeavor. Let’s get started with our basic example of implementing a machine learning project with Spark MLlib.

我们现在已经有了足够的关于机器学习的背景,以及MLlib如何在这一努力中提供帮助。让我们开始用Spark MLlib实现一个机器学习项目的基本例子。

If we recall from our discussion on machine learning workflow, we should start with a problem statement and then move on to data. Fortunately for us, we’ll pick the “hello world” of machine learning, Iris Dataset. This is a multivariate labeled dataset, consisting of length and width of sepals and petals of different species of Iris.

如果我们回顾一下我们关于机器学习工作流程的讨论,我们应该从问题陈述开始,然后再进入数据。幸运的是,我们将选择机器学习的 “你好世界”,Iris Dataset。这是一个多变量标记的数据集,由不同种类的鸢尾花的萼片和花瓣的长度和宽度组成。

This gives our problem objective: can we predict the species of an Iris from the length and width of its sepal and petal?

这就为我们的问题提供了目标。我们能否根据萼片和花瓣的长度和宽度预测鸢尾花的种类?

4.1. Setting the Dependencies

4.1.设置依赖关系

First, we have to define the following dependency in Maven to pull the relevant libraries:

首先,我们必须在Maven中定义以下依赖关系,以提取相关库。

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mllib_2.11</artifactId>

<version>2.4.3</version>

<scope>provided</scope>

</dependency>And we need to initialize the SparkContext to work with Spark APIs:

而我们需要初始化SparkContext,以便与Spark APIs一起工作。

SparkConf conf = new SparkConf()

.setAppName("Main")

.setMaster("local[2]");

JavaSparkContext sc = new JavaSparkContext(conf);4.2. Loading the Data

4.2.加载数据

First things first, we should download the data, which is available as a text file in CSV format. Then we have to load this data in Spark:

首先,我们应该下载数据,它可以是CSV格式的文本文件。然后,我们必须在Spark中加载这些数据。

String dataFile = "data\\iris.data";

JavaRDD<String> data = sc.textFile(dataFile);Spark MLlib offers several data types, both local and distributed, to represent the input data and corresponding labels. The simplest of the data types are Vector:

Spark MLlib提供了几种数据类型,包括本地的和分布式的,以表示输入数据和相应的标签。其中最简单的数据类型是Vector。

JavaRDD<Vector> inputData = data

.map(line -> {

String[] parts = line.split(",");

double[] v = new double[parts.length - 1];

for (int i = 0; i < parts.length - 1; i++) {

v[i] = Double.parseDouble(parts[i]);

}

return Vectors.dense(v);

});Note that we’ve included only the input features here, mostly to perform statistical analysis.

请注意,我们在这里只包括输入特征,主要是为了进行统计分析。

A training example typically consists of multiple input features and a label, represented by the class LabeledPoint:

一个训练实例通常包括多个输入特征和一个标签,由LabeledPoint类代表。

Map<String, Integer> map = new HashMap<>();

map.put("Iris-setosa", 0);

map.put("Iris-versicolor", 1);

map.put("Iris-virginica", 2);

JavaRDD<LabeledPoint> labeledData = data

.map(line -> {

String[] parts = line.split(",");

double[] v = new double[parts.length - 1];

for (int i = 0; i < parts.length - 1; i++) {

v[i] = Double.parseDouble(parts[i]);

}

return new LabeledPoint(map.get(parts[parts.length - 1]), Vectors.dense(v));

});Our output label in the dataset is textual, signifying the species of Iris. To feed this into a machine learning model, we have to convert this into numeric values.

我们在数据集中的输出标签是文本的,标志着鸢尾花的种类。为了将其输入机器学习模型,我们必须将其转换为数字值。

4.3. Exploratory Data Analysis

4.3.探索性数据分析

Exploratory data analysis involves analyzing the available data. Now, machine learning algorithms are sensitive towards data quality, hence a higher quality data has better prospects for delivering the desired outcome.

探索性数据分析涉及到对现有数据的分析。现在,机器学习算法对数据质量很敏感,因此,高质量的数据有更好的前景来提供所需的结果。

Typical analysis objectives include removing anomalies and detecting patterns. This even feeds into the critical steps of feature engineering to arrive at useful features from the available data.

典型的分析目标包括消除异常现象和检测模式。这甚至可以反馈到特征工程的关键步骤中,以便从现有数据中得出有用的特征。

Our dataset, in this example, is small and well-formed. Hence we don’t have to indulge in a lot of data analysis. Spark MLlib, however, is equipped with APIs to offer quite an insight.

在这个例子中,我们的数据集很小,而且形式良好。因此,我们没有必要沉迷于大量的数据分析。然而,Spark MLlib配备了API,可以提供相当的洞察力。

Let’s begin with some simple statistical analysis:

让我们从一些简单的统计分析开始。

MultivariateStatisticalSummary summary = Statistics.colStats(inputData.rdd());

System.out.println("Summary Mean:");

System.out.println(summary.mean());

System.out.println("Summary Variance:");

System.out.println(summary.variance());

System.out.println("Summary Non-zero:");

System.out.println(summary.numNonzeros());Here, we’re observing the mean and variance of the features we have. This is helpful in determining if we need to perform normalization of features. It’s useful to have all features on a similar scale. We are also taking a note of non-zero values, which can adversely impact model performance.

在这里,我们要观察我们所拥有的特征的平均值和方差。这有助于确定我们是否需要对特征进行规范化处理。让所有的特征处于一个类似的尺度上是很有用的。我们还注意到非零值,这可能会对模型性能产生不利影响。

Here is the output for our input data:

下面是我们输入数据的输出。

Summary Mean:

[5.843333333333332,3.0540000000000003,3.7586666666666666,1.1986666666666668]

Summary Variance:

[0.6856935123042509,0.18800402684563744,3.113179418344516,0.5824143176733783]

Summary Non-zero:

[150.0,150.0,150.0,150.0]Another important metric to analyze is the correlation between features in the input data:

另一个需要分析的重要指标是输入数据中的特征之间的相关性。

Matrix correlMatrix = Statistics.corr(inputData.rdd(), "pearson");

System.out.println("Correlation Matrix:");

System.out.println(correlMatrix.toString());A high correlation between any two features suggests they are not adding any incremental value and one of them can be dropped. Here is how our features are correlated:

任何两个特征之间的高相关性表明它们没有增加任何增量价值,其中一个可以被放弃。下面是我们的特征是如何关联的。

Correlation Matrix:

1.0 -0.10936924995064387 0.8717541573048727 0.8179536333691672

-0.10936924995064387 1.0 -0.4205160964011671 -0.3565440896138163

0.8717541573048727 -0.4205160964011671 1.0 0.9627570970509661

0.8179536333691672 -0.3565440896138163 0.9627570970509661 1.04.4. Splitting the Data

4.4.分割数据

If we recall our discussion of machine learning workflow, it involves several iterations of model training and validation followed by final testing.

如果我们回顾一下我们对机器学习工作流程的讨论,它涉及到模型训练和验证的几个迭代,然后是最终测试。

For this to happen, we have to split our training data into training, validation, and test sets. To keep things simple, we’ll skip the validation part. So, let’s split our data into training and test sets:

要做到这一点,我们必须将我们的训练数据分成训练、验证和测试集。为了保持简单,我们将跳过验证的部分。所以,让我们把我们的数据分成训练集和测试集。

JavaRDD<LabeledPoint>[] splits = parsedData.randomSplit(new double[] { 0.8, 0.2 }, 11L);

JavaRDD<LabeledPoint> trainingData = splits[0];

JavaRDD<LabeledPoint> testData = splits[1];4.5. Model Training

4.5.模型训练

So, we’ve reached a stage where we’ve analyzed and prepared our dataset. All that’s left is to feed this into a model and start the magic! Well, easier said than done. We need to pick a suitable algorithm for our problem – recall the different categories of machine learning we spoke of earlier.

所以,我们已经达到了分析和准备我们的数据集的阶段。剩下的就是把这些数据输入到模型中,然后开始施展魔法!说起来容易做起来难。嗯,说起来容易做起来难。我们需要为我们的问题挑选一个合适的算法–回顾一下我们之前谈到的机器学习的不同类别。

It isn’t difficult to understand that our problem fits into classification within the supervised category. Now, there are quite a few algorithms available for use under this category.

不难理解,我们的问题符合监督类别中的分类。现在,这个类别下有相当多的算法可供使用。

The simplest of them is Logistic Regression (let the word regression not confuse us; it is, after all, a classification algorithm):

其中最简单的是Logistic回归(让回归这个词不要迷惑我们,它毕竟是一种分类算法)。

LogisticRegressionModel model = new LogisticRegressionWithLBFGS()

.setNumClasses(3)

.run(trainingData.rdd());Here, we are using a three-class Limited Memory BFGS based classifier. The details of this algorithm are beyond the scope of this tutorial, but this is one of the most widely used ones.

在这里,我们使用的是一个基于三类有限内存BFGS的分类器。这种算法的细节超出了本教程的范围,但这是最广泛使用的算法之一。

4.6. Model Evaluation

4.6.模型评估

Remember that model training involves multiple iterations, but for simplicity, we’ve just used a single pass here. Now that we’ve trained our model, it’s time to test this on the test dataset:

请记住,模型训练涉及多次迭代,但为了简单起见,我们在这里只用了一次。现在我们已经训练了我们的模型,是时候在测试数据集上测试一下了。

JavaPairRDD<Object, Object> predictionAndLabels = testData

.mapToPair(p -> new Tuple2<>(model.predict(p.features()), p.label()));

MulticlassMetrics metrics = new MulticlassMetrics(predictionAndLabels.rdd());

double accuracy = metrics.accuracy();

System.out.println("Model Accuracy on Test Data: " + accuracy);Now, how do we measure the effectiveness of a model? There are several metrics that we can use, but one of the simplest is Accuracy. Simply put, accuracy is a ratio of the correct number of predictions and the total number of predictions. Here is what we can achieve in a single run of our model:

现在,我们如何衡量一个模型的有效性?有几个指标可以使用,但其中一个最简单的指标是准确率。简单地说,准确率是正确的预测数与总预测数的比率。下面是我们的模型在一次运行中可以达到的效果。

Model Accuracy on Test Data: 0.9310344827586207Note that this will vary slightly from run to run due to the stochastic nature of the algorithm.

请注意,由于算法的随机性,这在不同的运行中会略有不同。

However, accuracy is not a very effective metric in some problem domains. Other more sophisticated metrics are Precision and Recall (F1 Score), ROC Curve, and Confusion Matrix.

然而,在某些问题领域,准确度并不是一个非常有效的指标。其他更复杂的指标是精确度和召回率(F1得分)、ROC曲线和混淆矩阵。

4.7. Saving and Loading the Model

4.7.保存和加载模型

Finally, we often need to save the trained model to the filesystem and load it for prediction on production data. This is trivial in Spark:

最后,我们经常需要把训练好的模型保存到文件系统中,然后加载到生产数据上进行预测。这在Spark中是微不足道的。

model.save(sc, "model\\logistic-regression");

LogisticRegressionModel sameModel = LogisticRegressionModel

.load(sc, "model\\logistic-regression");

Vector newData = Vectors.dense(new double[]{1,1,1,1});

double prediction = sameModel.predict(newData);

System.out.println("Model Prediction on New Data = " + prediction);So, we’re saving the model to the filesystem and loading it back. After loading, the model can be straight away used to predict output on new data. Here is a sample prediction on random new data:

所以,我们要把模型保存到文件系统中,然后把它加载回来。加载后,该模型可以直接用于预测新数据的输出。下面是一个对随机的新数据进行预测的例子。

Model Prediction on New Data = 2.05. Beyond the Primitive Example

5.超越原始的例子

While the example we went through covers the workflow of a machine learning project broadly, it leaves a lot of subtle and important points. While it isn’t possible to discuss them in detail here, we can certainly go through some of the important ones.

虽然我们经历的例子大致涵盖了机器学习项目的工作流程,但它留下了很多微妙而重要的要点。虽然在这里不可能详细讨论它们,但我们当然可以通过一些重要的内容。

Spark MLlib through its APIs has extensive support in all these areas.

Spark MLlib通过其API在所有这些领域都有广泛的支持。

5.1. Model Selection

5.1.模型选择

Model selection is often one of the complex and critical tasks. Training a model is an involved process and is much better to do on a model that we’re more confident will produce the desired results.

模型选择往往是复杂而关键的任务之一。训练一个模型是一个复杂的过程,在一个我们更有信心能产生预期结果的模型上进行训练要好得多。

While the nature of the problem can help us identify the category of machine learning algorithm to pick from, it isn’t a job fully done. Within a category like classification, as we saw earlier, there are often many possible different algorithms and their variations to choose from.

虽然问题的性质可以帮助我们确定要挑选的机器学习算法的类别,但这并不是一项完全的工作。正如我们之前看到的,在分类这样的类别中,往往有许多可能的不同算法及其变化可供选择。

Often the best course of action is quick prototyping on a much smaller set of data. A library like Spark MLlib makes the job of quick prototyping much easier.

通常情况下,最好的行动方案是在更小的数据集上快速建立原型。像Spark MLlib这样的库使快速原型设计的工作变得更加容易。

5.2. Model Hyper-Parameter Tuning

5.2 模型超参数调谐

A typical model consists of features, parameters, and hyper-parameters. Features are what we feed into the model as input data. Model parameters are variables which model learns during the training process. Depending on the model, there are certain additional parameters that we have to set based on experience and adjust iteratively. These are called model hyper-parameters.

一个典型的模型由特征、参数和超参数组成。特征是我们作为输入数据送入模型的东西。模型参数是模型在训练过程中学习的变量。根据不同的模型,有一些额外的参数,我们必须根据经验来设置并反复调整。这些被称为模型超参数。

For instance, the learning rate is a typical hyper-parameter in gradient-descent based algorithms. Learning rate controls how fast parameters are adjusted during training cycles. This has to be aptly set for the model to learn effectively at a reasonable pace.

例如,学习率是基于梯度白化算法的一个典型的超参数。学习率控制着在训练周期内调整参数的速度。为了让模型以合理的速度有效地学习,必须恰当地设置这个参数。

While we can begin with an initial value of such hyper-parameters based on experience, we have to perform model validation and manually tune them iteratively.

虽然我们可以根据经验从这种超参数的初始值开始,但我们必须进行模型验证并手动反复调整。

5.3. Model Performance

5.3.模型性能

A statistical model, while being trained, is prone to overfitting and underfitting, both causing poor model performance. Underfitting refers to the case where the model does not pick the general details from the data sufficiently. On the other hand, overfitting happens when the model starts to pick up noise from the data as well.

一个统计模型在被训练时,容易出现过拟合和欠拟合,两者都会导致模型性能不佳。欠拟合指的是模型没有充分地从数据中提取一般的细节。另一方面,当模型也开始从数据中提取噪声时,就会发生过拟合。

There are several methods for avoiding the problems of underfitting and overfitting, which are often employed in combination. For instance, to counter overfitting, the most employed techniques include cross-validation and regularization. Similarly, to improve underfitting, we can increase the complexity of the model and increase the training time.

有几种方法可以避免欠拟合和过拟合的问题,这些方法经常被结合使用。例如,为了应对过度拟合,最常用的技术包括交叉验证和正则化。同样地,为了改善欠拟合,我们可以提高模型的复杂性,增加训练时间。

Spark MLlib has fantastic support for most of these techniques like regularization and cross-validation. In fact, most of the algorithms have default support for them.

Spark MLlib对大多数这些技术都有很好的支持,比如正则化和交叉验证。事实上,大多数的算法都有对它们的默认支持。

6. Spark MLlib in Comparision

6.Spark MLlib的比较

While Spark MLlib is quite a powerful library for machine learning projects, it is certainly not the only one for the job. There are quite a number of libraries available in different programming languages with varying support. We’ll go through some of the popular ones here.

虽然Spark MLlib是一个相当强大的机器学习项目的库,但它肯定不是唯一的工作。在不同的编程语言中,有相当多的库可以使用,并有不同的支持。我们将在这里介绍一些流行的库。

6.1. Tensorflow/Keras

6.1.Tensorflow/Keras

Tensorflow is an open-source library for dataflow and differentiable programming, widely employed for machine learning applications. Together with its high-level abstraction, Keras, it is a tool of choice for machine learning. They are primarily written in Python and C++ and primarily used in Python. Unlike Spark MLlib, it does not have a polyglot presence.

Tensorflow是一个开源的数据流和可微分编程的库,广泛用于机器学习应用。连同其高级抽象Keras,它是机器学习的首选工具。它们主要是用Python和C++编写的,并主要在Python中使用。与Spark MLlib不同,它没有多角化的存在。

6.2. Theano

6.2.Theano

Theano is another Python-based open-source library for manipulating and evaluating mathematical expressions – for instance, matrix-based expressions, which are commonly used in machine learning algorithms. Unlike Spark MLlib, Theano again is primarily used in Python. Keras, however, can be used together with a Theano back end.

Theano是另一个基于Python的开源库,用于操作和评估数学表达式 – 例如,基于矩阵的表达式,这在机器学习算法中是常用的。与Spark MLlib不同,Theano同样主要用于Python。然而,Keras可以和Theano后端一起使用。

6.3. CNTK

6.3.CNTK

Microsoft Cognitive Toolkit (CNTK) is a deep learning framework written in C++ that describes computational steps via a directed graph. It can be used in both Python and C++ programs and is primarily used in developing neural networks. There’s a Keras back end based on CNTK available for use that provides the familiar intuitive abstraction.

微软认知工具包(CNTK)是一个用C++编写的深度学习框架,通过有向图描述计算步骤。它可以在Python和C++程序中使用,主要用于开发神经网络。有一个基于CNTK的Keras后端可供使用,它提供了我们熟悉的直观抽象。

7. Conclusion

7.结语

To sum up, in this tutorial we went through the basics of machine learning, including different categories and workflow. We went through the basics of Spark MLlib as a machine learning library available to us.

总而言之,在本教程中,我们经历了机器学习的基础知识,包括不同的类别和工作流程。我们经历了Spark MLlib作为一个机器学习库可供我们使用的基础知识。

Furthermore, we developed a simple machine learning application based on the available dataset. We implemented some of the most common steps in the machine learning workflow in our example.

此外,我们根据现有的数据集开发了一个简单的机器学习应用。我们在例子中实现了机器学习工作流程中的一些最常见的步骤。

We also went through some of the advanced steps in a typical machine learning project and how Spark MLlib can help in those. Finally, we saw some of the alternative machine learning libraries available for us to use.

我们还经历了一个典型的机器学习项目中的一些高级步骤,以及Spark MLlib如何在这些方面提供帮助。最后,我们看到了一些可供我们使用的替代机器学习库。

As always, the code can be found over on GitHub.

一如既往,代码可以在GitHub上找到over。