1. Overview

1.概述

Modern database systems are tailored to guarantee a set of capabilities such as reliability, consistency, high throughput, and so on by leveraging sophisticated storage engines for writing and reading data.

现代数据库系统是为保证一系列的能力而定制的,如可靠性、一致性、高吞吐量,等等,通过利用复杂的存储引擎来写入和读取数据。

In this tutorial, we’ll dive deep into the internals of the storage engine used by Apache Cassandra, which is designed for write-heavy workloads while maintaining good performance for reads as well.

在本教程中,我们将深入了解Apache Cassandra所使用的存储引擎的内部结构,该引擎专为写量大的工作负载而设计,同时也为读量保持良好的性能。

2. Log-Structured Merge-Tree (LSMT)

2.Log-Structured Merge-Tree(LSMT)

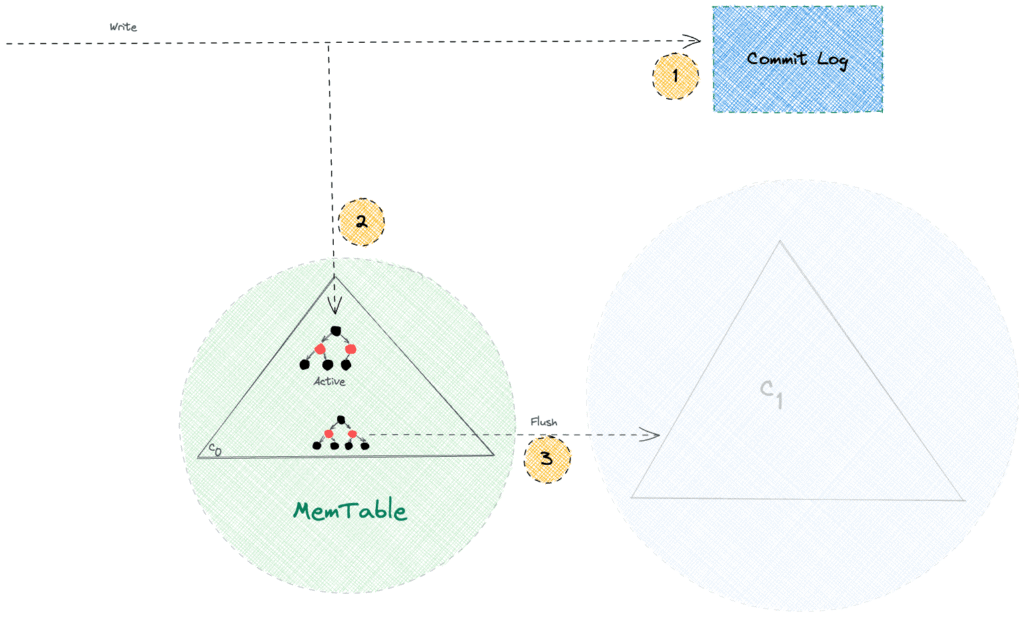

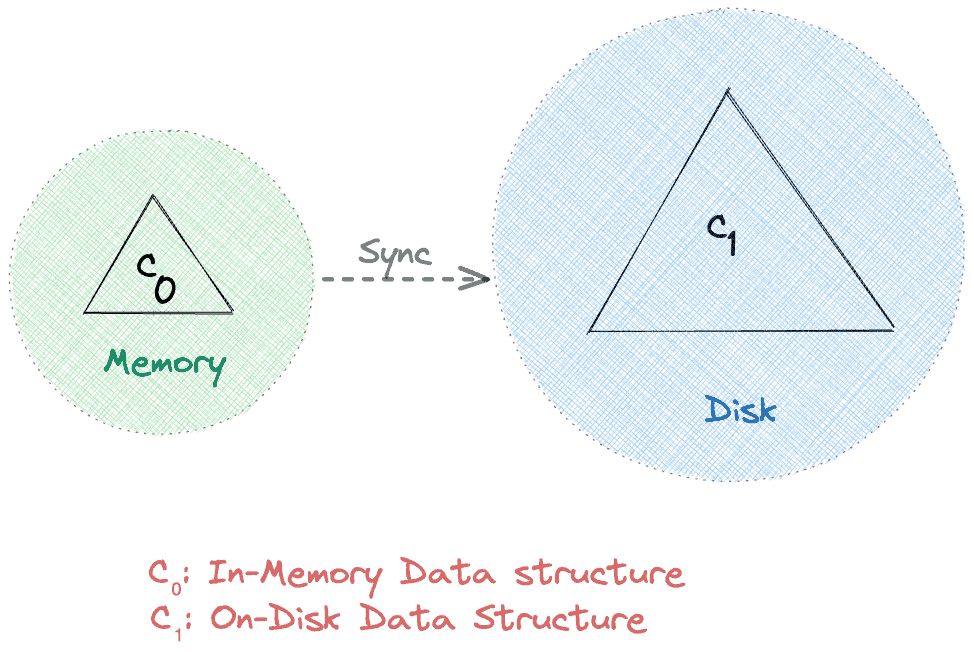

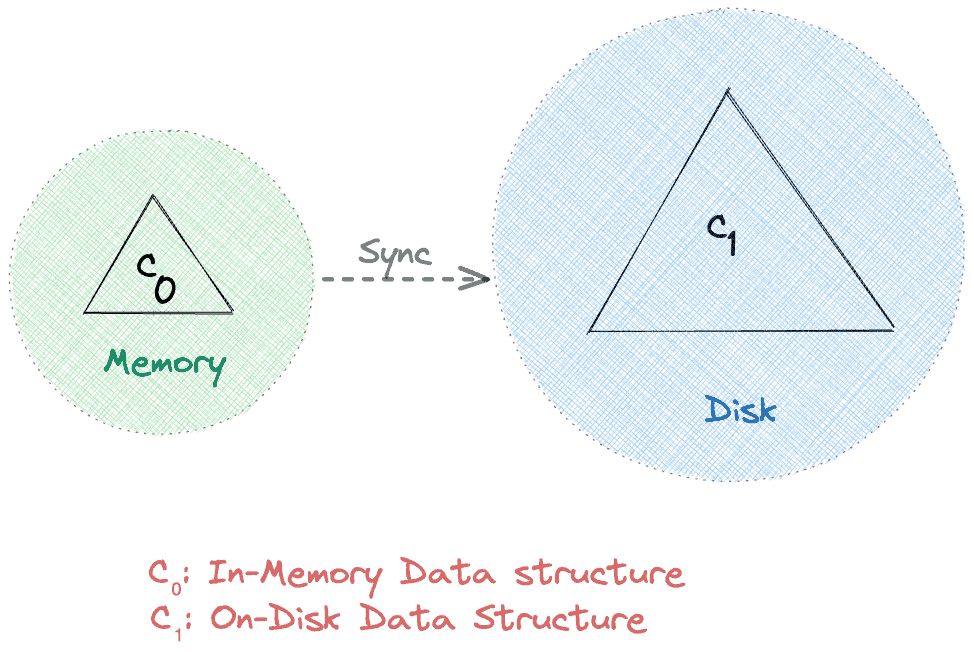

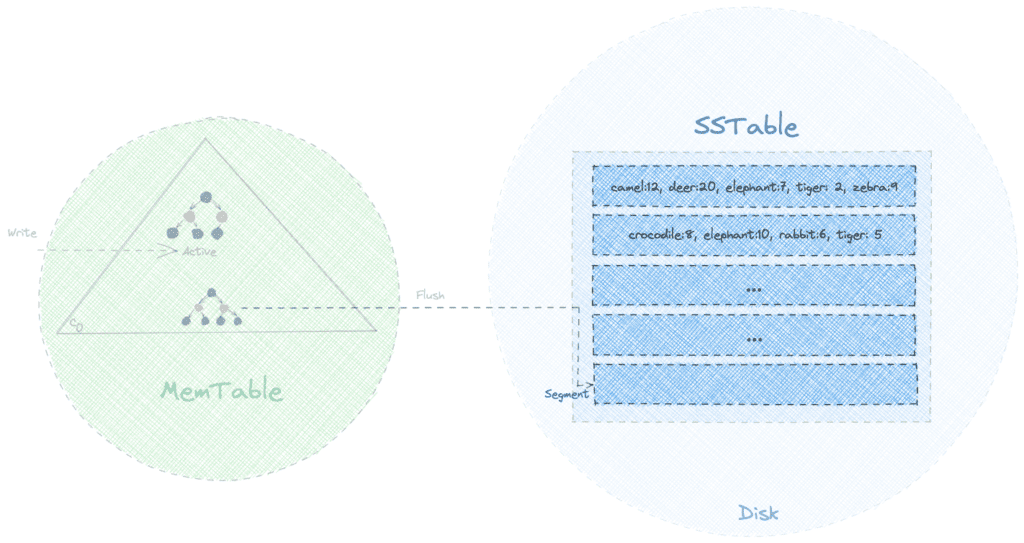

Apache Cassandra leverages a two-level Log-Structured Merge-Tree based data structure for storage. At a high level, there are two tree-like components in such an LSM tree, an in-memory cache component (C0) and an on-disk component (C1):

Apache Cassandra利用一种基于两级Log-Structured Merge-Tree的数据结构进行存储。在高层次上,这样的LSM树有两个树状组件,一个是内存缓存组件(C0),一个是磁盘组件(C1):

Reading and writing directly from memory is usually faster than the disk. Therefore, by design, all requests hit C0 before reaching out to C1. Moreover, a sync operation persists data from C0 to C1 periodically. As a result, it uses network bandwidth efficiently by reducing the I/O operations.

直接从内存读写通常比磁盘快。因此,在设计上,所有的请求在到达C0之前都会先打到C1。此外,同步操作周期性地将数据从C0持续到C1。因此,它通过减少I/O操作有效地使用网络带宽。

In the following sections, we’ll learn more about the C0 and C1 data structures of the two-level LSM tree in Apache Cassandra, commonly known as MemTable and SSTable, respectively.

在下面的章节中,我们将进一步了解Apache Cassandra中两级LSM树的C0和C1数据结构,通常分别被称为MemTable和SSTable。

3. MemTable

3.记忆表

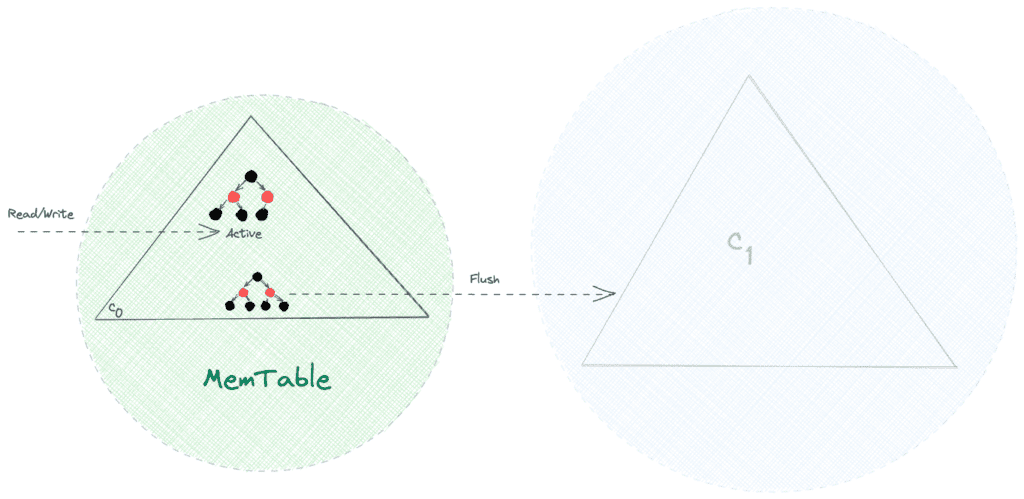

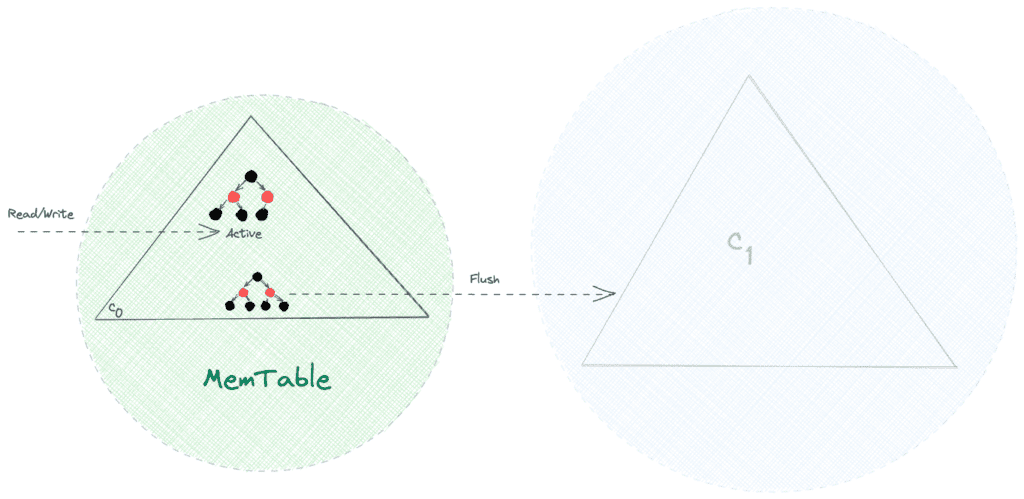

As the name suggests, MemTable is a memory-resident data structure such as a Red-black tree with self-balancing binary search tree properties. As a result, all the read and write operations, namely, search, insert, update, and delete, are achievable with O(log n) time complexity.

顾名思义,MemTable是一个驻留在内存中的数据结构,例如具有自平衡二进制搜索树特性的Red-black树。因此,所有的读写操作,即搜索、插入、更新和删除,都可以用O(log n)的时间复杂度实现。

Being an in-memory mutable data structure, MemTable makes all the writes sequential and allows for fast write operations. Further, due to the typical constraints of physical memory, such as limited capacity and volatile nature, we need to persist data from MemTable to disk:

作为一个内存中的可变数据结构,MemTable使得所有的写操作都是连续的,并允许快速写操作。此外,由于物理内存的典型限制,如有限的容量和易变性,我们需要将数据从MemTable持久化到磁盘:

Once the size of MemTable reaches a threshold value, all the r/w requests switch to a new MemTable, while the old one is discarded after flushing it onto the disk.

一旦MemTable的大小达到一个阈值,所有的r/w请求就会切换到一个新的MemTable,而旧的MemTable在冲到磁盘上之后就会被丢弃。

So far, so good! We can handle a large number of writes efficiently. However, what happens, if the node crashes before a flush operation? Well, it’s simple — we’d lose the data that isn’t flushed to disk yet.

到目前为止,一切都很好!我们可以有效地处理大量的写操作。然而,如果节点在刷新操作之前崩溃了,会发生什么?嗯,这很简单–我们会丢失尚未刷新到磁盘的数据。

In the next section, we’ll see how Apache Cassandra solves this problem by using the concept of Write-Ahead Logs (WAL).

在下一节中,我们将看到Apache Cassandra如何通过使用Write-Ahead Logs(WAL)的概念来解决这个问题。

4. Commit Log

4.承诺日志

Apache Cassandra defers the flush operation that persists data from memory to disk. Hence, an unexpected node or process crash can lead to data loss.

Apache Cassandra推迟了将数据从内存持久化到磁盘的刷新操作。因此,一个意外的节点或进程崩溃会导致数据丢失。

Durability is a must-have capability for any modern database system, and Apache Cassandra is no exception. It guarantees durability by ensuring all writes persist onto the disk in an append-only file called Commit Log. Thereafter, it uses MemTable as a write-back cache in the write-path:

持久性是任何现代数据库系统必须具备的能力,Apache Cassandra也不例外。它通过确保所有的写操作在磁盘上持续存在于一个名为Commit Log的纯附加文件中来保证持久性。此后,它使用MemTable作为写路径中的回写缓存。

We must note that append-only operations are fast as they avoid random seeks on the disk. So, the Commit Log brings durability capability without compromising the performance of writes. Further, Apache Cassandra refers to the Commit Log only during a crash recovery scenario, while the regular read requests don’t go to the Commit Log.

我们必须注意到,只附加的操作是快速的,因为它们避免了磁盘上的随机搜索。所以,Commit Log带来了持久性能力,而不影响写的性能。此外,Apache Cassandra只在崩溃恢复的情况下参考Commit Log,而普通的读取请求不会进入Commit Log。

5. SSTable

5.证券公司

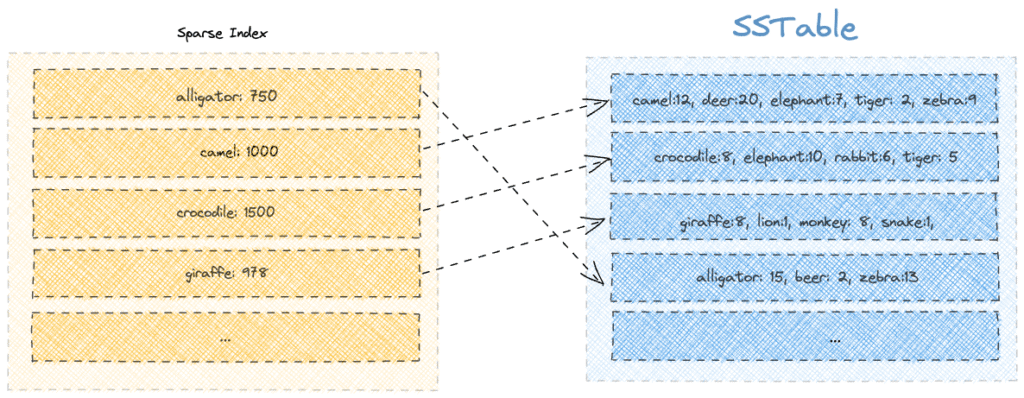

Sorted String Table (SSTable) is the disk-resident component of the LSM tree used by the Apache Cassandra storage engine. It derives its name from a similar data structure, first used by Google’s BigTable database, and indicates that the data is available in a sorted format. Generally speaking, each flush operation from the MemTable generates a new immutable segment in the SSTable.

分类字符串表(SSTable)是Apache Cassandra存储引擎使用的LSM树的磁盘驻留组件。它的名字来源于一个类似的数据结构,首先由谷歌的BigTable数据库使用,并表示数据是以排序的格式提供的。一般来说,MemTable的每一次刷新操作都会在SSTable中生成一个新的不可变的段。

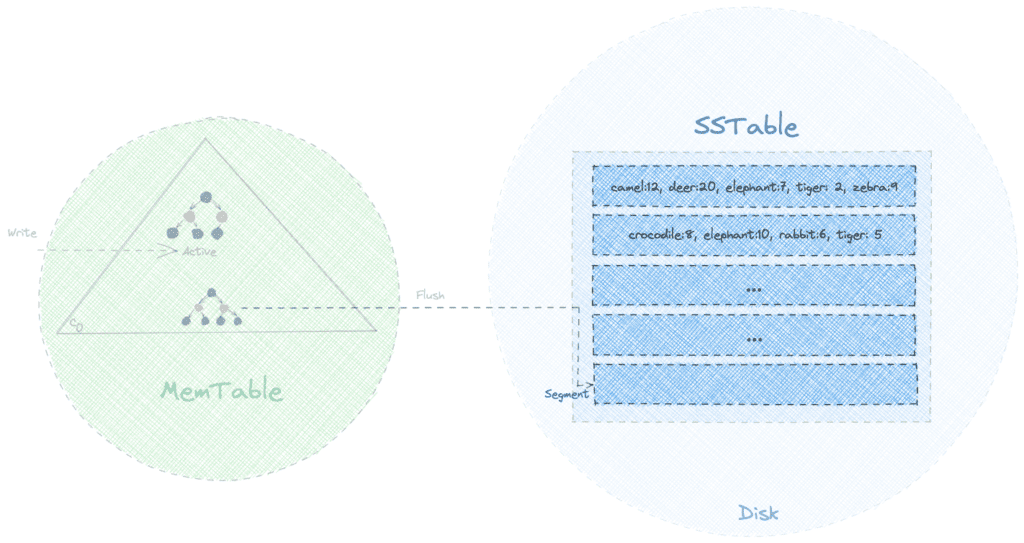

Let’s try to visualize how an SSTable would look while containing data about the count of various animals kept in a zoo:

让我们尝试可视化一个SSTable的样子,同时包含动物园中饲养的各种动物的数量数据:

Though the segments are sorted by keys, nevertheless, the same key could be present in multiple segments. So if we’ve to look for a specific key, we need to start our search from the latest segment and return the result as soon as we find it.

虽然片段是按键排序的,但是,同一个键可能出现在多个片段中。因此,如果我们要寻找一个特定的键,我们需要从最新的片段开始搜索,一旦找到,就立即返回结果。

With such a strategy, read operations for the recently written keys would be fast. However, in the worst case, the algorithm executes with an O(N*log(K)) time complexity, where N is the total number of segments, and K is the segment size. As K is a constant, we can say that the overall time complexity is O(N), which is not efficient.

有了这样的策略,对最近写入的钥匙的读取操作就会很快。然而,在最坏的情况下,该算法执行的时间复杂度为O(N*log(K)),其中N是段的总数,K是段的大小。由于K是一个常数,我们可以说总体时间复杂度是O(N),这并不高效。

In the following few sections, we’ll learn how Apache Cassandra optimizes the read operations for SSTable.

在下面几节中,我们将学习Apache Cassandra如何优化SSTable的读取操作。

6. Sparse Index

6.稀疏指数

Apache Cassandra maintains a sparse index to limit the number of segments it needs to scan while looking for a key.

Apache Cassandra维护了一个稀疏索引,以限制它在寻找一个键时需要扫描的段的数量。

Each entry in the sparse index contains the first member of a segment, along with its page offset position on the disk. Further, the index is maintained in memory as a B-Tree data structure so that we can search for an offset in the index with O(log(K)) time complexity.

稀疏索引中的每个条目都包含了一个段的第一个成员,以及它在磁盘上的页偏移位置。此外,该索引在内存中以B-Tree数据结构的形式进行维护,因此我们可以用O(log(K))的时间复杂度来搜索索引中的偏移。

Let’s say we want to search for the key “beer.” We’ll start by searching for all the keys in the sparse index that’d come before the word “beer.” After that, using the offset value, we’ll look into only a limited number of segments. In this case, we’ll look into the fourth segment where the first key is “alligator”:

比方说,我们想搜索 “啤酒 “这个键。我们将开始搜索稀疏索引中所有在 “啤酒 “这个词之前的键。之后,使用偏移值,我们将只查找有限数量的片段。在这个例子中,我们将搜索第四段,其中第一个键是 “alligator”。

On the other hand, if we had to search for an absent key such as “kangaroo,” we’d have to look through all the segments in vain. So, we realize that using a sparse index optimizes the search to a limited extent.

另一方面,如果我们要搜索一个不存在的键,如 “袋鼠”,我们就不得不徒劳地翻阅所有的片段。因此,我们意识到,使用稀疏索引可以在有限的范围内优化搜索。

Moreover, we should note that SSTable allows the same key to be present in different segments. Therefore, with time, more and more updates will happen for the same key, thereby creating duplicate keys in sparse index too.

此外,我们应该注意,SSTable允许同一个键出现在不同的段中。因此,随着时间的推移,越来越多的更新会发生在同一个键上,从而也会在稀疏索引中产生重复的键。

In the following sections, we’ll learn how Apache Cassandra addresses these two problems with the help of bloom filters and compaction.

在下面的章节中,我们将学习Apache Cassandra如何借助于Bloom过滤器和压缩来解决这两个问题。

7. Bloom Filter

7.布鲁姆过滤器

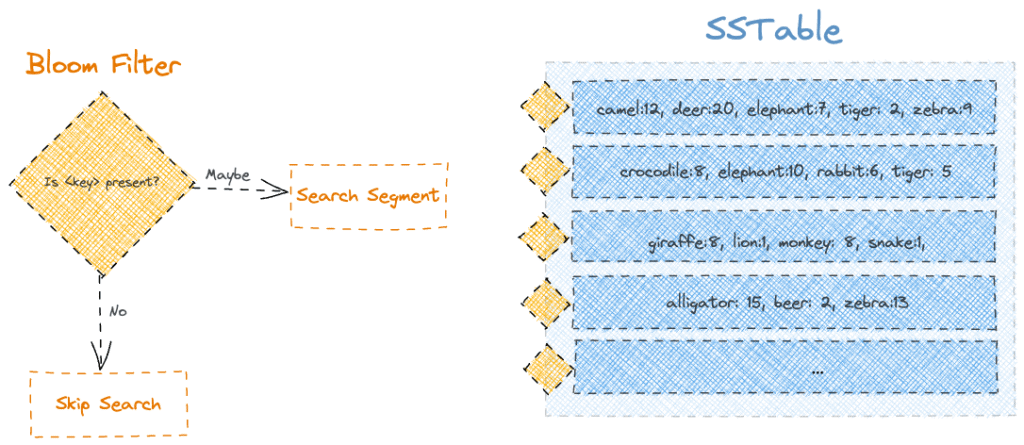

Apache Cassandra optimizes the read queries using a probabilistic data structure known as a bloom filter. Simply put, it optimizes the search by first performing a membership check for a key using a bloom filter.

Apache Cassandra 使用被称为bloom filter的概率数据结构来优化读取查询。简单地说,它通过首先使用bloom过滤器对一个键执行成员检查来优化搜索。

So, by attaching a bloom filter to each segment of the SSTable, we’d get significant optimizations for our read queries, especially for keys that aren’t present in a segment:

因此,通过给SSTable的每一个段附加一个Bloom filter,我们可以对我们的读取查询进行显著的优化,特别是对于那些不存在于一个段中的键。

As bloom filters are probabilistic data structures, we could get “Maybe” as a response, even for missing keys. However, if we get “No” as a response, we can be sure that the key’s definitely missing.

由于Bloom filter是一种概率数据结构,我们可以得到 “也许 “作为响应,即使是缺失的键。然而,如果我们得到 “No “的回应,我们就可以确定这个键肯定是丢失的。

Despite their limitations, we can plan to improve the accuracy of bloom filters by allocating larger storage space for them.

尽管有其局限性,我们可以计划通过为其分配更大的存储空间来提高bloom过滤器的准确性。

8. Compaction

8.压实

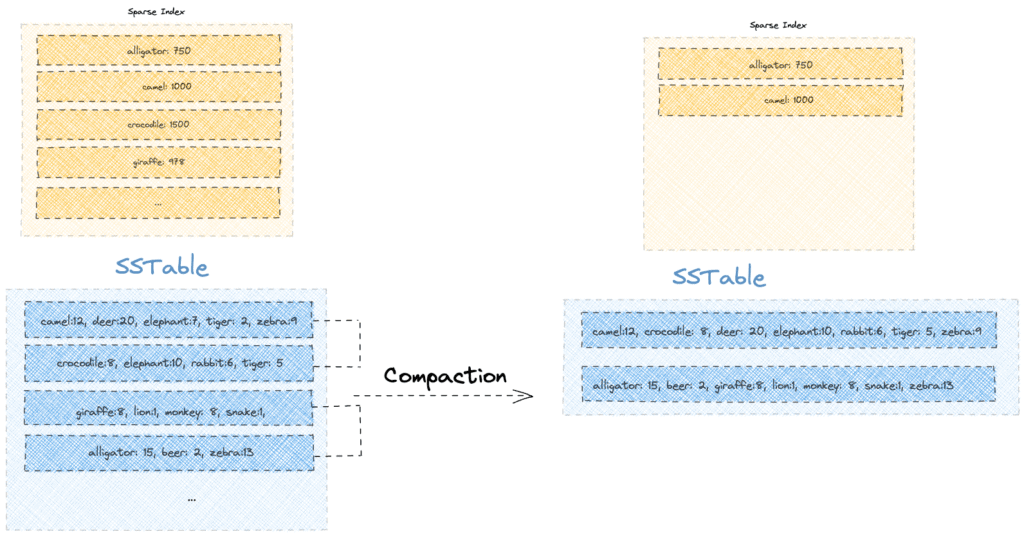

Despite using bloom filters and a sparse index, the performance of the read queries would degrade with time. That’s because the number of segments containing different versions of a key will likely grow with each MemTable flush operation.

尽管使用了Bloom过滤器和稀疏索引,读取查询的性能会随着时间的推移而降低。这是因为每次MemTable刷新操作时,包含不同版本的键的段的数量可能会增加。

To solve the issue, Apache Cassandra runs a background process of compaction that merges smaller sorted segments into larger segments while keeping only the latest value for each key. So, the compaction process gives the dual benefit of faster reads with lesser storage.

为了解决这个问题,Apache Cassandra 在后台运行一个压缩过程,将较小的排序段合并成较大的段,同时只保留每个键的最新值。因此,压实过程带来了双重好处,即以更少的存储量实现更快的读取。

Let’s see what a single run of compaction would look like on our existing SSTable:

让我们看看在我们现有的SSTable上进行一次压实会是什么样子。

We notice that the compaction operation reclaimed some space by keeping only the latest version. For instance, old versions of keys such as “elephant” and “tiger” are no longer present, thereby freeing up disk space.

我们注意到,压缩操作只保留了最新版本,从而回收了一些空间。例如,诸如 “大象 “和 “老虎 “等键的旧版本不再存在,从而释放出了磁盘空间。

Additionally, the compaction process enables the hard deletion of keys. While a delete operation would mark a key with a tombstone, the actual deletion is deferred until compaction.

此外,压实过程可以实现对键的硬删除。虽然删除操作会用墓碑来标记一个键,但实际的删除被推迟到压实过程。

9. Conclusion

9.结语

In this article, we explored the internal components of Apache Cassandra’s storage engine. While doing so, we learned about advanced data structure concepts such as LSM Tree, MemTable, and SSTable. Moreover, we also learned a few optimization techniques using Write-Ahead Logging, Bloom Filters, Sparse Index, and Compaction.

在这篇文章中,我们探讨了Apache Cassandra存储引擎的内部组件。在此过程中,我们了解了高级数据结构概念,如LSM树、MemTable和SSTable。此外,我们还学习了一些优化技术,如Write-Ahead Logging、Bloom Filters、Sparse Index和Compaction。